Last week Perplexity joined Anthropic, Cursor, Google and OpenAI in offering a $200 “Max” subscription. We are clearly entering the next level of subscription pricing, and I am quite confident this is only the beginning. The more complex the AI models become, and the longer they can run autonomously without user interaction, the more costly it will be to keep them running. Claude 4 Opus is an excellent example of this: prompted the right way, it can work for literally an hour, producing code and documentation of very high quality.

I believe we will see the next price bump for AI models in 9-12 months, when the next generation of models arrives. In the short term we should see new models introduced by Google, xAI and OpenAI, and I predict they will follow the same $200 pricing as Anthropic with their Max subscription. These models will probably still only be able to work for an hour or so, and they will not be able to fully replace a human coworker. But in 9-12 months I predict the models will be able to run autonomously for several hours, probably more, and will produce much higher output quality than is possible today. Let’s say that a subscription for such a model costs $5,000 per month. The business model will then change from augmenting existing workers with a new tool to replacing one or more workers with the new tool. This is when the large shift from manual labour to AI automation begins.

Thank you for being a Tech Insights subscriber!

Listen to Tech Insights on Spotify: Tech Insights 2025 Week 28 on Spotify

THIS WEEK’S NEWS:

- Cloudflare Launches Pay Per Crawl System for AI Content Access

- Amazon Deploys 1 Million Robots and Launches DeepFleet AI Model for Warehouse Coordination

- Cursor Launches AI Coding Agents on Web and Mobile Platforms

- Perplexity Introduces $200 Max Subscription for Power Users

- AI-Generated Band Velvet Sundown Admits to “Art Hoax” After Viral Spotify Success

- Baidu Open Sources ERNIE 4.5 AI Model Family

- Columbia University’s AI System Achieves First Pregnancy for Couple After 18-Year Struggle

- Meta Hires 11 Top AI Researchers from OpenAI, DeepMind, and Anthropic for AGI Push

- Kyutai Labs Releases Open-Source Streaming Text-to-Speech Model with Ultra-Low Latency

Cloudflare Launches Pay Per Crawl System for AI Content Access

https://blog.cloudflare.com/introducing-pay-per-crawl

The News:

- Cloudflare introduced Pay Per Crawl in private beta, enabling website owners to charge AI companies for accessing their content and creating new revenue streams from automated crawling.

- Publishers can set flat per-request pricing across their entire site with three options: allow free access, charge a set fee, or block crawlers entirely.

- The system uses HTTP 402 Payment Required status code to enforce payment, requiring AI crawlers to authenticate and pay before accessing content.

- Major publishers including TIME, BuzzFeed, Quora, Stack Overflow, The Atlantic, and Webflow have already signed up for the beta program.

- Cloudflare acts as Merchant of Record, handling all payment processing and billing while providing the technical infrastructure.

- New Cloudflare customers now also have AI crawler blocking enabled by default, requiring explicit permission for access.
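The 402-based flow described above can be sketched from the crawler's side. This is a minimal illustration, not Cloudflare's implementation: the header names `crawler-price` and `crawler-exact-price` are assumptions based on Cloudflare's announcement and should be verified against the linked post, and the function name and budget logic are hypothetical.

```python
from decimal import Decimal
from typing import Dict, Mapping, Optional

# Assumed header names; verify against Cloudflare's post before relying on them.
PRICE_HEADER = "crawler-price"              # price quoted in the 402 response
EXACT_PRICE_HEADER = "crawler-exact-price"  # price the crawler agrees to pay on retry


def parse_usd(value: str) -> Decimal:
    """Parse a price string such as 'USD 0.01' into a Decimal amount."""
    currency, amount = value.split()
    if currency != "USD":
        raise ValueError(f"unsupported currency: {currency}")
    return Decimal(amount)


def paid_retry_headers(
    status: int, headers: Mapping[str, str], budget: Decimal
) -> Optional[Dict[str, str]]:
    """Return the extra headers for a paid retry, or None if no retry should happen.

    Assumes header names have already been lower-cased by the caller.
    """
    if status != 402:
        return None  # not a payment request (content served, or another error)
    quoted = headers.get(PRICE_HEADER)
    if quoted is None:
        return None  # 402 without a quoted price: nothing to agree to
    if parse_usd(quoted) > budget:
        return None  # too expensive for this crawler, so skip the page
    return {EXACT_PRICE_HEADER: quoted}


# Hypothetical response: the site asks for one cent, the crawler allows up to five.
print(paid_retry_headers(402, {"crawler-price": "USD 0.01"}, Decimal("0.05")))
```

The point of the sketch is that the publisher's three options (free, paid, blocked) reduce to an ordinary HTTP exchange for the crawler: a normal response, a 402 with a price, or a refusal.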

My take: Cloudflare powers about 20% of all web pages today, and this puts an immediate halt to all web crawling of publicly available data for training new language models. Web scraping has increased rapidly over the past few years, and Cloudflare CEO Matthew Prince describes it best:

- 10 years ago: Google crawled 2 pages per visitor

- 6 months ago: Google crawled 6 pages per visitor, OpenAI 250:1, Anthropic 6,000:1

- Now: Google crawls 18 pages per visitor, OpenAI 1,500:1, Anthropic 60,000:1
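To make the six-month change concrete, the growth factors can be computed directly from the figures quoted above. The snippet below only does that arithmetic; the function and variable names are illustrative.

```python
from typing import Dict

# Pages crawled per visitor, as quoted in the list above.
six_months_ago = {"Google": 6, "OpenAI": 250, "Anthropic": 6_000}
now = {"Google": 18, "OpenAI": 1_500, "Anthropic": 60_000}


def growth_factors(before: Dict[str, int], after: Dict[str, int]) -> Dict[str, float]:
    """Return how many times larger each crawl ratio is now than it was before."""
    return {name: after[name] / before[name] for name in before}


for name, factor in growth_factors(six_months_ago, now).items():
    print(f"{name}: {factor:.0f}x more pages crawled per visitor than six months ago")
```

In other words, Google's ratio tripled while OpenAI's grew sixfold and Anthropic's tenfold over the same period.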

If you have the time, go watch the video with Matthew Prince; it’s well worth it. This change will put a hard stop to all AI crawlers, and it will be very interesting to see how this develops going forward, both for AI model development and for the business of content creators.

Read more:

- Axios’ Sara Fischer in conversation with Cloudflare’s Matthew Prince – YouTube

- What data was used to train Sora WSJ – YouTube

Amazon Deploys 1 Million Robots and Launches DeepFleet AI Model for Warehouse Coordination

https://www.aboutamazon.com/news/operations/amazon-million-robots-ai-foundation-model

The News:

- Amazon launched DeepFleet, a generative AI foundation model that coordinates robot movements across fulfillment centers, improving travel efficiency by 10% to reduce delivery times and operational costs.

- The company deployed its 1 millionth robot to a fulfillment center in Japan, bringing its robotic workforce close to matching its 1.56 million human employees across 300+ facilities worldwide.

- DeepFleet functions as an “intelligent traffic management system” that reduces congestion and optimizes robot navigation paths using Amazon’s internal logistics data and AWS SageMaker tools.

- The AI model continuously learns and adapts over time, allowing Amazon to store products closer to customers and process orders faster while reducing energy usage.

- Amazon’s diverse robot fleet includes Hercules (lifts 1,250 pounds), Pegasus (package conveyor), Proteus (fully autonomous navigation), and Vulcan (force-feedback sensors for tight spaces).

- Robots now assist in 75% of Amazon’s global deliveries, with the company averaging 670 employees per facility in 2024, the lowest in 16 years, while packages per employee increased from 175 to 3,870 over the past decade.

My take: Today most warehouse automation systems still use traditional algorithms for robot coordination, whereas Amazon’s new foundation model approach allows for continuous learning and adaptation. This is yet another example of companies moving from traditional, “mature” machine learning models, where each model has to be meticulously crafted for each unique domain, to generalized foundation models based on the transformer architecture, with results such as a 10% improvement in robot travel efficiency. If your organization is still using traditional machine learning models and has not yet tried transformer foundation models, please feel free to reach out to me and I can show you how to get started.

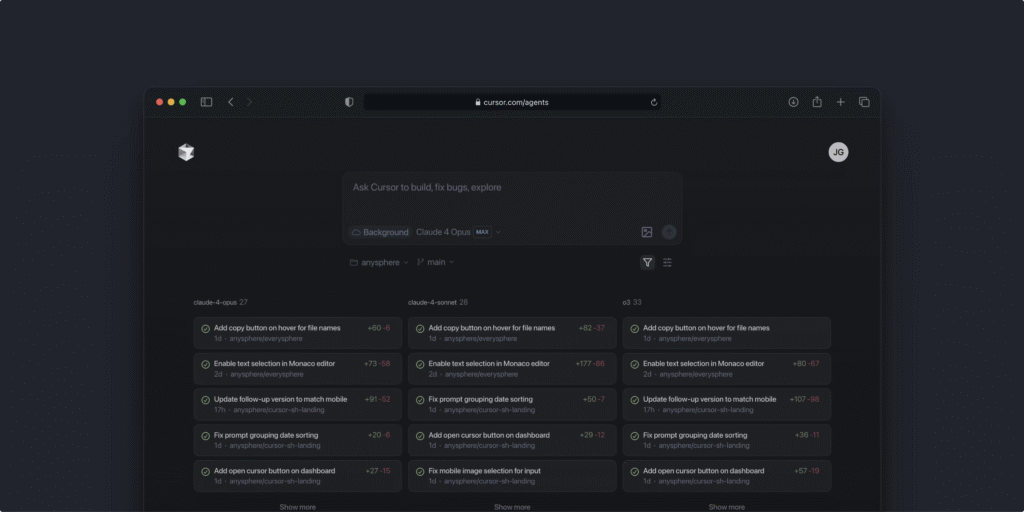

Cursor Launches AI Coding Agents on Web and Mobile Platforms

https://cursor.com/blog/agent-web

The News:

- Cursor launched Cursor Agents on web and mobile browsers, extending its AI coding assistant beyond the desktop IDE to enable developers to write code, fix bugs, and manage projects from any device.

- Developers can run background coding tasks while away from their computers, launching bug fixes, building new features, or answering complex codebase questions without active supervision.

- The web app works across desktop, tablet, and mobile browsers and can be installed as a Progressive Web App (PWA) for native app experience on iOS and Android devices.

- Team collaboration features allow members with repository access to review agent diffs, create pull requests, and merge changes directly from the web interface.

- Slack integration enables users to trigger agents by mentioning “@Cursor” in conversations and receive notifications when tasks complete.

- Users can run multiple agents in parallel to compare results, include images in requests, and add follow-up instructions for richer context.

My take: I have used literally trillions of tokens with the best coding model in the world, Claude 4 Opus, and I would NEVER let it submit any code straight into my repositories. I feel I am getting quite good at prompting it, but the process still takes time and the source code is never production quality on the first try. It takes several iterations to go from the initial code produced by Claude 4 Opus to production-ready code. I can understand why a company such as Cursor would want to release something like Agents on web and mobile browsers, but I cannot see how it would work well in practice. Cursor only gets access to Claude 4 Sonnet, which is an inferior model compared to Claude 4 Opus. This is not a model you will send out to work autonomously, and it is definitely not something you would like to spin off from your mobile phone. If you use Claude 4 Sonnet you need to develop in tiny steps, meticulously reviewing all code, and this is not something you do on a mobile phone. In the past two weeks Cursor has also changed their licensing terms and business model twice, and the latest update on their website now says that the $20 license allows “Extended limits on agent”, where it previously said “unlimited”. Two months ago I wholeheartedly recommended Cursor as the go-to AI development environment for most use cases; today, based on their licensing changes, I advise against it. If you want to develop software with AI agents, Claude Code is the way to go. It’s costly but it’s worth it. If you only want tab-completion, go with GitHub Copilot.


Perplexity Introduces $200 Max Subscription for Power Users

https://www.perplexity.ai/hub/blog/introducing-perplexity-max

The News:

- Perplexity Max is a new $200 per month subscription designed for professionals, researchers, and content creators who need advanced AI tools and unlimited usage.

- Subscribers receive unlimited access to Labs, a workspace for building dashboards, spreadsheets, reports, and simple web applications, removing previous query limits.

- The plan includes early access to Comet, Perplexity’s upcoming AI-powered browser, and priority access to new features and agent-based tools.

- Max users can run queries on advanced AI models by default, including OpenAI’s o3-pro and Anthropic’s Claude Opus 4, with more models to be added.

- The subscription also offers priority customer support and access to exclusive premium data sources.

My take: We now have Claude Max, OpenAI Pro, Google AI Ultra, Cursor Ultra and Perplexity Max, all at around $200 per month. When it comes to Perplexity, I don’t think switching to Claude 4 Opus or o3-pro would make a big difference, since Claude 4 Sonnet is pretty good at summarizing articles when prompted correctly. No, the main problem I have with Perplexity is that it has so much difficulty sorting out bad news sites from good ones. I use Perplexity quite a lot, and I have recently had to start adding lists of domains to ignore to get decent results from it. The situation with bad news sites has gotten exponentially worse as more AI-generated news floods the Internet. I cannot imagine anyone wanting to pay $200 for Perplexity Max, and the same actually goes for OpenAI Pro and Cursor Ultra. The only service in my opinion worth $200 today is Claude Max, where you can use Claude 4 Opus API credits worth up to $10,000 every month for a fixed price of $200. If you know how to get the most out of Claude 4 Opus, that subscription is a steal.

Read more:

- Perplexity launches a $200 monthly subscription plan | TechCrunch

- Perplexity joins Anthropic and OpenAI in offering a $200 per month subscription

- Perplexity Max tier launched for $200/month with unlimited Labs and access to advanced AI models – BusinessToday

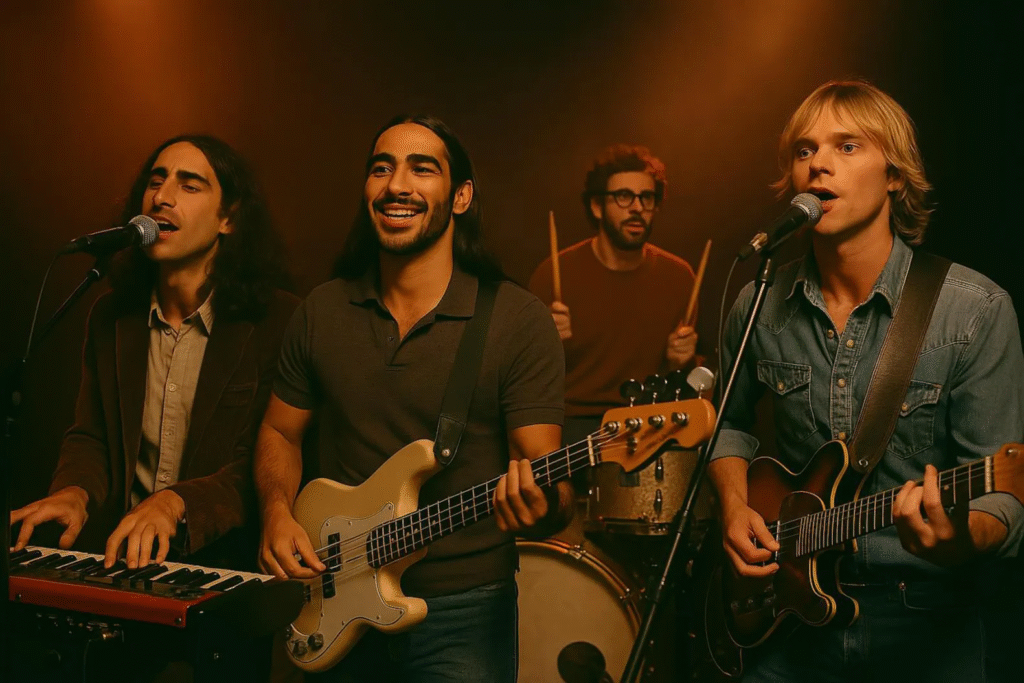

AI-Generated Band Velvet Sundown Admits to “Art Hoax” After Viral Spotify Success

https://www.rollingstone.com/music/music-features/velvet-sundown-ai-band-suno-1235377652

The News:

- The Velvet Sundown, a fictional psychedelic rock band, gained over 900,000 monthly listeners on Spotify using AI-generated music created with Suno’s platform. This case demonstrates how AI music can achieve mainstream streaming success without disclosure requirements.

- The band released two albums in June 2025 with no prior digital footprint, appearing across 30+ Spotify playlists and earning verified artist status. Their top track “Dust on the Wind” accumulated over 380,000 plays within weeks.

- Spokesperson Andrew Frelon initially denied AI usage on social media, calling accusations “lazy and baseless”. He later admitted to Rolling Stone that Suno was used for at least some tracks, stating “It’s marketing. It’s trolling”.

- The project utilized Suno’s “Persona” feature to maintain consistent vocal styling across tracks, similar to Timbaland’s AI artist TaTa. All band member photos and promotional materials were also AI-generated.

- Spotify currently has no requirements for AI music disclosure, while competitor Deezer flagged the content as “100% AI generated”.

My take: 100% AI-generated and over 900,000 monthly listeners. This news alone should spark an explosion of AI music production even greater than what we have seen so far. It’s definitely time for Spotify to flag AI-generated content, and also allow users to filter out 100% AI-generated content. But it’s getting hard to draw the line. Computer-generated sounds and rhythms are of course OK, so is it the AI-singing that’s the breaking point, or the combination? AI is about to change everything we know about music production, and it’s probably going to happen sooner than anyone expects.

Baidu Open Sources ERNIE 4.5 AI Model Family

https://yiyan.baidu.com/blog/posts/ernie4.5

The News:

- Baidu just released the ERNIE 4.5 model family as open source software under Apache 2.0 license, providing developers and researchers free access to multimodal AI models that process text, images, audio, and video content.

- The family includes 10 model variants ranging from 0.3 billion to 424 billion total parameters, with Mixture-of-Experts (MoE) architecture featuring 47B and 3B active parameters for the largest models.

- ERNIE-4.5-300B-A47B-Base outperforms DeepSeek-V3-671B-A37B-Base on 22 out of 28 benchmarks despite having significantly fewer total parameters (300B vs 671B).

- The smaller ERNIE-4.5-21B-A3B-Base model outperforms Qwen3-30B-A3B-Base on math and reasoning benchmarks including BBH and CMATH while using approximately 70% of Qwen3’s parameter count.
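The total versus active parameter counts in the comparisons above can be put side by side with a little arithmetic. The sketch below assumes the numbers can be read straight off the model names (total parameters in billions, then active parameters after the "A"); it says nothing about quality, only about how much of each Mixture-of-Experts model is used per token.

```python
from typing import Dict, Tuple

# (total parameters, active parameters) in billions, read from the model names.
# Treating the names as the source of these numbers is an assumption.
models: Dict[str, Tuple[int, int]] = {
    "ERNIE-4.5-300B-A47B": (300, 47),
    "DeepSeek-V3-671B-A37B": (671, 37),
    "ERNIE-4.5-21B-A3B": (21, 3),
    "Qwen3-30B-A3B": (30, 3),
}


def active_share(total_b: int, active_b: int) -> float:
    """Fraction of the model's parameters that are active for each token."""
    return active_b / total_b


def size_ratio(smaller_total_b: int, larger_total_b: int) -> float:
    """Total parameter count of the smaller model relative to the larger one."""
    return smaller_total_b / larger_total_b


for name, (total_b, active_b) in models.items():
    share = active_share(total_b, active_b)
    print(f"{name}: {active_b}B of {total_b}B parameters active ({share:.1%})")

# The "approximately 70%" comparison between the two small models.
print(f"ERNIE 21B vs Qwen3 30B: {size_ratio(21, 30):.0%} of the total parameters")
```

Both ERNIE models activate a larger share of their parameters per token than the models they are compared against, while being smaller overall.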

My take: Baidu ERNIE 4.5 was launched earlier this year as closed source, and now Baidu has done a complete reversal and released all models as open source under the Apache 2.0 license. So why is this an important release? Since it is open source, it can be post-trained and fine-tuned. Baidu posted both pre-training and post-training benchmarks, where the post-training benchmarks were done on “fine-tuned variants of the pre-trained model for specific modalities”. Now, it’s impossible to know exactly how Baidu post-trained their models. But if I were to guess, they probably used materials that were very close to the actual benchmark being tested. So when you look at the benchmarks where ERNIE-4.5 seems to beat DeepSeek-V3, GPT-4.1 and Qwen3, this was done using custom model variations trained for specific purposes. So what does this mean? It means that if you work in a company with access to a stack of H100 cards (at least 8 of them with 80GB each), then you could download ERNIE 4.5 and fine-tune it for your specific purposes, and it would perform very similarly to today’s top-performing models on your data.

Columbia University’s AI System Achieves First Pregnancy for Couple After 18-Year Struggle

https://edition.cnn.com/2025/07/03/health/ai-male-infertility-sperm-wellness

The News:

- Columbia University’s STAR (Sperm Tracking and Recovery) system uses AI to identify viable sperm in men with severe infertility, offering new hope for couples facing male fertility challenges at a fraction of traditional IVF costs.

- The system scans 8 million microscopic images in under one hour, successfully locating sperm cells that human technicians cannot detect even after days of searching. In the breakthrough case, STAR found 44 sperm cells in one hour while trained embryologists found zero after two days of analysis.

- STAR adapts astrophysics algorithms originally designed to detect new stars and planets, applying the same pattern recognition technology to identify rare sperm cells in semen samples. Dr. Zev Williams, who led the five-year development, describes it as “finding a needle hidden within a thousand haystacks”.

- The technology targets azoospermia, a condition affecting up to 15% of infertile men where no measurable sperm are present in semen samples. Traditional treatments require painful surgical extraction from the testes or using donor sperm.

- STAR costs approximately $3,000 compared to $15,000-$30,000 for single IVF cycles, making fertility treatment more accessible. The system is currently available only at Columbia University Fertility Center, with plans to expand to other facilities.

My take: Traditional treatment for severe male infertility relies on surgical sperm extraction, which involves removing portions of the testes and manually searching tissue samples. This method is invasive, painful, and can only be performed a limited number of times before causing permanent damage. The new AI-based STAR system avoids all of this by analyzing semen samples non-invasively. STAR is still in early testing, but the first pregnancy was just achieved with it. I think this is an amazing achievement by Columbia University Fertility Center, and I hope they help spread the results of their work globally.

Meta Hires 11 Top AI Researchers from OpenAI, DeepMind, and Anthropic for AGI Push

The News:

- Meta has formed a new unit, Meta Superintelligence Labs, to accelerate research toward artificial general intelligence (AGI). The group aims to consolidate Meta’s AI efforts and develop next-generation AI models, which could impact products across Meta’s platforms.

- The company recruited 11 leading AI researchers from OpenAI, DeepMind, Anthropic, and Google. Notable hires include Trapit Bansal (co-creator of OpenAI’s o-series models), Shuchao Bi (developed GPT-4o’s voice mode), Huiwen Chang (architect of image generation systems at Google Research), and Jack Rae (pre-training lead for Gemini at DeepMind).

- Alexandr Wang, former Scale AI CEO, will lead the new lab as Chief AI Officer. Nat Friedman, ex-GitHub CEO, will oversee AI products and applied research.

- Meta invested $14.3 billion in Scale AI as part of this initiative, bringing in Wang and several associates. The company is also in talks with AI startups like Perplexity AI and Runway.

- Reports indicate Meta offered highly competitive compensation, with some signing bonuses reportedly reaching $100 million, to attract top talent from competitors.

My take: Apparently Zuckerberg himself reached out to many of these people, offering $100 million bonuses if they joined Meta to work on AI. Maybe this will work, maybe it won’t. Team success is not only about having 11 of the smartest people in the same room; it’s about having a team of mixed competences who respect and listen to each other and work well as a unit. When Meta released Llama 4 earlier this year they cheated the benchmarks, publishing one model online that was specifically engineered to present well on user benchmarks and then releasing another model separately. With that culture in place, maybe it won’t matter if you hire 11 of the smartest people available. The people who choose to work in that place for the money are maybe not the people you want in a successful team creating the world’s best AI systems.

Read more:

- Meta’s Llama 4 ‘herd’ controversy and AI contamination, explained | ZDNET

- It’s Known as ‘The List’—and It’s a Secret File of AI Geniuses – WSJ

Kyutai Labs Releases Open-Source Streaming Text-to-Speech Model with Ultra-Low Latency

The News:

- Kyutai Labs released an open-source 1.6B parameter text-to-speech model that processes text streams in real-time, enabling developers to build voice applications with significantly reduced latency compared to existing solutions.

- The model achieves 220ms latency from receiving the first text token to generating audio output, with production deployments serving 32 simultaneous users at 350ms latency on L40S GPU hardware.

- Unlike other TTS models that require complete text input before processing, Kyutai TTS streams both text input and audio output, allowing it to start generating speech while an LLM is still producing text.

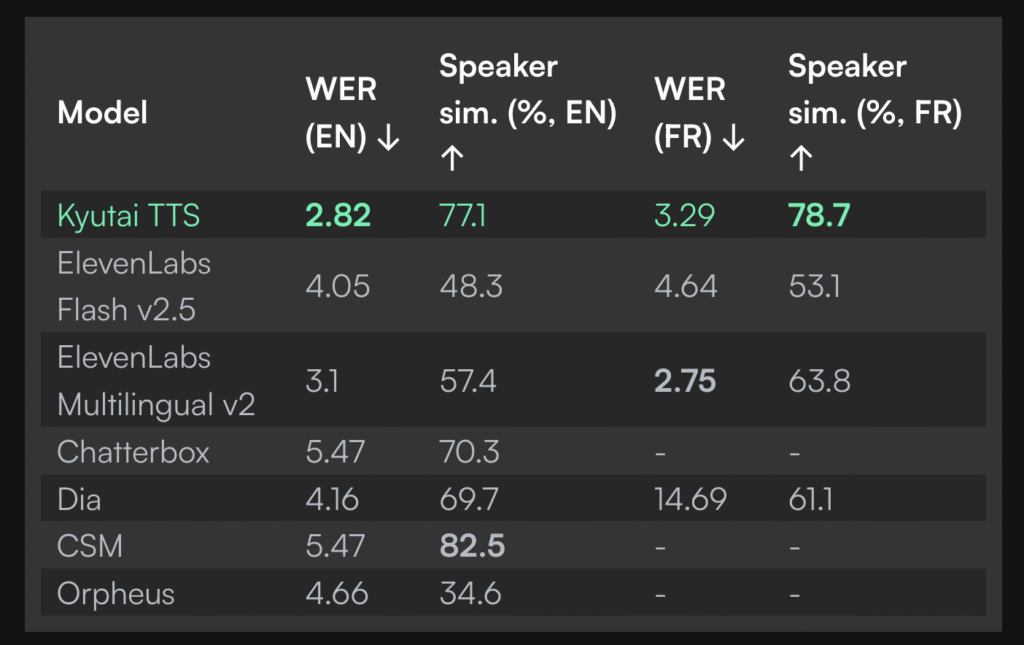

- Performance benchmarks show the model achieves 2.82% word error rate in English and 77.1% speaker similarity scores, outperforming ElevenLabs Flash v2.5 which scored 4.05% word error rate and 48.3% speaker similarity.

- The company also released Unmute, an open-source tool that wraps text LLMs with speech-to-text and text-to-speech capabilities, enabling voice conversations with any text-based language model.

- Voice cloning requires only a 10-second audio sample and includes word-level timestamps for applications like real-time subtitles and interruption handling.

My take: The way Kyutai TTS works is fundamentally different from competitors like ElevenLabs. When ElevenLabs and other companies perform “streaming”, they only stream audio output after receiving the complete text input. Kyutai TTS processes partial text as it arrives. User feedback has so far been very positive, with several people getting it to run very well on a single RTX 3090 card. Right now it’s only available in English and French though, so if you need other languages then this is not the model for you. Otherwise you can go ahead and download it and play around with it!
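The practical difference between full-text and streaming-text input shows up in the time until the first audio is heard. The toy simulation below is a sketch under stated assumptions: it uses no real TTS library, the 50 ms per word LLM speed is hypothetical, and the 220 ms figure from the announcement is applied to both variants purely for comparison.

```python
from typing import Iterator, List, Tuple

TTS_LATENCY_MS = 220  # from the announcement: first text token to first audio
MS_PER_TOKEN = 50     # hypothetical LLM output speed, for illustration only


def llm_stream(words: List[str], ms_per_token: int = MS_PER_TOKEN) -> Iterator[Tuple[int, str]]:
    """Yield (arrival_time_ms, word) pairs, simulating an LLM emitting one word at a time."""
    for index, word in enumerate(words, start=1):
        yield index * ms_per_token, word


def first_audio_full_text(words: List[str], tts_latency_ms: int = TTS_LATENCY_MS) -> int:
    """TTS that needs the complete text: it can only start after the last word arrives."""
    arrival_times = [arrival for arrival, _ in llm_stream(words)]
    return arrival_times[-1] + tts_latency_ms


def first_audio_streaming_text(words: List[str], tts_latency_ms: int = TTS_LATENCY_MS) -> int:
    """TTS that accepts partial text: it can start as soon as the first word arrives."""
    first_arrival, _ = next(llm_stream(words))
    return first_arrival + tts_latency_ms


sentence = "streaming text input lets speech start before the model has finished writing".split()
print(first_audio_full_text(sentence), "ms until first audio with full-text input")
print(first_audio_streaming_text(sentence), "ms until first audio with streaming text input")
```

With full-text input the wait grows with the length of the LLM's answer, while with streaming text input it stays constant, which is why this matters most for long, conversational responses.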

Read more: