Hello and welcome to a new episode of Tech Insights! The past week was fairly low on news. The TLDR version is that NotebookLM now has four audio formats, including the interesting new “debate” mode where each host picks a different side in a discussion; Google launched a small and super efficient embedding model called EmbeddingGemma for mobile and edge devices; and China now mandates that all AI-generated content be clearly marked as of September 1. Of course there was more news than that, but if you are in a hurry that was the gist of it.

Other than that we had the usual warning that AI will take away most entry-level jobs. This time it was Anthropic CEO Dario Amodei (again) in an interview with CNN, stressing that “the ability to summarize a document, analyze a bunch of sources and put it into a report, write computer code” can all be done by AI today, and that more entry-level jobs will be affected as the capabilities of AI systems increase. Amodei predicts AI tools could soon eliminate half of all white-collar, entry-level jobs, pushing the unemployment rate as high as 20%. His predictions are based on a clear shift in how companies use AI: previously most companies used AI for augmentation (chat) and not so much for automation (agents), but those figures are shifting rapidly. According to Amodei, Anthropic has measured that over 40% of all users now use AI for automation, and less than 60% use it for augmentation. “We can see where the trend is going, and that’s what’s driving some of the concern (about AI in the workforce)”. Depending on your current situation, these trends are either a good thing or a bad thing.

Last week I wrote about the amazing model Nano Banana by Google, and if you haven’t yet tried it you should. Just sign in to Google Gemini with a Google account and enable the Image mode. Last week I found three great tips that will make your time with Nano Banana much more efficient:

- Google released six official prompting tips for Nano Banana. Definitely read these through if you are working with design.

- Rob de Winter launched a Photoshop script for Nano Banana that lets you use it like a regular plugin within Photoshop! This is a true game changer, and if you have a Photoshop license, don’t miss this one! Check this video on how to use it: Nano Banana and Flux Kontext Script for Photoshop – YouTube

- PiXimperfect, one of my favorite YouTubers, published a good feature overview of how to use Nano Banana as a professional artist. Don’t miss it if you work with design: Nano Banana vs Photoshop: A Fair Comparison – YouTube

Thank you for being a Tech Insights subscriber!

Listen to Tech Insights on Spotify: Tech Insights 2025 Week 37 on Spotify

THIS WEEK’S NEWS:

- Switzerland Launches Open Source LLM Alternative to ChatGPT

- Google DeepMind Releases EmbeddingGemma for On-Device AI Applications

- China Mandates AI Content Labels on Social Media Platforms

- NotebookLM Launches Four Audio Overview Formats

- OpenAI Announces 2026 Jobs Platform to Challenge LinkedIn Talent Marketplace

- Anthropic Raises $13B at $183B Valuation

- Lovable Launches Voice Mode for App Building

- Mistral Adds 20+ MCP Connectors and Memories to Le Chat

- Tencent Releases Voyager AI for Creating 3D Environments from Single Photos

Switzerland Launches Open Source LLM Alternative to ChatGPT

https://www.swiss-ai.org/apertus

The News:

- Switzerland launches Apertus, a national large language model developed by EPFL, ETH Zurich, and the Swiss National Supercomputing Centre as an open source alternative to ChatGPT and Meta’s Llama models.

- The model comes in two versions with 8 billion and 70 billion parameters, trained on 15 trillion tokens across more than 1,000 languages.

- Training data includes 40 percent non-English content, incorporating underrepresented languages like Swiss German and Romansh.

- All components are fully open: architecture, model weights, training data, and development recipes are documented and publicly accessible.

- The models comply with Swiss data protection laws and EU AI Act transparency requirements and are available under a permissive open source license for educational, research, and commercial applications.

- Deployment is supported through platforms including Transformers, vLLM, SGLang and MLX.

My take: If you are curious about just what it takes to train a 70B-parameter LLM, this is a gold mine. Unlike commercial models, the architecture, model weights, training data, and development recipes of Apertus (Latin for “open”) are all openly accessible and fully documented, in full compliance with Swiss data protection laws and EU AI Act transparency requirements. Unfortunately, user feedback on the model has so far been really bad, and it’s clear that Apertus is very far from the performance of state-of-the-art models from Anthropic, OpenAI, Google and even Microsoft (MAI). Europe has fallen quite far behind both China and the US when it comes to model development, and even when we try our very best to push out a SOTA model like Apertus it still feels like it’s years behind current models from US providers.

Read more:

- Apertus: a fully open, transparent, multilingual language model : r/LocalLLaMA

- I see many people criticizing Apertus | LinkedIn

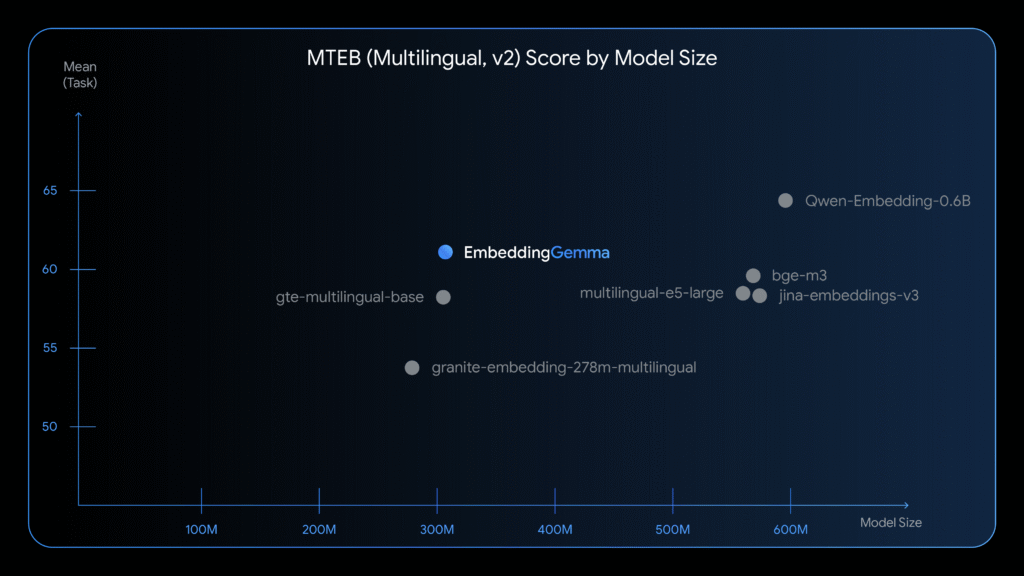

Google DeepMind Releases EmbeddingGemma for On-Device AI Applications

https://developers.googleblog.com/en/introducing-embeddinggemma

The News:

- Google DeepMind released EmbeddingGemma, an open-source text embedding model with 308 million parameters that runs on consumer devices without internet connection. The model processes text searches and understanding in 100+ languages while consuming less than 200MB of RAM when quantized.

- EmbeddingGemma ranks highest on the Massive Text Embedding Benchmark (MTEB) among models under 500 million parameters. The model delivers inference latency under 15 milliseconds for 256 tokens on EdgeTPU hardware.

- The model integrates with developer platforms including Hugging Face, Kaggle, LangChain, LlamaIndex, and runs directly in web browsers through transformers.js. Google provides fine-tuning options that let developers adjust precision between accuracy and speed.

- Built on the Gemma 3 architecture, EmbeddingGemma uses a standard transformer encoder stack with full-sequence (bidirectional) self-attention rather than the causal, left-to-right attention used by decoder models. The model supports offline personal file search, private chatbots, and automatic query classification for mobile applications.

My take: Embedding models turn the data on your device into vectors that can be searched by meaning rather than by exact keywords. Combined with a model such as Gemma 3n, you can build industry-specific, fully offline chatbots that access this indexed data through something like Retrieval-Augmented Generation (RAG). There are so many uses for embedding models on mobile devices, and I really love to see how companies like Google are able to push models to be both smaller and better at the same time. It kind of reminds me of the ’90s demo scene.
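
To make the retrieval step a bit more concrete, here is a minimal sketch of how embedding-based search feeds a chatbot prompt. It does not use EmbeddingGemma: the `embed` function below is a stand-in that counts words, chosen only so the example runs without downloading a model. The function names, the sample documents and the prompt layout are all assumptions made for illustration.

```python
import math
from collections import Counter

def embed(text: str) -> Counter:
    """Stand-in for a real embedding model: a simple word-count vector.
    A real system would call an embedding model such as EmbeddingGemma here."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Return the top_k documents most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(query_vec, embed(d)), reverse=True)
    return ranked[:top_k]

def build_prompt(query: str, documents: list[str]) -> str:
    """Assemble a RAG-style prompt: retrieved context first, then the question."""
    context = "\n".join(retrieve(query, documents))
    return f"Context:\n{context}\n\nQuestion: {query}"

# Hypothetical on-device notes used only for this example.
DOCS = [
    "invoice for the kitchen renovation in march",
    "packing list for the hiking trip",
    "notes from the quarterly budget meeting",
]

if __name__ == "__main__":
    print(build_prompt("what was on the hiking packing list", DOCS))
```

With a real embedding model the structure stays the same; only `embed` changes, and the vectors would normally be computed once and stored in an index instead of being recomputed for every query.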

China Mandates AI Content Labels on Social Media Platforms

The News:

- China’s new AI content labeling law took effect September 1, 2025, requiring all artificial intelligence-generated text, images, audio, video, and virtual content to carry both explicit visible labels and implicit metadata identifiers.

- Major platforms including WeChat (1.4 billion users), Douyin (766 million users), Weibo (500 million monthly active users), and Xiaohongshu implemented all required compliance features last week.

- China’s Cyberspace Administration drafted the regulation alongside three government ministries as part of broader AI oversight efforts targeting deepfakes and online fraud.
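
The difference between the explicit and implicit labels mentioned above can be sketched in a few lines. This is only an illustration of the idea: the label text, the metadata field names and the `LabeledContent` type are hypothetical and are not taken from the regulation or from any platform's implementation.

```python
from dataclasses import dataclass, field

@dataclass
class LabeledContent:
    body: str                                      # what the user sees
    metadata: dict = field(default_factory=dict)   # travels with the file, not shown

def label_ai_content(text: str, generator: str) -> LabeledContent:
    """Attach both label types to a piece of AI-generated text."""
    # Explicit label: visible to anyone reading the content.
    visible = "[AI-generated] " + text
    # Implicit label: machine-readable metadata (field names are hypothetical).
    hidden = {"ai_generated": True, "generator": generator}
    return LabeledContent(body=visible, metadata=hidden)

if __name__ == "__main__":
    post = label_ai_content("Sunset over the harbor.", generator="example-model")
    print(post.body)
    print(post.metadata)
```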

My take: This new law puts China ahead of most countries in the world when it comes to AI content labeling. Here in the EU, similar requirements were proposed under the AI Act, but implementation remains in its early stages. The US lacks federal AI content labeling mandates and relies primarily on voluntary industry standards and platform policies. I believe clear labeling of AI-generated content needs to be a requirement for both users and platforms, and it was almost a surprise to see China taking the lead ahead of the EU here.

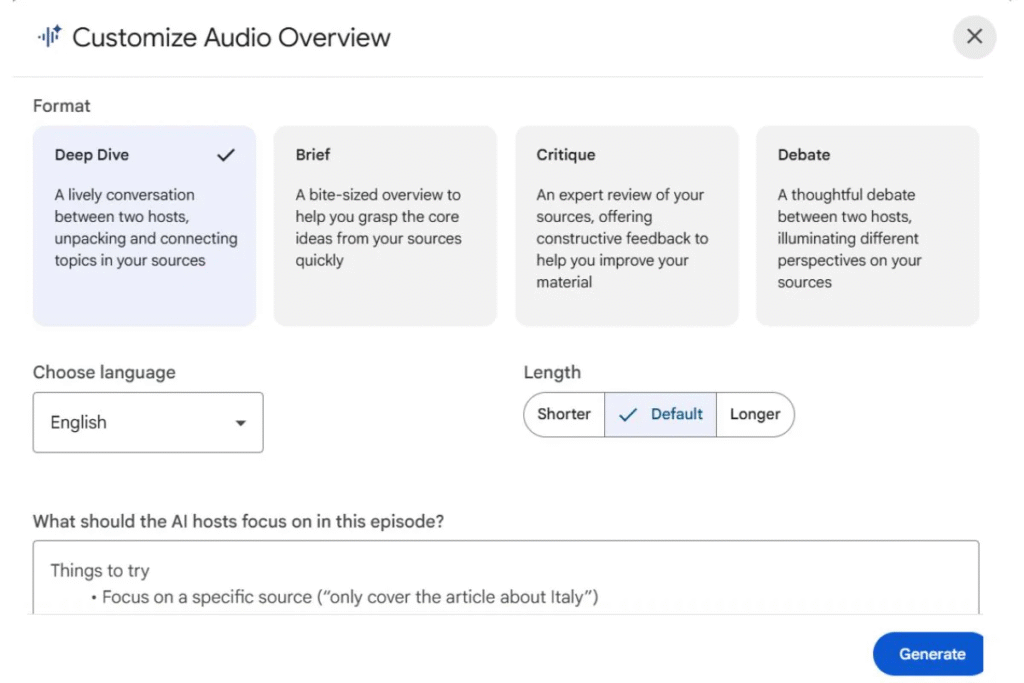

NotebookLM Launches Four Audio Overview Formats

https://twitter.com/NotebookLM/status/1962949985546187120

The News:

- Google’s NotebookLM just added three new Audio Overview formats alongside its existing Deep Dive podcast feature.

- Brief format delivers bite-sized summaries in 1-2 minutes with a single speaker covering core takeaways from uploaded materials.

- Critique format features two AI hosts providing expert-style reviews and constructive feedback on content like essays or design documents, with adjustable length options from four minutes to longer versions.

- Debate format presents two AI hosts taking opposing perspectives on the same source material, creating structured arguments that highlight different viewpoints.

My take: The new debate mode is so fun to listen to: upload any text and listen to the two presenters take different sides and debate it. It just works so well, and if you are up for a panel discussion or debate in the coming week, make sure to send your material to NotebookLM first and listen to them debate it beforehand!

OpenAI Announces 2026 Jobs Platform to Challenge LinkedIn Talent Marketplace

https://openai.com/index/expanding-economic-opportunity-with-ai

The News:

- OpenAI announced plans to launch the OpenAI Jobs Platform by mid-2026, an AI-powered hiring platform that matches employers with AI-skilled candidates.

- The platform includes dedicated sections for local businesses and government agencies seeking AI talent, alongside traditional corporate recruitment.

- OpenAI partnered with Walmart, John Deere, Boston Consulting Group, Accenture, and Indeed to develop AI certification programs through the expanded OpenAI Academy.

- The certification program offers multiple fluency levels from basic AI workplace skills to advanced prompt engineering, with training and testing conducted directly within ChatGPT.

- OpenAI committed to certifying 10 million Americans in “AI fluency” by 2030, with pilot certifications launching late 2025.

- Walmart will provide customized OpenAI certifications to its 2 million U.S. employees starting in 2026 through its Live Better U program.

My take: I think this jobs platform could be a good thing, especially if the upcoming AI certification programs are technical enough to prove actual AI production skills, like fine-tuning, RAG, setting up efficient semantic indexes, and so on. OpenAI is clearly targeting LinkedIn and Microsoft with this, and if you have ever tried finding candidates with the LinkedIn search function you know exactly how bad the current experience is. My main hope with this release is that it pushes Microsoft to do a better job with LinkedIn and maybe also lower their prices.

Anthropic Raises $13B at $183B Valuation

https://www.anthropic.com/news/anthropic-raises-series-f-at-usd183b-post-money-valuation

The News:

- Anthropic completed a $13B Series F funding round led by ICONIQ, valuing the AI company at $183B.

- The company’s run-rate revenue reached $5B by August 2025, growing from $1B at the beginning of 2025.

- Anthropic serves over 300,000 business customers, and the number of large accounts (those representing over $100K in revenue) grew 7x in the past year.

- Claude Code generated over $500M in run-rate revenue with usage growing more than 10x in three months since its May 2025 launch.

- Significant investors include Fidelity, Lightspeed Venture Partners, BlackRock, Goldman Sachs Alternatives, and Qatar Investment Authority.

My take: Just for comparison, in November last year OpenAI was valued at $157 billion, and in March this year OpenAI was valued at $300 billion. OpenAI is currently in talks for a secondary share sale allowing employees and former employees to sell over $10 billion worth of stock at a $500 billion valuation. With more and more companies moving from AI augmentation to AI agentic solutions (as mentioned in the introduction), it’s easy to see why these valuations will continue to skyrocket. I wouldn’t be surprised at all if Anthropic is also valued way above $500 billion as early as next year.

Lovable Launches Voice Mode for App Building

https://twitter.com/lovable_dev/status/1963255845900484632

The News:

- Lovable just rolled out Voice Mode, a new functionality that converts voice commands into code and builds apps through speech-to-text technology powered by ElevenLabs’ model “Scribe”.

- The feature transcribes speech in 99 languages with character-level timestamps, speaker diarization, and audio-event tagging.

- Users tap a microphone icon, speak their ideas in natural language, and watch websites and apps generate automatically without touching a keyboard.

- The company claims ElevenLabs’ Scribe model offers precision that instantly materializes spoken descriptions into working websites.

My take: Great to see voice dictation built straight into the product! I use Superwhisper for macOS and it’s actually quite a relief to sometimes talk to the LLM instead of typing. It goes faster and you typically don’t consider each word in the sentence before speaking, but it definitely has its learning curve before you get comfortable sitting and talking to an AI.

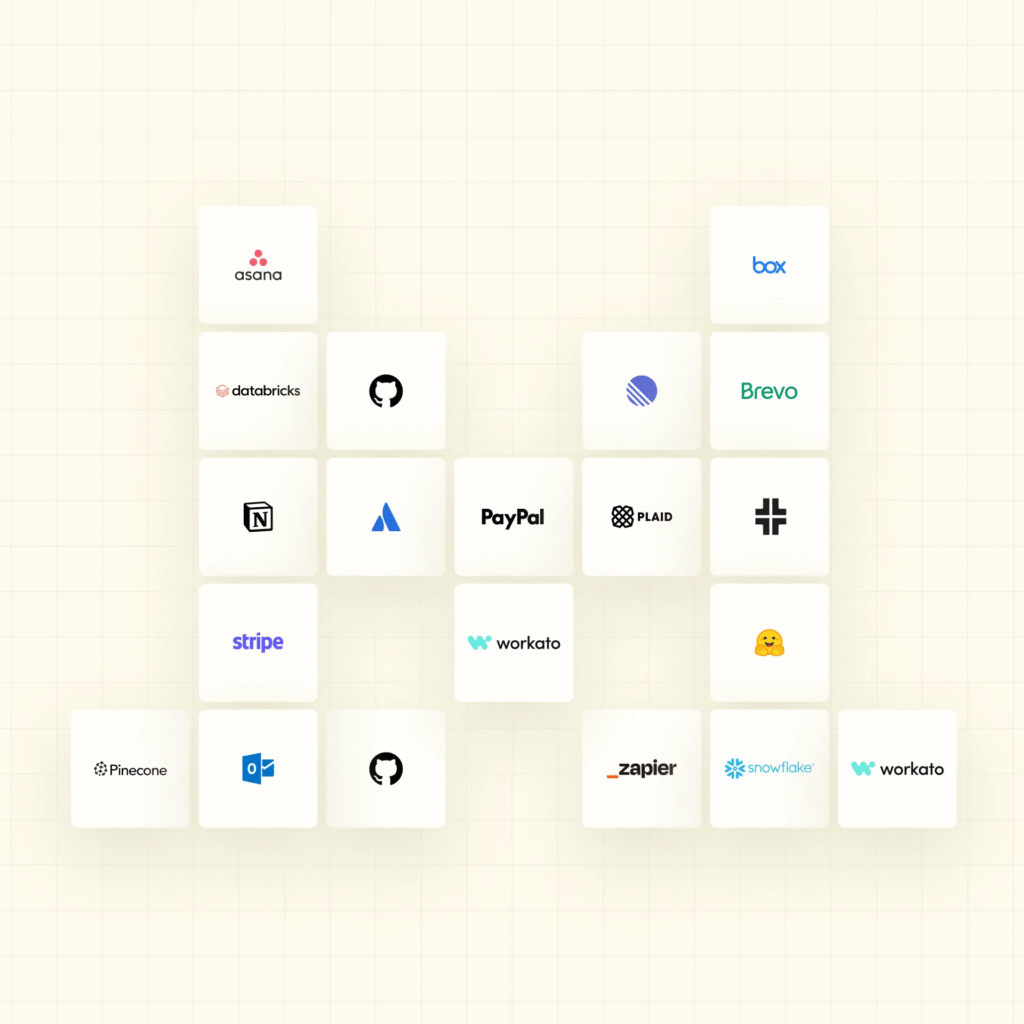

Mistral Adds 20+ MCP Connectors and Memories to Le Chat

https://mistral.ai/news/le-chat-mcp-connectors-memories

The News:

- Mistral released 20+ MCP-powered connectors for Le Chat, integrating enterprise platforms like Databricks, Snowflake, GitHub, Atlassian, Asana, Outlook, Box, Stripe, and Zapier.

- Users can search datasets in Databricks and Snowflake, manage GitHub repositories, create Asana project boards, and access payment data from Stripe and PayPal through direct chat commands.

- Custom MCP connector support allows organizations to add proprietary integrations beyond the standard directory.

- New Memories feature stores user preferences and context across conversations while filtering out sensitive information.

- Memories includes import functionality from ChatGPT and gives users control over what information gets stored, edited, or deleted.

- All features are available on the free plan and support on-premises, cloud, or mobile deployment.

My take: Chatbots with MCP connectors will probably be standard in the workplace in 2026, where chatbots can connect to every system and control most of them. Having 20+ connectors built in and available for use in Le Chat is great, but adding them to services like Claude and ChatGPT is quite trivial, so the actual competitive advantage here is quite low. The main new feature here is Memories, and if you have tried both OpenAI’s version and Anthropic’s version you know how different they are. It would be interesting to hear your experience with Memories if you are a Le Chat user.

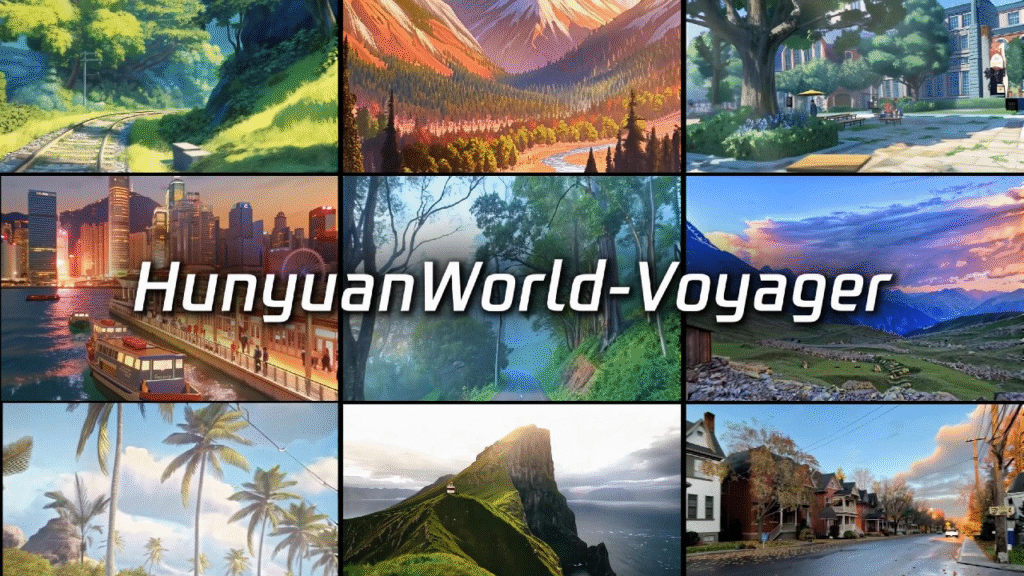

Tencent Releases Voyager AI for Creating 3D Environments from Single Photos

https://3d-models.hunyuan.tencent.com/world

The News:

- Tencent released HunyuanWorld-Voyager, an AI model that generates 3D environments from single images with custom camera navigation paths.

- The system creates 49 frames per generation (approximately 2 seconds) and allows users to chain multiple clips for longer sequences lasting several minutes.

- The model uses a “world cache” system that stores 3D points from previous frames to maintain spatial consistency as the camera moves through generated scenes.

- Each output includes both RGB video and depth information, which can be converted directly into 3D point clouds.

- Voyager achieved a score of 77.62 on Stanford’s WorldScore benchmark, outperforming OpenAI’s Sora (62.15) in spatial consistency and camera control.
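
For readers wondering how depth output becomes a 3D point cloud, the usual approach is to back-project each pixel through a pinhole camera model. The sketch below shows that general technique, not Voyager's own code: it assumes the depth map is a grid of distances along the camera axis and that the camera intrinsics (`fx`, `fy`, `cx`, `cy`) are known, neither of which is specified in the announcement.

```python
def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth map into 3D points using a pinhole camera model.

    depth:  list of rows, each a list of depth values (0 or less = no data)
    fx, fy: focal lengths in pixels (assumed known)
    cx, cy: principal point in pixels (assumed known)
    """
    points = []
    for v, row in enumerate(depth):        # v = pixel row
        for u, z in enumerate(row):        # u = pixel column
            if z <= 0:
                continue                   # skip pixels without valid depth
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points

if __name__ == "__main__":
    # Tiny 2x2 depth map with one missing value, purely for illustration.
    tiny_depth = [[2.0, 0.0],
                  [2.0, 4.0]]
    for point in depth_to_points(tiny_depth, fx=1.0, fy=1.0, cx=0.0, cy=0.0):
        print(point)
```

Pairing each point with the color of the same pixel in the RGB frame gives a colored point cloud, which is what makes this kind of output useful for 3D reconstruction.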

My take: There are two things that make this platform interesting. The first is that it creates worlds from a single image with custom camera navigation paths, and there are tons of use cases for this. The second is that it produces actual depth data alongside the video, making it suitable for 3D reconstruction. However, if you live in the EU, UK or South Korea you cannot use it at all due to licensing. It also requires a high-end workstation GPU to run. But for companies outside the EU with their own workstation GPU this could be an interesting choice.