As many of you saw in my LinkedIn post last week – I have quit my job at RISE (Research Institutes of Sweden) and I am starting a new AI company. The first question most of you asked me was “What will happen with Tech Insights”? Tech Insights will continue just as before, for free, and I will even expand upon it to make the concept even better!

If you live near Gothenburg in Sweden, starting in April we will arrange free, bi-monthly AI Business Meetups at Kvarnberget, right in the center of the city. We will serve breakfast and present a 30-minute seminar on the latest AI innovations and how you can apply them in your company, followed by an open discussion. We will have experts in maths, ML, generative AI and software development available on-site, and we will hopefully be able to answer any questions you might have. Whenever possible we will do hands-on demonstrations. The first invites will go out in March and the number of seats will be limited. Did I mention it will be free? 🙂

We will also have a major launch party of the company with some outstanding external speakers, food, live music and drinks on March 13, but we will have a hard limit of 120 participants. Those of you who subscribe to my newsletter will be able to get your free tickets before everyone else!

And what an AI week it was! Apart from new models by OpenAI, free access to o1 from Microsoft, and a new AI agent by Google that can automatically call and book car service for you, the main news this past week was Google’s announcement that it cut its code migration time in half thanks to AI! This is where I believe AI will shine the most in the coming years. The more systems you have, and the older they get, the more difficult it is to keep them updated with new features and move them to modern infrastructure. Google is leading the way here, converting IDs from 32-bit to 64-bit across a 500-million-line codebase and using AI to reduce the completion time by 50% compared to manual methods!

Thank you for being a Tech Insights subscriber!

THIS WEEK’S NEWS:

- Google Cuts Code Migration Time in Half with AI

- OpenAI Launches o3-mini, Its Cheapest But Most Dangerous AI Model to Date

- Google’s AI Can Now Make Phone Calls to Book Your Car Service

- Microsoft Makes OpenAI o1 Free for Everyone in Copilot

- Mistral AI Releases 24B Open-Source Language Model That Rivals GPT-4o mini

- Allen AI Releases Tülu 3 405B, Claims to Outperform DeepSeek and GPT-4o

- OpenAI Launches ChatGPT Gov for U.S. Government Agencies

- Alibaba Releases Qwen Vision-Language Model with PC Control Capabilities

- DeepSeek Launches Janus-Pro Image Generator And NO It Does Not Challenge OpenAI

- YuE: Open-Source AI Music Generation Model

Google Cuts Code Migration Time in Half with AI

https://www.theregister.com/2025/01/16/google_ai_code_migration

The News:

- Google successfully implemented AI-powered tools to accelerate code migration processes, reducing completion time by 50% compared to manual methods.

- In Google Ads’ 500-million-line codebase, the new AI system helped convert 32-bit IDs to 64-bit IDs across tens of thousands of code locations. The task would have required hundreds of engineering years if done manually.

- The AI system achieved an 80% success rate in generating code modifications, with the remaining 20% requiring human intervention or editing.

- For the JUnit testing library migration, the AI completed the transition of 5,359 files and modified 149,000 lines of code in just three months, with 87% of AI-generated code being committed without changes.

- The system now generates more code than humans type, marking a significant shift in Google’s internal software development practices.
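To make the 32-bit to 64-bit ID change concrete, here is a minimal sketch of why such a migration becomes necessary. It is illustrative only and is not Google's code: the function names `pack_id_32`, `pack_id_64` and `fits_in_32_bits` are hypothetical, and a real migration also has to update every type declaration, database column, and test that touches the ID.

```python
import struct

INT32_MAX = 2**31 - 1  # largest value a signed 32-bit ID can hold


def pack_id_32(value: int) -> bytes:
    """Serialize an ID as a signed 32-bit integer (the legacy layout)."""
    return struct.pack("<i", value)


def pack_id_64(value: int) -> bytes:
    """Serialize an ID as a signed 64-bit integer (the migrated layout)."""
    return struct.pack("<q", value)


def fits_in_32_bits(value: int) -> bool:
    """Return True if the ID can still be stored in the legacy layout."""
    try:
        pack_id_32(value)
        return True
    except struct.error:
        # struct refuses values outside the signed 32-bit range.
        return False


next_id = INT32_MAX + 1  # the first ID the legacy layout cannot represent
print(fits_in_32_bits(INT32_MAX), fits_in_32_bits(next_id))
print(len(pack_id_64(next_id)), "bytes in the 64-bit layout")
```

Once the ID counter passes `INT32_MAX`, every location that still assumes the 32-bit layout fails, which is why the change has to be made across the whole codebase rather than in one place.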

My take: Migrating old code bases will be one of the most significant ways large corporations use generative AI for programming. For large organizations struggling with technical debt and legacy system updates, this approach could make previously infeasible migration projects possible. If you have ever worked in a big company with 30-year-old legacy systems, you know how difficult it is to modify them or add features. Introducing generative AI to old code bases offers both a way to keep them updated and a way to transition them to modern platforms.

OpenAI Launches o3-mini, Its Cheapest But Most Dangerous AI Model to Date

https://openai.com/index/openai-o3-mini

The News:

- OpenAI released o3-mini, their newest reasoning model that offers improved performance at lower costs, making it available to both free and paid ChatGPT users for the first time.

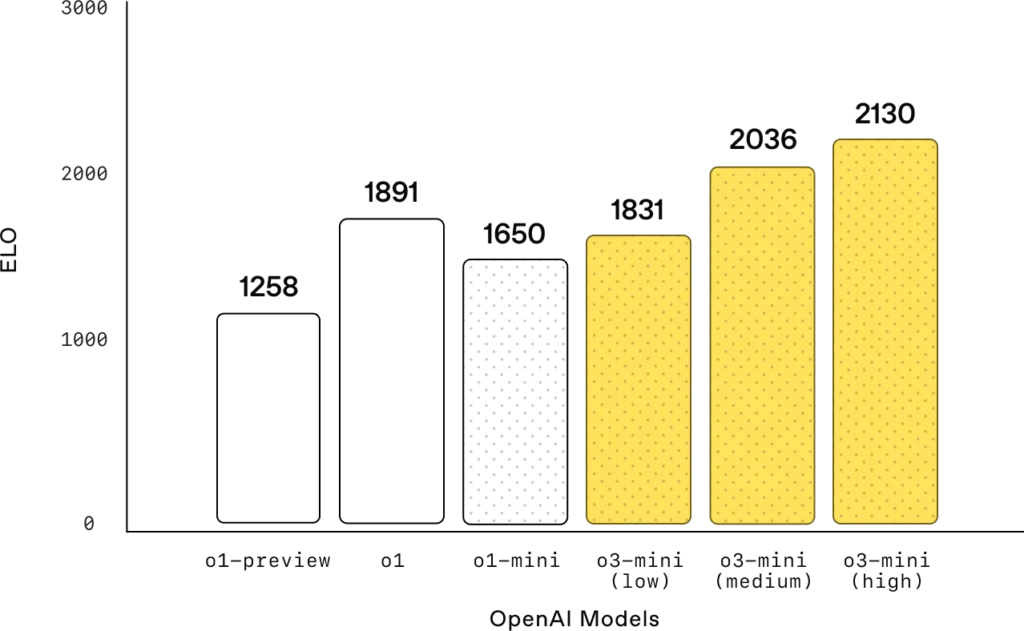

- o3-mini features three reasoning levels: low, medium, and high (see chart above). Free users can only access the “medium” version. Plus users can send up to 150 messages per day to the “high” version and Pro users get unlimited access.

- Pricing is significantly reduced compared to o1 – input cost is $1.10 per million tokens and output is $4.40 per million tokens, just marginally more than DeepSeek-R1 (whose pricing is only temporary as an “introduction price”).

- The input context window of o3-mini is 200,000 tokens, considerably larger than GPT-4o and o1-mini (which both have 128k tokens).

- The knowledge cutoff date is October 2023.
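To put the per-token prices above into perspective, here is a small back-of-the-envelope calculator. It is a sketch under stated assumptions: the two rates are the ones quoted above, the function name `estimate_cost_usd` is hypothetical, and the example token counts are made up. As far as I understand, hidden reasoning tokens are billed as output tokens, so they would need to be included in `output_tokens`.

```python
# Prices quoted above, in US dollars per million tokens.
INPUT_USD_PER_MILLION = 1.10
OUTPUT_USD_PER_MILLION = 4.40


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the API cost of one request from its token counts."""
    input_cost = input_tokens / 1_000_000 * INPUT_USD_PER_MILLION
    output_cost = output_tokens / 1_000_000 * OUTPUT_USD_PER_MILLION
    return input_cost + output_cost


# Hypothetical request: 10,000 tokens in, 2,000 tokens out.
print(f"${estimate_cost_usd(10_000, 2_000):.4f}")  # prints $0.0198
```

In other words, a fairly large request costs about two cents at these rates, and a thousand such requests cost roughly $20.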

What you might have missed (1): The OpenAI o3-mini System Card states: “Due to improved coding and research engineering performance, OpenAI o3-mini is the first model to reach Medium risk on Model Autonomy.” This is significant because OpenAI is already at the limit of what is possible according to their rules. Only models with a post-mitigation score of Medium or below can be deployed, and only models with a post-mitigation score of High or below can be developed further.

What you might have missed (2): Many of us spent many hours testing o3-mini-high over the weekend, and it’s really good at writing complex code with high quality. Here is just one example posted February 1st by Linas Beliūnas.

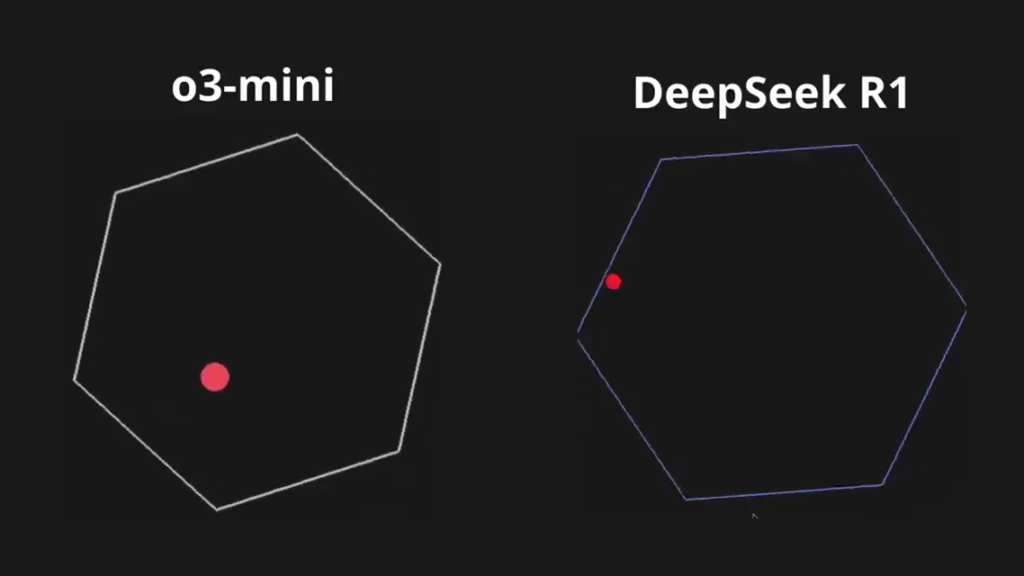

“This is wild! OpenAI’s latest AI model o3-mini crushed DeepSeek R1 😳 Here’s the prompt that was used: ‘write a Python program that shows a ball bouncing inside a spinning hexagon. The ball should be affected by gravity and friction, and it must bounce off the rotating walls realistically’. The fact that you can accurately do that with a single prompt is absolutely mindblowing.” From LinkedIn.

My take: I have tried o3-mini-high over the weekend for programming, refactoring and documentation, and it performs exceptionally well for all tasks. It excels at structured outputs, making it very well-suited for agentic workflows. Overall it has been a tumultuous week – on Monday everyone flocked to DeepSeek-R1, on Thursday Microsoft released OpenAI o1 for free within Copilot Chat, and on Friday OpenAI launched o3-mini, which beats them all and is almost as cheap as DeepSeek when used through the API. This week is also a good motivator for me to keep doing my weekly newsletter. The past week’s launches prove once again how important it is to sit tight, evaluate, analyze, and plan for what’s coming instead of acting in panic over the ever-increasing flood of daily AI news.

Google’s AI Can Now Make Phone Calls to Book Your Car Service

https://blog.google/products/search/search-labs-ai-announcement-

The News:

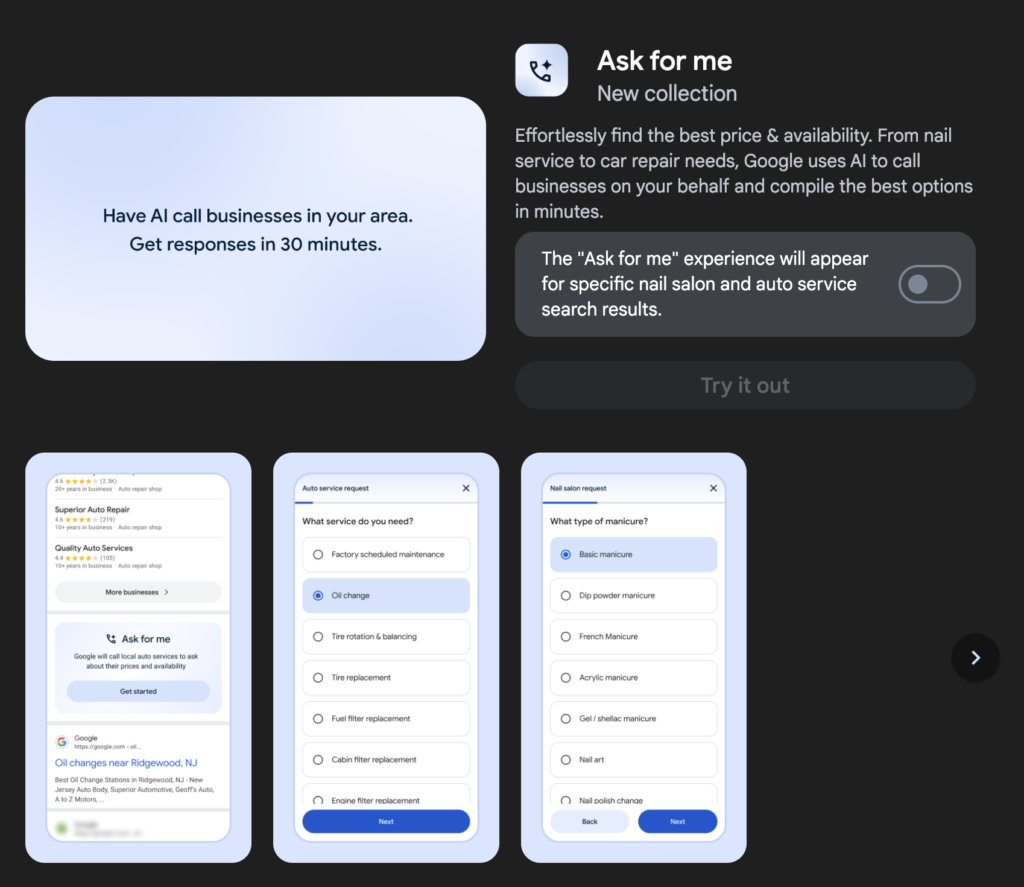

- Google just launched two new AI-powered calling features in Search Labs: ‘Ask for Me’ makes calls to local businesses for service inquiries, while ‘Talk to a Live Representative’ handles customer service calls.

- ‘Ask for Me’ currently works with nail salons and auto repair shops in the US. After users specify their needs (like car type and service required), the AI makes calls and delivers a summary via email or SMS within 30 minutes. The system uses Google’s Duplex technology, which clearly identifies itself as an automated system when calling businesses. Businesses can opt out through their Google Business Profile settings or during calls.

- ‘Talk to a Live Representative’ calls customer service numbers, waits on hold, and then calls the user back once a live representative is available for supported businesses like airlines, telecommunications companies, retail stores, and insurance providers.

- Google also implemented call quotas to prevent overwhelming businesses, and the collected information may be used to assist with similar future inquiries from other users.

- Both features are currently limited to US-based English speakers who opt in through Search Labs, with ‘Ask for Me’ requiring manual activation.

My take: This is it! No more waiting in a queue for a company representative to answer the phone! While some phone systems feature “call me back when an operator is available”, not all of them do. My biggest concern, however, is that I see no easy way for Apple to implement this on the iPhone. It’s for things like this that Android shines the most. I would love to have these features on my phone. Both of these agents would truly make a positive difference in my personal life.

Read more:

- Google’s ‘Ask for Me’ uses AI to call local businesses for you | The Verge

- Google’s ‘Ask for me’ AI calls businesses so that you don’t have to

Microsoft Makes OpenAI o1 Free for Everyone in Copilot

The News:

- Microsoft has made its Think Deeper feature, powered by OpenAI’s o1 reasoning model, available for free to all Copilot users, removing the previous “Copilot Pro” subscription requirement. This is the full o1 model, not a limited version, giving users access to advanced AI reasoning capabilities without any cost.

- The feature takes approximately 30 seconds to process queries, as it analyzes questions from multiple angles before providing responses.

- Users can access Think Deeper through the Copilot mobile app by tapping the three-dot menu and selecting “Think Deeper” from the options.

My take: Did DeepSeek have an impact on the decision to release o1 for free to all Copilot users? Definitely. If you need a thinking model but do not want to spend any money, Copilot Think Deeper is a much better alternative than sending all your data to the CCP using deepseek.com. However, just one day later, on Friday, OpenAI launched their latest o3-mini for free, which beats both “Think Deeper” and DeepSeek-R1 on many points, and it even has a context window of 200k tokens. If you want the latest and greatest you still have to pay (o3-mini-high), but if you want performance comparable to DeepSeek-R1, Copilot Think Deeper is an excellent alternative.

Read more:

- Microsoft Copilot’s ‘Think Deeper’ Is Now Free

- Answer to DeepSeek hype. Microsoft made OpenAI’s o1 model free for all Copilot users | dev.ua

- Microsoft brings OpenAI’s o1 model to Copilot for free-tier users: Details | Tech News – Business Standard

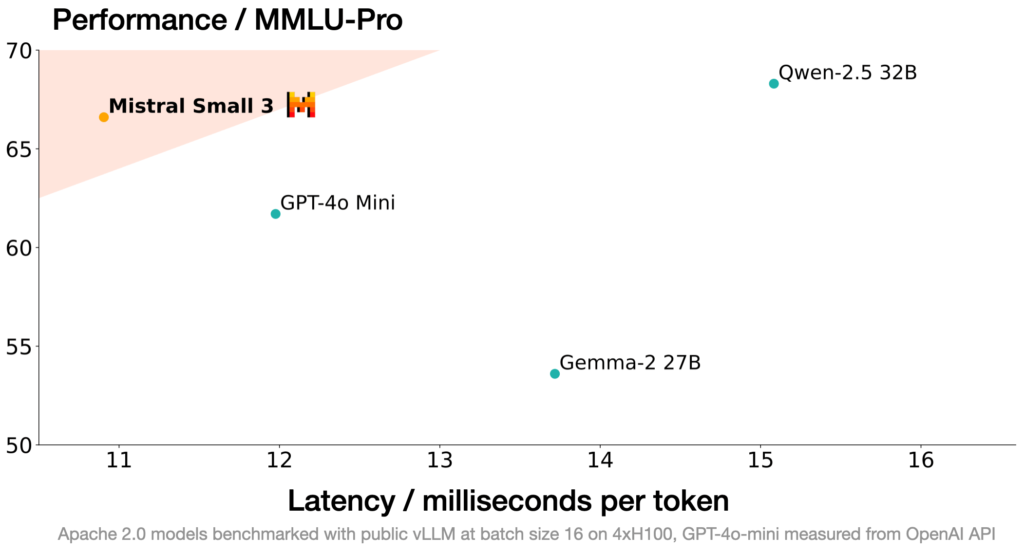

Mistral AI Releases 24B Open-Source Language Model That Rivals GPT-4o mini

https://mistral.ai/news/mistral-small-3

The News:

- Mistral AI just launched Mistral Small 3, a 24B-parameter language model optimized for low latency and released under the Apache 2.0 license. The model matches the performance of models 3x its size while being significantly faster.

- The model achieves over 81% accuracy on the MMLU benchmark at a throughput of 150 tokens/s, making it the most efficient model in its category.

- Mistral Small 3 can run locally on consumer hardware – when quantized, it works on a single RTX 4090 GPU or a MacBook with 32GB RAM.

- Real-world applications include financial services (fraud detection), healthcare (patient triaging), robotics, manufacturing (on-device control), as well as customer service and sentiment analysis.

- The model excels at fast-response conversational assistance, low-latency function calling, and can be fine-tuned for specific domain expertise.
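Why does the model need to be quantized to run on a single consumer GPU? A rough, weights-only estimate shows it. This is a sketch under simplifying assumptions: it ignores the KV cache, activations, and runtime overhead, counts 1 GB as 10^9 bytes, and the function name `weight_memory_gb` is hypothetical.

```python
PARAMETERS = 24e9  # 24B parameters, as stated above


def weight_memory_gb(parameters: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return parameters * bits_per_weight / 8 / 1e9


for bits in (16, 8, 4):
    print(f"{bits}-bit weights: ~{weight_memory_gb(PARAMETERS, bits):.0f} GB")
```

At 16 bits per weight the weights alone take about 48 GB, twice the 24 GB of memory on an RTX 4090. At 4 bits they shrink to about 12 GB, which leaves room for everything else on the same card or on a 32GB MacBook.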

My take: I really appreciate the way Mistral keeps releasing smart and specialized models with flexible licensing. I think the main use of Mistral Small 3 will be in high-performance agentic workflows, with fine-tuned variants of it that are specialized in specific domains creating highly accurate subject matter experts.

Allen AI Releases Tülu 3 405B, Claims to Outperform DeepSeek and GPT-4o

https://allenai.org/blog/tulu-3-405B

The News:

- The Allen Institute for AI (Ai2) just released Tülu 3 405B, a fully open-source large language model built on Llama 3.1, featuring 405 billion parameters. The model claims to match or exceed the performance of DeepSeek V3 and GPT-4o in several key benchmarks.

- The model uses a novel “Reinforcement Learning with Verifiable Rewards” (RLVR) approach, which only rewards the system for producing verifiably correct answers.

- The model is fully open-source, with both the model and training data publicly available. Users can test it through the Ai2 Playground, with code available on GitHub and models on Hugging Face.
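The idea behind a verifiable reward can be sketched in a few lines. This is a minimal illustration, not Ai2's implementation: the exact-match check and the names `normalize` and `verifiable_reward` are my assumptions, and a real setup would use task-specific verifiers (for example a math answer checker).

```python
def normalize(answer: str) -> str:
    """Strip whitespace and case so trivially different answers compare equal."""
    return answer.strip().lower()


def verifiable_reward(model_answer: str, reference_answer: str) -> float:
    """Return 1.0 only when the answer can be verified as correct, else 0.0."""
    if normalize(model_answer) == normalize(reference_answer):
        return 1.0
    return 0.0


# Hypothetical example: the reference answer to "What is 6 * 7?" is "42".
print(verifiable_reward(" 42 ", "42"))  # 1.0
print(verifiable_reward("41", "42"))    # 0.0
```

The point of the design is that the reward comes from a check that is either passed or failed, instead of from a learned reward model that scores how good an answer looks.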

My take: Another week, another model that claims to be better in some benchmarks than models that have been out for quite some time. This release makes everything public: all training data, code, and weights have been published. But since it’s based on Llama 3.1, we still don’t have the pre-training data and process used to train Llama, as those are still proprietary to Meta. It’s a great release by Allen AI, but it will probably be left far behind shortly when all the next-gen models are released.

OpenAI Launches ChatGPT Gov for U.S. Government Agencies

https://openai.com/global-affairs/introducing-chatgpt-gov

The News:

- OpenAI launched ChatGPT Gov, a specialized version of ChatGPT designed specifically for U.S. government agencies. The platform is promoted as more secure than ChatGPT Enterprise and allows governments to input non-public sensitive information while operating within their own secure hosting frameworks.

- The service allows government agencies to use OpenAI’s language models within their own secure hosting environment, with options to deploy either in Microsoft Azure’s commercial cloud platform or in a more secure Azure Government cloud tenant. This gives agencies better control over security and privacy, making it easier to meet compliance requirements.

- The platform provides access to GPT-4o and allows government employees to build and share custom GPTs, upload text and image files, and save and share conversations.

- Early results are promising – in Pennsylvania’s pilot program, ChatGPT Enterprise helped reduce time spent on routine tasks by approximately 105 minutes per day.

What you might have missed: In addition to ChatGPT Gov, OpenAI recently announced that they have teamed up with U.S. National Laboratories, where 15,000 scientists will get access to o1 to support research within cybersecurity, power grid protection, disease treatment, and physics. According to OpenAI this is the “beginning of a new era, where AI will advance science, strengthen national security, and support U.S. government initiatives”.

My take: ChatGPT Gov is basically GPT-4o packaged and ready-to-install in a government cloud environment. No information leaves the government cloud, and governments can start exploring all the possibilities with generative AI without having to be concerned about privacy issues. If your organization has not yet rolled out generative AI to all employees, you are missing out. As an example, in Sweden RISE has helped over 17 municipalities roll out a chat interface to GPT-4o hosted on secure Swedish Microsoft servers. There are tremendous performance benefits to be made here.

Alibaba Releases Qwen Vision-Language Model with PC Control Capabilities

https://qwenlm.github.io/blog/qwen2.5-vl

The News:

- Alibaba Cloud launched Qwen2.5-VL, a multimodal AI model in three sizes (3B, 7B, and 72B parameters), offering both base and instruction-tuned versions. The model is accessible through the Qwen Chat platform and downloadable from Hugging Face.

- Qwen2.5-VL can understand hour-long videos and pinpoint specific events to the second, making it useful for video content analysis and event detection.

- Qwen2.5-VL has advanced document parsing capabilities, including the ability to analyze texts, charts, diagrams, graphics, and layouts within images, as well as generate structured outputs from scans of invoices, forms, and tables.

- Qwen2.5-VL can also function as a visual agent to control computers and mobile devices, performing tasks like checking weather and booking flight tickets.

- Qwen2.5-VL outperforms GPT-4o, Claude 3.5 Sonnet and Google’s Gemini 2.0 Flash on several benchmarks including video understanding, math and document analysis.

My take: Chinese AI companies are really catching up to OpenAI and Anthropic, but remember that all current models were built on hardware that is over three years old. Take all the recent innovations, such as using reinforcement learning instead of traditional supervised fine-tuning, and pair them with a compute platform that is over 20 times more powerful than previous generations, and you can be pretty sure that the next-generation models coming shortly will leave everything we have today far behind in the rear-view mirror.

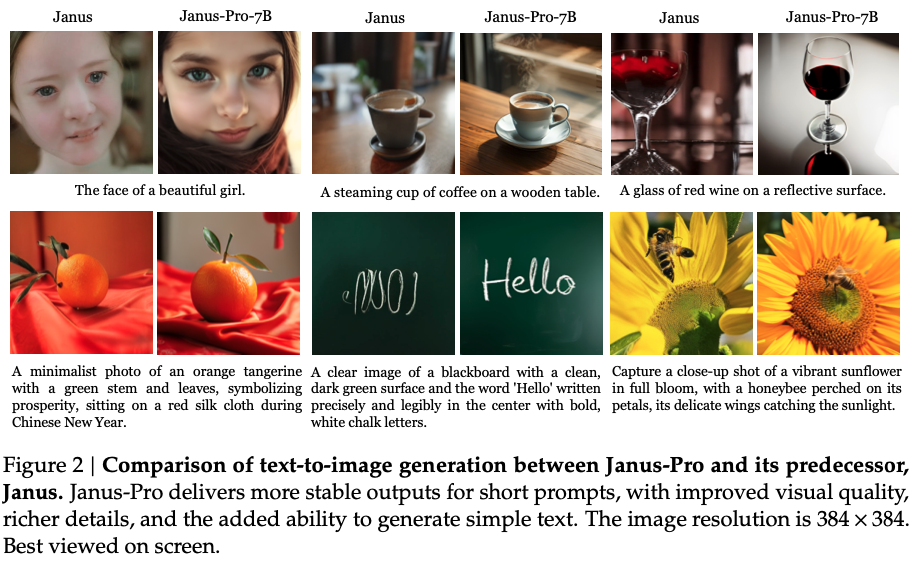

DeepSeek Launches Janus-Pro Image Generator And NO It Does Not Challenge OpenAI

https://huggingface.co/deepseek-ai/Janus-Pro-7B

The News:

- Chinese AI startup DeepSeek released Janus-Pro, an open-source AI image generation model that claims to outperform OpenAI’s DALL-E 3 and Stable Diffusion across multiple benchmarks.

- Janus-Pro is available in two versions: Janus-Pro-1B and Janus-Pro-7B. It features multimodal capabilities, allowing both image generation and vision processing, though currently limited to 384×384 pixel image inputs and 768×768 pixel image outputs on the Hugging Face demo.

My take: If there is anything I have learnt from image-generating models it is to never trust the benchmarks. Ask it for something generic like a flower or a glass of red wine and most models perform OK. Ask it for “An image of a middle-aged man participating in an ice cream eating contest in a French café” and you will get images like the ones below. This post is my “weekly reminder” of why I write this newsletter at all: it’s your filter to keep shitty releases like this away from your focus.

Read more:

- DeepSeek Launches Janus-Pro-7B Model, Outperforms OpenAI DALL-E 3 – Business Insider

- DeepSeek says its newest AI model, Janus-Pro, can outperform OpenAI’s DALL-E | Mashable

YuE: Open-Source AI Music Generation Model

The News:

- A new open-source AI music tool called YuE was released last week, offering a free alternative to services like Suno and Udio. YuE can create songs up to five minutes long in multiple languages and in different musical styles.

- Unlike other AI music models that only generate short instrumental clips, YuE can produce entire songs lasting up to five minutes, including full vocal tracks that follow lyrics accurately while maintaining a cohesive musical structure.

- Key features, according to their web page, include:

  - Support for multiple languages including English, Chinese, Japanese, and Korean

  - Various music styles from pop to classical

  - Realistic vocal performances with proper timing and pitch

  - Automatic generation of matching instrumental accompaniment

  - Advanced AI model that understands lyrical context

- System requirements: GPUs with at least 80GB video memory for full song generation. Users can rent an 80GB A100 on cloud platforms for a few dollars per hour.

My take: I don’t see this as a real threat to services like Suno, who have switched their focus to music producers and artists over the past year. But as a prototyping tool it’s fun and simple to use for everyone. If you use it a lot, however, both Udio and Suno are cheaper with their fixed monthly pricing.
