Last month Shopify CEO Tobi Lütke sent out an internal memo saying that before employees ask for more headcount or resources, teams must first show why they “cannot get what they want done using AI”. And last week it was Duolingo’s turn, with their CEO Luis von Ahn sending out an internal memo saying “headcount will only be given if a team cannot automate more of their work”. If you were told at your job that you could not grow your team or department until you had proven that you could not automate any more of its work with AI, where would you start? Should you buy Microsoft 365 Copilot licenses and ask your employees to build chatbots, should you ask your IT department to support you with Copilot Studio, or should you do something else? And where is this huge productivity increase everyone is talking about?

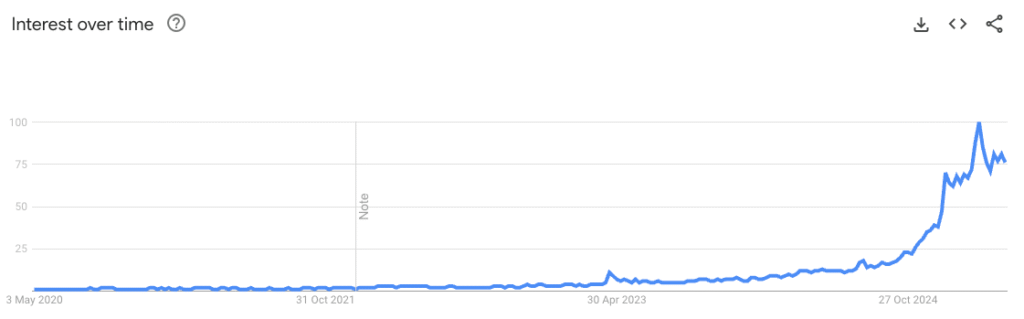

Over the past month I’ve had meetings with many large organizations that want to become AI first but don’t really know where to begin. To become AI first you need to use autonomous AI agents, and while some of us have been working with AI agents since 2023, when CrewAI first launched, most people had not even heard of AI agents until 2025. Just see the graph below for Google Search trends for “AI Agents”.

To become AI first, your company must first be fully committed to it. There are enormous gains to be made, but this is not a decision that can be made in a single department. Everyone needs to be on board. You also need people who are experienced with things like agentic frameworks, MCP, A2A, vector databases, fine-tuning, local and edge models, and RAG, who have strong ML skills, and who know how to turn repetitive work into automated, autonomous processes. If those people also have strong skills in maths or statistics it’s even better, because it really makes a difference when it comes to getting the most out of these models. Sure, you can give it a go without the proper expertise, but that is probably what happened here: The 6 biggest chatbot fails and tips on how to avoid them. This, in a nutshell, is why we started TokenTek: to help companies transform into an AI first culture and make the right decisions from the start. Please feel free to reach out to me on LinkedIn or by replying to this email if you have questions or need guidance. This is what we do every day.

Thank you for being a Tech Insights subscriber!

THIS WEEK’S NEWS:

- Duolingo Goes “AI-First”, Shifts Key Operations to Artificial Intelligence

- ChatGPT Adds Shopping Features to Challenge Google Search

- DeepWiki: AI-Powered Documentation Generator for GitHub Repositories

- Anthropic Launches Claude Integrations and Advanced Research Tools

- Visa and Mastercard Launch Payment Solutions for Autonomous Commerce

- Meta Launches Standalone AI App Powered by Llama 4

- Meta Launches New Llama AI Protection Tools for Enhanced Security

- Google Expands Audio Overviews to Over 50 Languages, Including Swedish

- Alibaba Releases Qwen3 AI Models with Hybrid Reasoning and Open Weights

- Microsoft’s Phi-4 Reasoning Models Challenge Larger AI Systems

- Xiaomi Releases MiMo: Compact 7B Open-Source Model Excels in Math and Code Reasoning

Duolingo Goes “AI-First”, Shifts Key Operations to Artificial Intelligence

https://www.theverge.com/news/657594/duolingo-ai-first-replace-contract-workers

The News:

- Duolingo, the language-learning platform, will adopt an “AI-first” approach to streamline content creation and internal processes, aiming to accelerate course development and reduce repetitive tasks for employees.

- CEO Luis von Ahn announced that Duolingo will gradually discontinue the use of contractors for work that AI can perform, with new hires only approved if a team cannot automate more of its responsibilities.

- AI usage will be evaluated in both hiring and performance reviews, and most teams will undergo changes to fundamentally restructure workflows around automation.

- The company recently launched 148 new AI-generated language courses, more than doubling its offerings in less than a year – a process that previously took years per course.

- Von Ahn acknowledged that some minor quality trade-offs may occur but emphasized the need for urgency, stating, “We’d rather move with urgency and take occasional small hits on quality than move slowly and miss the moment”.

- Duolingo clarified that the shift is not about replacing full-time staff but about removing bottlenecks so employees can focus on creative and complex challenges.

My take: “Developing our first 100 courses took about 12 years, and now, in about a year, we’re able to create and launch nearly 150 new courses. This is a great example of how generative AI can directly benefit our learners,” said Luis von Ahn, CEO and co-founder of Duolingo. If your company is still wondering if this is the right time to go AI first and start automating your repetitive business tasks, you can stop wondering now and start doing. Some of the AI projects I am involved in now show amazing results: reduced turnaround times, higher precision in cost estimates, less time spent on tasks that can be fully automated, and more time spent being productive. There are so many dimensions to the benefits of Generative AI and AI agents that if you are unsure where to start, feel free to reach out to me and I will help you get started in no time!


ChatGPT Adds Shopping Features to Challenge Google Search

The News:

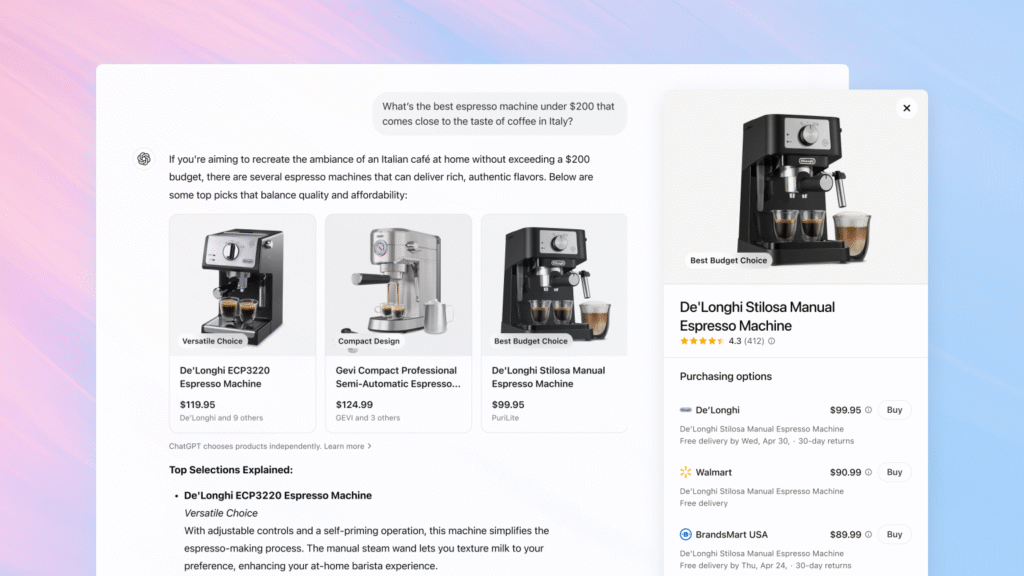

- OpenAI has updated ChatGPT with new shopping capabilities that display product recommendations with images, reviews, and direct purchase links when users ask shopping-related questions.

- The feature is available to all users globally through the GPT-4o model, initially supporting electronics, fashion, beauty, and home goods categories.

- Product recommendations are generated organically without advertisements or paid placements, using structured metadata from third-party sources for pricing, descriptions, and reviews.

- OpenAI reports over 1 billion web searches conducted through ChatGPT in the past week, positioning this update as part of its strategy to compete with Google’s ad-driven search results.

- Future updates will incorporate ChatGPT’s memory feature for Pro and Plus users to provide more personalized recommendations based on previous conversations, though this won’t be available in the EU, UK, Norway, Iceland, and Liechtenstein.

My take: User feedback on this feature has so far been quite mixed. Some report that it works great, while others say they would rather have full control of the product search. If this feature works even close to “OK” then I think we have a winner here. LLMs continue to evolve at an exponential rate, and if current models are able to produce decent results now, expect them to deliver amazing results in 6-9 months. OpenAI knows this, and I believe they would never have released this feature unless they knew exactly where they are heading and what capacity they expect from upcoming models. Google Search quality has really been going down the drain lately, and I am quite sure you have noticed this too. I switched to Kagi a while back, and most people I talk to are not fans of the current Google Search layout, with lots of sponsored ads shown above the actual search results, bloat, and AI-generated text summaries. This makes it easy to convince users to switch to ChatGPT for search and shopping, a tool they already use on a daily basis. I am convinced that the web will change completely within a few years, once most people stop searching for the right web page and scanning each page for the right content. Content will be the main thing that matters, not presentation, and if your content is not easily accessible to AI search bots now, you’d better start planning.

DeepWiki: AI-Powered Documentation Generator for GitHub Repositories

The News:

- Devin AI has launched DeepWiki, a free tool that automatically transforms GitHub repositories into comprehensive, wiki-style documentation, helping developers quickly understand unfamiliar codebases without manual exploration.

- Users can access DeepWiki by simply replacing “github.com” with “deepwiki.com” in any repository URL, instantly generating structured documentation that includes project summaries, technology stacks, and file structures.

- The tool creates interactive diagrams such as architecture overviews, dependency graphs, and flowcharts to visually represent code relationships and workflows.

- DeepWiki features a conversational AI assistant powered by Devin’s DeepResearch agent that answers specific questions about the codebase in natural language, providing context-aware responses.

- Advanced users can utilize the Deep Research mode to identify potential bugs, suggest optimizations, compare repositories with similar projects, and uncover technical debt.
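The URL swap described above is easy to script. Here is a minimal Python sketch, assuming the rule really is just a host replacement with the repository path left unchanged; the function name `to_deepwiki_url` is my own and is not part of any DeepWiki tooling.

```python
from urllib.parse import urlsplit, urlunsplit


def to_deepwiki_url(github_url: str) -> str:
    """Swap the github.com host for deepwiki.com, keeping the path as-is.

    Assumption: DeepWiki mirrors the GitHub path layout (owner/repo).
    """
    parts = urlsplit(github_url)
    if parts.netloc.lower() not in ("github.com", "www.github.com"):
        raise ValueError(f"not a github.com URL: {github_url}")
    return urlunsplit(
        (parts.scheme, "deepwiki.com", parts.path, parts.query, parts.fragment)
    )


# Example with a repository mentioned elsewhere in this issue.
print(to_deepwiki_url("https://github.com/XiaomiMiMo/MiMo"))
# prints https://deepwiki.com/XiaomiMiMo/MiMo
```

Parsing the URL instead of doing a plain string replace avoids rewriting links that merely contain "github.com" somewhere in their path.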

My take: Wow, if you are a software developer, stop what you’re doing and go check out the DeepWiki website! Try clicking around in some of the repositories, or add your own. Here is one of my repositories: Pixel perfect image plugin. Just click around in the left column to see detailed documentation on how it works. For me, DeepWiki is a complete game-changer. I have spent countless hours creating documentation like this, and having it 100% automated is revolutionary! And you can even chat with the documentation using Devin AI. Why would anyone spend any more time documenting their code when tools like this exist? I’m really curious to hear your feedback on this.

Anthropic Launches Claude Integrations and Advanced Research Tools

https://www.anthropic.com/news/integrations

The News:

- Anthropic has introduced Integrations, allowing Claude AI to connect with remote MCP servers across web and desktop applications, expanding beyond the previous local-server limitation on Claude Desktop.

- Users can connect Claude to 10 popular services including Atlassian’s Jira and Confluence, Zapier, Cloudflare, Intercom, Asana, Square, Sentry, PayPal, Linear, and Plaid, with more integrations from companies like Stripe and GitLab coming soon.

- You can also add custom integrations to Claude by simply providing the URL of a remote MCP server. This allows you to connect Claude to any external tool or service (even those not officially supported or verified by Anthropic) by pasting the server’s URL in the Integrations settings. This feature is available for Claude Max, Team, and Enterprise plans.

- Claude’s Research capabilities have been enhanced with an advanced mode that searches across the web, Google Workspace, and connected integrations, delivering comprehensive reports with citations in 5-45 minutes depending on complexity.

- Finally, Claude Code is now included in the Claude Max subscription ($100-200/month)! If you used Claude Code previously you know you could easily spend $100 in a single day with that tool, so having it included at a fixed monthly price is huge.

My take: Anthropic Claude is quickly becoming a true power tool, especially now that it can access virtually any system in the world using custom integrations. While you could already do this in the desktop client, it required you to install custom MCP servers on your computer, which is not easy in most corporate environments. Being able to easily add integrations to Claude on the web is a true game changer, and it suddenly makes Claude so much more interesting as a working tool compared to ChatGPT. And if you are working full-time as a programmer you might want to try the Claude Max subscription, since Claude Code is now included in it.

Visa and Mastercard Launch Payment Solutions for Autonomous Commerce

https://techcrunch.com/2025/04/30/visa-and-mastercard-unveil-ai-powered-shopping/

The News:

- Visa and Mastercard have introduced new payment technologies that allow AI agents to shop and make purchases on behalf of consumers, marking a significant shift toward “agentic commerce”.

- Mastercard launched Agent Pay on April 29, 2025, which integrates with AI platforms to enable secure, personalized payment experiences through Mastercard Agentic Tokens, building on existing tokenization capabilities.

- Visa announced its Intelligent Commerce service on April 30, partnering with companies like Anthropic, Microsoft, OpenAI, and Stripe to create AI shopping experiences where “the consumer sets the limits, and Visa helps manage the rest”.

- Both solutions aim to transform online shopping by allowing AI assistants to curate products, compare options, and complete transactions based on user preferences and instructions.

- Security is a central focus, with Mastercard requiring trusted AI agents to be registered and verified before making secure payments on behalf of users, while maintaining consumer control over what agents can purchase.

My take: AI Agents doing the shopping for you? Are we really there yet? For the use cases initially planned for these solutions, I believe we are. One of the main use cases, I believe, will be watching for sold-out products and buying them when they are available and within your budget. You could also use it to purchase event tickets: just let your agent sit in the queue and buy the best tickets available. The possibilities are vast, and Mastercard and Visa are working with partners like Microsoft and IBM to expand these agent platforms into B2B scenarios, like automating routine business purchases and managing recurring expenses. I will be following this area closely!

Read more:

- Mastercard unveils Agent Pay, pioneering agentic payments technology to power commerce in the age of AI | Mastercard Newsroom

- Visa and Mastercard unveil AI-powered shopping | TechCrunch

Meta Launches Standalone AI App Powered by Llama 4

https://about.fb.com/news/2025/04/introducing-meta-ai-app-new-way-access-ai-assistant/

The News:

- Meta has released its first standalone AI application for iOS and Android, powered by the Llama 4 model, bringing personalized AI assistance beyond its existing integration in Facebook, Instagram, WhatsApp, and Messenger.

- The app features natural voice interaction capabilities, including a full-duplex speech demo that allows for real-time conversation without waiting for the user to finish speaking, currently available in the US, Canada, Australia, and New Zealand.

- Users can generate and edit images through text or voice prompts, with the web version offering enhanced controls for style, mood, and lighting adjustments.

- The app includes a social “Discover feed” where users can view, like, comment on, and share AI-generated content, plus a “Remix” option to try other users’ prompts directly.

- Meta AI connects with users’ Facebook and Instagram accounts to deliver more personalized responses and includes a memory function that recalls previous conversations to provide contextually relevant information.

My take: Should you give the Meta AI app a try? I would recommend that you first test Llama 4 on the web at https://www.meta.ai/ and see how that works for you. In my experience it’s worse than both ChatGPT and Claude by quite a big margin, so I expect the same results from the Meta AI app. So why use it? If you spend most of your days in Facebook or Instagram you might like the fact that Meta AI is “personalized to you”. The whole goal of the Meta AI app is to build a more personal AI, specifically based around your Facebook and Instagram history. If you do not want to get more deeply involved with Meta then you should probably just skip this one and continue using ChatGPT, Claude or Gemini.


Meta Launches New Llama AI Protection Tools for Enhanced Security

https://www.infosecurity-magazine.com/news/meta-new-advances-ai-security

The News:

- Meta released Llama Guard 4, LlamaFirewall, and Llama Prompt Guard 2, aiming to strengthen the security of AI systems and protect against emerging threats such as prompt injection, insecure code, and jailbreak attacks.

- Llama Guard 4 acts as a unified safeguard across text and image understanding, preventing unwanted content like violence, child sexual abuse material, and privacy violations; it is now accessible through the new Llama API, currently in limited preview.

- LlamaFirewall is an open-source framework that orchestrates multiple guard models, including PromptGuard 2, Agent Alignment Checks, and CodeShield, to provide layered, real-time defenses for LLM-powered applications.

- Llama Prompt Guard 2 comes in two versions: 86M, which significantly improves jailbreak and prompt injection detection, and 22M, a smaller model that reduces latency and compute costs by up to 75% with minimal performance trade-offs.

- Benchmarks show Llama Prompt Guard 2 86M achieves 97.5% recall at 1% false positive rate for English jailbreak detection, outperforming previous models and competitors such as ProtectAI and LLM Warden.

- The tools are open source and designed to be integrated into developer pipelines, with multilingual support in the larger model and straightforward APIs for adoption.

What you might have missed: Meta also released the Llama API as a limited free preview, allowing developers to build custom apps using the latest Llama 4 Scout and 4 Maverick models.

My take: Should you add these tools to your toolchain? The license is very permissive, and as long as you clearly include “built with Llama” and have under 700 million monthly active users you can use them for free, even commercially. Feedback from users has so far been very positive, especially around the smaller 22M model, which is very fast and efficient. When it comes to performance, the 86M model reaches 97.5% recall at a 1% false positive rate for English jailbreak detection, and in real-world attack risk reduction it far surpasses competitors like ProtectAI (22.2%) and LLM Warden (12.9%). My recommendation would be to put these tools at the top of your list, then evaluate them based on your needs (latency and performance) and see how they perform.
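If you evaluate a guard model on your own data, “recall at 1% false positive rate” is the number to reproduce: pick the detection threshold that flags at most 1% of benign prompts, then measure how many attacks are still caught. Below is a minimal pure-Python sketch of that metric. The function name, the toy scores, and the labels are all invented for illustration; this is not Meta’s evaluation code.

```python
def recall_at_fpr(scores, labels, max_fpr=0.01):
    """Best recall over all thresholds whose false positive rate <= max_fpr.

    scores: classifier outputs, higher means "more likely an attack".
    labels: 1 for attack prompts, 0 for benign prompts.
    """
    positives = sum(labels)
    negatives = len(labels) - positives
    if positives == 0 or negatives == 0:
        raise ValueError("need at least one attack and one benign example")

    best_recall = 0.0
    for threshold in sorted(set(scores)):
        # A prompt is flagged when its score is at or above the threshold.
        tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
        if fp / negatives <= max_fpr:
            best_recall = max(best_recall, tp / positives)
    return best_recall


# Toy data (hypothetical): four attacks followed by four benign prompts.
scores = [0.95, 0.90, 0.80, 0.40, 0.85, 0.30, 0.20, 0.10]
labels = [1, 1, 1, 1, 0, 0, 0, 0]

print(recall_at_fpr(scores, labels, max_fpr=0.0))   # 0.5
print(recall_at_fpr(scores, labels, max_fpr=0.25))  # 1.0
```

With only four benign examples the allowed false positive rate has to be coarse. On a real evaluation set you would want thousands of benign prompts before a 1% budget becomes meaningful.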

Google Expands Audio Overviews to Over 50 Languages, Including Swedish

https://blog.google/technology/google-labs/notebooklm-audio-overviews-50-languages

The News:

- Google’s Audio Overviews feature in NotebookLM now supports over 50 languages, enabling users to generate podcast-style audio summaries from uploaded documents, web links, or pasted text.

- Users can select their preferred output language (including Swedish, Spanish, French, Hindi, Turkish, and more) directly in NotebookLM’s settings. The AI hosts then present the summary conversation in the chosen language.

- The feature maintains a conversational, podcast-like style with two AI-generated voices, and allows users to guide the discussion by specifying focus areas or asking follow-up questions.

- Audio Overviews are powered by Google’s Gemini 2.5 Pro model, which helps retain natural-sounding dialogue and tone across languages. Google notes that non-English support is currently in beta, so users should verify the accuracy of the generated audio.

- The update enables practical use cases such as teachers sharing multilingual study guides or students generating summaries from mixed-language resources. The feature is available for free in NotebookLM, with premium options for higher usage limits.

My take: I love Audio Overviews, and use it a lot. There are so many use cases opening up with these additional languages – both when it comes to easier listening in your native language, as well as learning a new language. My own kids have not used audio overviews since they had issues keeping up with the English, but now they can use it for most of their schoolwork assignments. If you are among the few who have not yet tried Audio Overviews in NotebookLM you are in for a treat, stop what you are doing and go here and try it out.


Alibaba Releases Qwen3 AI Models with Hybrid Reasoning and Open Weights

https://techcrunch.com/2025/04/28/alibaba-unveils-qwen-3-a-family-of-hybrid-ai-reasoning-models/

The News:

- Alibaba has launched Qwen3, a new family of eight open-weight language models designed for advanced reasoning and agentic tasks.

- The Qwen3 series includes six dense models and two Mixture-of-Experts (MoE) models, ranging from 0.6 billion to 235 billion parameters, with all models available for download under open licenses on platforms like Hugging Face and GitHub.

- Qwen3 introduces a hybrid reasoning system that can switch between “thinking mode” for complex, multi-step problems and “non-thinking mode” for fast, general-purpose responses, allowing users to control the model’s reasoning depth and computation cost.

- The models support 119 languages and dialects (!), and are trained on a dataset of 36 trillion tokens, offering strong performance in multilingual, coding, and mathematical tasks.

- Benchmark results show the flagship Qwen3-235B-A22B model outperforms OpenAI’s o3-mini and Google Gemini 2.5 Pro on Codeforces, LiveCodeBench, and MMLU, and the publicly available Qwen3-32B matches or exceeds DeepSeek and OpenAI o1 on several industry benchmarks.

- Qwen3 is optimized for agent integration, supporting Model Context Protocol (MCP), robust function calling, and tool use, making it suitable for enterprise and developer workflows.

My take: Is it better than Claude 3.7 for everyday coding? Definitely not. Is it a great option for agentic workflows? Absolutely! Users are now running the largest model, Qwen3-235B, at Q8 on a Mac Studio with M3 Ultra and 512 GB RAM, achieving over 20 tokens per second in streaming throughput! And the smaller 30B-A3B MoE model reached over 70 tokens per second on the same machine! While SOTA models like GPT-4o and Claude 3.7 are still the best for most tasks, models like Qwen work extremely well for specific tasks!
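Why does a 235B model fit on a 512 GB machine at all? A rough back-of-the-envelope estimate helps. The sketch below assumes Q8 means about 8 bits per weight and counts weights only. It ignores the KV cache, activations, and quantization overhead, so treat the result as a lower bound. The helper name is my own.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory needed for the model weights alone, in GB.

    Assumptions: 1 GB = 1e9 bytes, every weight uses bits_per_weight bits,
    and nothing else (KV cache, activations, metadata) is counted.
    """
    return params_billion * bits_per_weight / 8


# Qwen3-235B at roughly 8 bits per weight (assumed for Q8).
print(weight_memory_gb(235, 8))  # 235.0

# The smaller 30B MoE model at the same precision.
print(weight_memory_gb(30, 8))   # 30.0
```

Under these assumptions the largest model needs roughly 235 GB for its weights, which leaves headroom on a 512 GB machine for context and the operating system.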


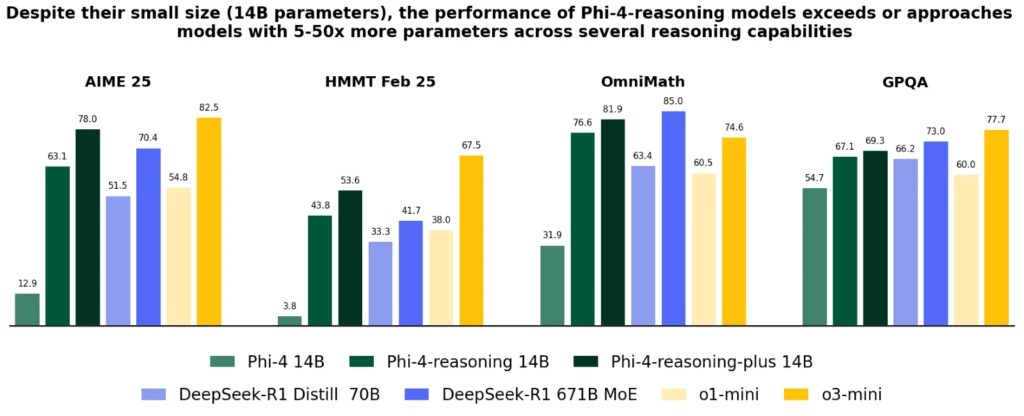

Microsoft’s Phi-4 Reasoning Models Challenge Larger AI Systems

https://azure.microsoft.com/en-us/blog/one-year-of-phi-small-language-models-making-big-leaps-in-ai/

The News:

- Microsoft has launched three new small language models (SLMs): Phi-4-reasoning, Phi-4-reasoning-plus, and Phi-4-mini-reasoning, designed to perform complex reasoning tasks while requiring less computing power than larger models.

- The flagship Phi-4-reasoning-plus model contains just 14 billion parameters yet outperforms much larger models, including OpenAI’s o1-mini and DeepSeek-R1-Distill-Llama-70B, across various benchmarks.

- On the AIME 2025 test (the qualifier for the USA Math Olympiad), Phi-4-reasoning-plus achieved higher accuracy than DeepSeek-R1, which has 671 billion parameters – nearly 48 times larger.

- The models excel in mathematical reasoning, science questions, coding, algorithmic problem-solving, and planning tasks, showing improvements of over 50 percentage points in math benchmarks compared to the base Phi-4 model.

- All three models are available under a permissive MIT license, allowing for commercial use, fine-tuning, and distillation without restrictions.

My take: Optimizing small language models for math operations seems very similar to demo coding on the Commodore 64, where groups like Fairlight continue delivering amazing visuals over 40 years after the C64 was first launched. If you had shown people in the 80s a demo like The Trip (C64 Demo) (released two weeks ago), they would probably never have thought it was possible to develop something like that on the C64, but here we are, and time has proven it possible. Much like programming the C64, I believe there are still a huge number of optimizations we can do to improve the performance of these smaller LLMs, and the Phi-4 models are another great example of this.

Xiaomi Releases MiMo: Compact 7B Open-Source Model Excels in Math and Code Reasoning

https://github.com/XiaomiMiMo/MiMo

The News:

- Xiaomi introduced MiMo, a 7-billion-parameter open-source language model designed for advanced reasoning tasks, including mathematics and code generation. Its small size makes it suitable for deployment in resource-constrained environments or on edge devices.

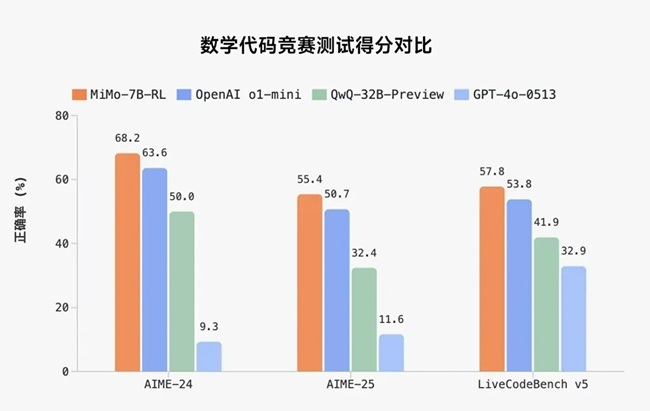

- MiMo-7B achieves benchmark scores that match or surpass much larger models, such as OpenAI’s o1-mini and Alibaba’s QwQ-32B-Preview, particularly on math (AIME 2025: 55.4 vs o1-mini’s 50.7). On coding it scores 57.8% on LiveCodeBench v5, compared with 62.6% for the larger Qwen3-30B-A3B.

- The model was pre-trained on 25 trillion tokens, with a focus on maximizing exposure to reasoning patterns, and further refined using reinforcement learning on 130,000 verifiable math and programming tasks.

- MiMo’s training pipeline includes innovations such as multi-token prediction for faster inference, a test difficulty-driven reward system to improve code evaluation, and a “Seamless Rollout Engine” that speeds up training and validation by over 2x.

- MiMo-7B is available in four variants (Base, SFT, RL-Zero, RL), all open-sourced for community use and research.

My take: How big does a model have to be for decent reasoning skills? It seems 7B can go quite a long way. If you need to run a small reasoning model locally or on an edge device, definitely check this one out. All MiMo models are released under the Apache 2.0 License, which means you can use them commercially for free.
