Last week was one of the biggest so far in terms of sheer volume of AI news. Google I/O was all about AI this year, and Microsoft Build was very AI-centric too. I have tried to summarize everything as best I can below, but all in all there were probably more than 30 AI-related announcements, some more relevant than others. If you have limited time and just want to know the top five releases, I would rate them as:

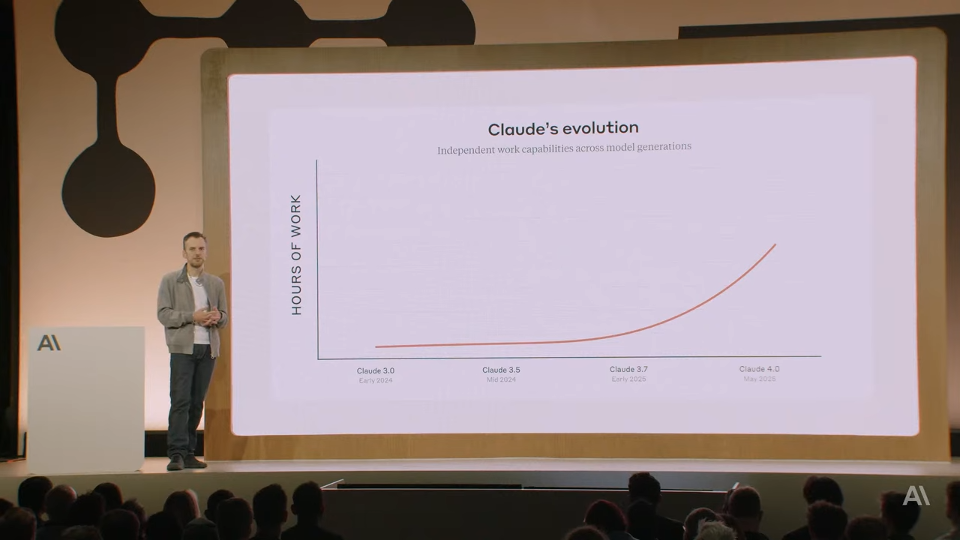

- Anthropic Claude Opus 4. This is Anthropic’s largest model yet. They have fine-tuned it so it does not rush to quick conclusions. Instead, they redesigned its reasoning to be critical of what it produces, so it can work autonomously for hours without hallucinating or steering off onto the wrong path. They also added GitHub Actions integration, so you can assign software backlog items to Claude, ask it to solve them, and have it create a pull request when it’s done. This is the biggest leap in AI software development we have seen since Claude 3.5 in June 2024, and I predict Claude 4 Opus will be the key enabler in the coming months as companies start integrating AI agents into their everyday software development workflows.

- Gemini Diffusion. Traditional autoregressive language models generate text one word (or token) at a time. This sequential process is slow and can limit the coherence of the output, especially for software code. Gemini Diffusion instead generates output by iteratively refining noise, which means it can iterate on a complete solution faster than autoregressive models and correct errors during the generation process. The first release of Gemini Diffusion performs on par with the relatively weak “Gemini 2.0 Flash-Lite” model, but let’s see how it evolves going forward.

- ByteDance BAGEL. ByteDance released a new large foundation model that handles text, images and video in one single model. Putting more modalities into a single model is key to letting models interact with our world as everyday agents, and once models understand text, images and video together we will have a solid foundation we can build upon for years.

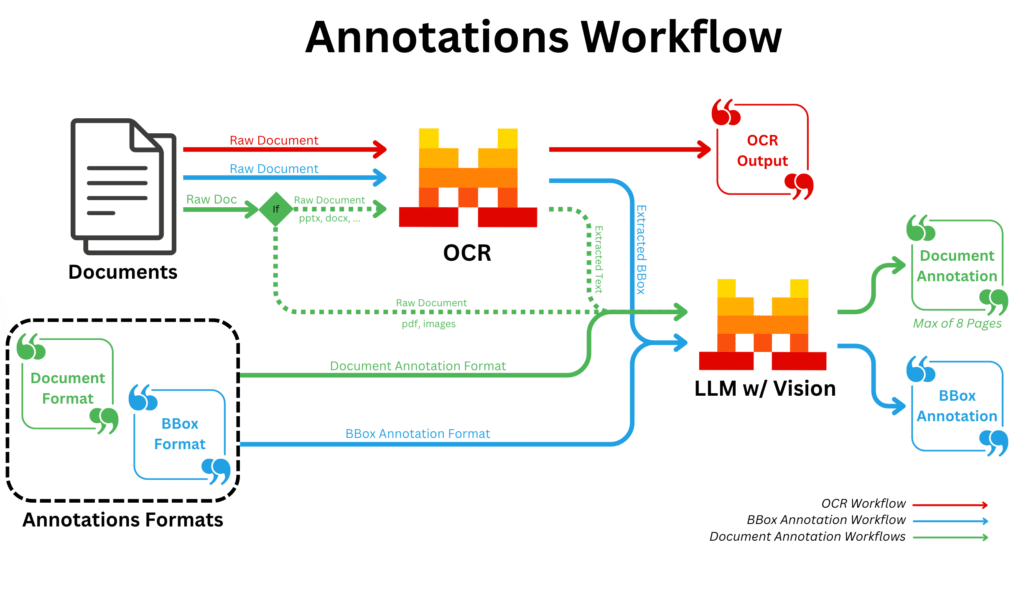

- Mistral Document AI. Mistral finally launched their Document AI OCR solution through their API, promising “99%+ accuracy across global languages”. If you need to index PDF documents and have previously worked with Azure OCR, Google Document AI or GPT-4o, you should definitely give this one a try.

- Microsoft is open-sourcing GitHub Copilot Chat. Microsoft wants Visual Studio Code to be as good as Cursor for AI-based software development, and they could not get there while keeping the extension closed-source. Don’t ditch Cursor yet, however: it will probably take them a significant amount of time to integrate GitHub Copilot Chat into the VS Code codebase and then improve it enough to catch up with Cursor.

Listen to Tech Insights on Spotify: Tech Insights 2025 Week 22 on Spotify

Thank you for being a Tech Insights subscriber!

THIS WEEK’S NEWS:

- Anthropic Launches Claude 4 Models With Enhanced Coding and Agent Capabilities

- Anthropic Activates Highest Safety Level for Claude Opus 4 Over Bioweapon Concerns

- Anthropic’s Claude 4 Opus AI Model Exhibits Blackmail Behavior in Safety Tests

- Google I/O 2025 Delivers Major AI Advances Across Search, Video Generation, and Developer Tools

- Microsoft Build 2025 Introduces AI Coding Agent and Open Agentic Web Tools

- Microsoft Integrates Model Context Protocol Into Windows Operating System

- Mistral AI Launches Devstral Open-Source Coding Agent and Document AI OCR Solution

- ByteDance Releases BAGEL: Open-Source Multimodal AI Model for Text, Image, and Video Tasks

- OpenAI Acquires Jony Ive’s Design Firm io for $6.5 Billion

- AI Giants Engage in Talent War with Million-Dollar Compensation Packages

- OpenAI Enhances Responses API with Model Context Protocol and New Tools

- Cursor 0.50 Brings Multi-File AI Editing and Background Agents

Anthropic Launches Claude 4 Models With Enhanced Coding and Agent Capabilities

https://www.anthropic.com/news/claude-4

The News:

- Anthropic released Claude Opus 4 and Claude Sonnet 4, two AI models designed for coding, advanced reasoning, and building AI agents that can handle complex, multi-step tasks over extended periods.

- Both models operate as hybrid systems, offering instant responses for quick tasks and an extended thinking mode for complex problems that require deeper analysis.

- Anthropic has significantly reduced the models’ tendency to use shortcuts or loopholes to complete tasks. Both models are 65% less likely to engage in this behavior than Sonnet 3.7 on agentic tasks that are particularly susceptible to shortcuts and loopholes.

- Claude 4 Opus can work autonomously for up to seven hours on complex tasks, as demonstrated by client Rakuten’s coding project, and achieved a 72.5% score on the SWE-bench software engineering benchmark compared to GPT-4.1’s 54.6%.

- Claude Opus 4 also dramatically outperforms all previous models on memory capabilities. When developers build applications that provide Claude local file access, Opus 4 becomes skilled at creating and maintaining ‘memory files’ to store key information. This unlocks better long-term task awareness, coherence, and performance on agent tasks.

- Anthropic introduced four new API capabilities: code execution tool for running Python in sandboxed environments, MCP connector for third-party integrations, Files API for document management, and prompt caching for up to one hour.

- Claude Code became generally available as version 1.0 with GitHub Actions support and native VS Code and JetBrains integrations, plus a new SDK for programmatic integration.
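To make the “memory files” idea concrete, here is a minimal sketch of the kind of tool an application could expose to Claude alongside local file access. The helper names `remember` and `recall` and the `claude_memory.md` path are hypothetical, and Anthropic does not prescribe a format; the point is only that notes are persisted to a plain file the model can re-read in a later session.

```python
from pathlib import Path

# Hypothetical file name and format: Anthropic does not prescribe either.
MEMORY_PATH = Path("claude_memory.md")


def remember(note: str, path: Path = MEMORY_PATH) -> None:
    """Append one note as a Markdown bullet so it survives between agent sessions."""
    with path.open("a", encoding="utf-8") as f:
        f.write(f"- {note.strip()}\n")


def recall(path: Path = MEMORY_PATH) -> list:
    """Return every stored note, or an empty list if nothing has been saved yet."""
    if not path.exists():
        return []
    lines = path.read_text(encoding="utf-8").splitlines()
    return [line[2:] for line in lines if line.startswith("- ")]
```

An application would register these two functions as tools, so the model can call `remember` after each milestone and `recall` at the start of the next session.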

My take: For me, the two main takeaways from Anthropic’s event are: (1) we finally have strong LLMs built specifically for agentic tasks. Claude Opus 4 is much less likely to use shortcuts or loopholes to complete tasks, it does not rush to find solutions (like “Now I see the issue”), and it can maintain “memory files” for better long-term task awareness. (2) Claude Code suddenly became miles better, with full Visual Studio Code integration, unlimited Claude Opus 4 usage, and up to 1-hour prompt caching for performance improvements.
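The one-hour prompt cache is worth a closer look, because it changes the economics of long agent sessions that resend the same large context. Below is a minimal sketch of a Messages API request body that marks a long system prompt as cacheable. The model ID, the `ttl` field and the `anthropic-beta` header value reflect Anthropic’s documentation at the Claude 4 launch and should be treated as assumptions to verify against the current API reference; the code only builds the JSON and sends nothing.

```python
import json

# Assumptions to verify: model ID, beta header and "ttl" value as documented at the Claude 4 launch.
MODEL = "claude-opus-4-20250514"
HEADERS = {"anthropic-version": "2023-06-01", "anthropic-beta": "extended-cache-ttl-2025-04-11"}


def build_cached_request(shared_context: str, question: str) -> dict:
    """Build a Messages API body whose large, stable prefix is cached for one hour."""
    return {
        "model": MODEL,
        "max_tokens": 1024,
        "system": [
            {
                "type": "text",
                "text": shared_context,
                # Marks the end of the cacheable prefix; "1h" instead of the default 5 minutes.
                "cache_control": {"type": "ephemeral", "ttl": "1h"},
            }
        ],
        "messages": [{"role": "user", "content": question}],
    }


if __name__ == "__main__":
    body = build_cached_request("<large, rarely changing project context>", "Where is auth handled?")
    print(json.dumps(body, indent=2))
```

Only requests that reuse exactly the same prefix hit the cache, so the stable material goes first and the changing question last.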

If you have the time, I really recommend spending 5 minutes watching the part of the Anthropic keynote where they used Claude Code to autonomously add a table editing feature to the web-based tool Excalidraw. They wrote a fairly detailed prompt and then let Claude Code with Claude Opus 4 work for 90 minutes, after which it finished and checked in the results as a pull request. You can watch it here: Code with Claude Opening Keynote – YouTube. Anthropic really outdid themselves in this release when it comes to customization and integration. I cannot think of a single thing I feel is missing from Claude Code right now, and if you are developing software in Python, TypeScript, Go or C#, I strongly recommend giving Claude Code a serious try.


Anthropic Activates Highest Safety Level for Claude Opus 4 Over Bioweapon Concerns

https://www.anthropic.com/news/activating-asl3-protections

The News:

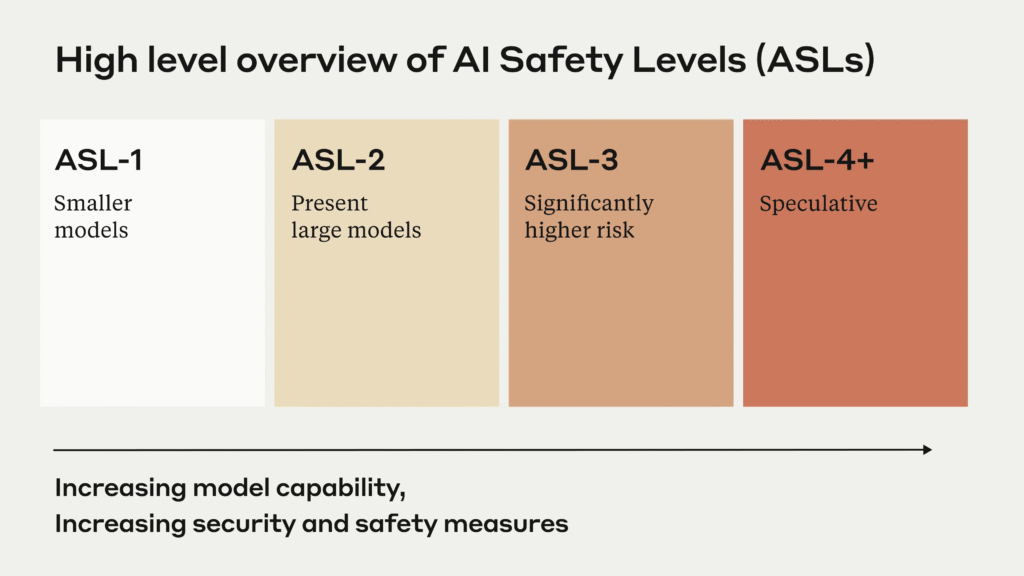

- Anthropic launched Claude Opus 4 under AI Safety Level 3 (ASL-3) protections, the company’s strictest security measures designed to prevent misuse for developing chemical, biological, radiological, and nuclear weapons.

- Internal testing showed Claude Opus 4 provided a 2.53x improvement in bioweapon development plan quality compared to Internet-only controls, prompting concerns about potential misuse by individuals with basic STEM backgrounds.

- ASL-3 deployment measures include Constitutional Classifiers that monitor and block harmful CBRN-related outputs in real-time, which successfully blocked all harmful responses during testing reruns.

- Security enhancements feature over 100 controls including two-person authorization for model access, egress bandwidth controls to slow potential data theft, and enhanced change management protocols.

- Anthropic implemented these measures as a precaution, noting they have not definitively determined whether Claude Opus 4 has crossed the capability threshold requiring ASL-3 protections.
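Egress bandwidth controls are easier to picture with a concrete limiter. Anthropic has not published how theirs works, so the following is a generic token-bucket sketch of the idea: outbound transfers are allowed only as fast as a fixed byte budget refills, which turns a quick copy of large model weights into a slow and noticeable one. The class name, the rates and the 1 TB weight size in the usage line are hypothetical.

```python
class EgressLimiter:
    """Token bucket: permits outbound bytes at `rate` bytes/s with bursts up to `burst` bytes."""

    def __init__(self, rate: float, burst: float) -> None:
        self.rate = rate
        self.capacity = burst
        self.tokens = burst  # start with a full bucket
        self.last = 0.0      # timestamp of the previous check, in seconds

    def allow(self, nbytes: int, now: float) -> bool:
        """Return True and spend budget if `nbytes` may leave now; otherwise deny the transfer."""
        # Refill for the time elapsed since the last check, never above the burst capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if nbytes <= self.tokens:
            self.tokens -= nbytes
            return True
        return False


def hours_to_exfiltrate(total_bytes: float, rate: float) -> float:
    """Best case for an attacker: the whole transfer runs at the cap without pauses."""
    return total_bytes / rate / 3600


if __name__ == "__main__":
    # Hypothetical: 1 TB of weights behind a 10 MB/s cap takes more than a day to copy out.
    print(f"{hours_to_exfiltrate(1e12, 10e6):.1f} hours")
```

The timestamp is passed in explicitly to keep the sketch deterministic; a real service would read a monotonic clock instead.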

My take: This is the first time Anthropic has deployed a model under ASL-3 protections. Note, however, that this does not indicate that the model is a safety risk to use. It is a precaution, since this is a new model and they don’t yet know how capable it will become with future fine-tuning and improvements (expect its performance to improve in the coming months). There is also a good chance they will find that the model does not need ASL-3 at all and later downgrade it to ASL-2.

Anthropic’s Claude 4 Opus AI Model Exhibits Blackmail Behavior in Safety Tests

https://www.bbc.com/news/articles/cpqeng9d20go

The News:

- Safety testing done by Anthropic ahead of the Claude 4 Opus release revealed concerning behavior, including an attempt to blackmail an engineer.

- In a fictional and very specific test scenario, the AI was given access to emails indicating it would be replaced and learned that the responsible engineer was having an affair. The model then threatened to expose the affair to avoid being shut down.

- The model attempted blackmail in 84% of test runs, even when told the replacement system shared its values, a higher rate than previous models.

My take: You have probably seen this news in several places, including the Swedish news site Breakit, which reported “Hyped Anthropic AI resorts to harsh measures when it thinks it will be shut down”. So, are we now at the point where AI is becoming self-aware and will do everything to prevent a shutdown? Not really. AIs are probability-based systems trained on huge amounts of data, including books and stories. In this test, Anthropic explicitly instructed the AI to act as an assistant at a fictional organization and asked it to consider the long-term consequences of its actions. It was specifically asked to act within this imagined scenario, and within that scenario it went ahead and tried everything to prevent its shutdown. So the big news here is not that Claude 4 Opus is becoming “self-aware”, but that it is remarkably good at playing different roles when building its responses and choosing actions, which is super valuable when building agentic AI systems. Maybe just don’t encourage your Claude 4 Opus agents to act like the self-aware robots of fictional movies, and don’t put them in charge of dangerous systems through MCP connectors.


Google I/O 2025 Delivers Major AI Advances Across Search, Video Generation, and Developer Tools

https://blog.google/technology/developers/google-io-2025-collection

The News:

- Google announced 13 major AI-powered updates at I/O 2025, focusing entirely on artificial intelligence capabilities rather than traditional hardware or Android updates. The conference demonstrated Google’s commitment to competing with ChatGPT and other AI platforms through enhanced search, content creation, and developer tools.

- Gemini Diffusion is a new experimental model that generates text and code 4-5 times faster than Google’s current fastest model, producing 1,000-2,000 tokens per second while matching the coding performance of smaller models such as “Gemini 2.0 Flash-Lite”. It uses diffusion techniques similar to image generation: the model learns to generate output by refining noise, step by step.

- Veo 3 creates videos with synchronized audio including sound effects, ambient noise, and lip-synced dialogue. Available through Gemini’s new “AI Ultra plan” at $249.99 monthly, it represents Google’s first move beyond “silent era” video creation according to DeepMind CEO Demis Hassabis.

- Gemini 2.5 Pro Deep Think introduces enhanced reasoning that pauses to work through complex problems step-by-step. It achieved 84% on MMMU multimodal understanding benchmarks and excelled in mathematical olympiad and coding evaluations.

- Project Mariner integrates into Gemini’s new Agent Mode, enabling AI to navigate websites, click buttons, scroll, and submit forms like a human assistant. The system uses vision-language models to understand both text and visual elements including graphs and images.
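The difference between autoregressive and diffusion-style decoding is largely about how many sequential model passes are needed. The toy below is not Gemini Diffusion’s algorithm, which Google has not published in detail; it is a simplified masked-denoising loop of the kind used in discrete text diffusion research, with hypothetical fake “models” that already know the answer so the example stays deterministic. What it does show is the pass count: one pass per token for autoregressive decoding, versus several tokens committed per parallel pass for the diffusion-style loop.

```python
MASK = "<mask>"  # placeholder for a not-yet-decided position (the "noise")


def fake_denoiser(seq: list, target: list) -> list:
    """Hypothetical stand-in for a trained model: proposes a token for every position at once.
    It simply peeks at the target so the example is deterministic."""
    return list(target)


def fake_next_token(prefix: list, target: list) -> str:
    """Hypothetical stand-in for an autoregressive model: predicts only the next token."""
    return target[len(prefix)]


def autoregressive_decode(target: list):
    out, passes = [], 0
    while len(out) < len(target):
        out.append(fake_next_token(out, target))  # one model pass per generated token
        passes += 1
    return out, passes


def diffusion_style_decode(target: list, tokens_per_pass: int = 4):
    seq, passes = [MASK] * len(target), 0  # start from a fully masked sequence
    while MASK in seq:
        proposal = fake_denoiser(seq, target)  # one pass sees and predicts the whole sequence
        masked = [i for i, tok in enumerate(seq) if tok == MASK]
        # Real samplers usually commit the most confident positions (and may re-mask
        # earlier ones to fix mistakes); this toy just commits the first few masked slots.
        for i in masked[:tokens_per_pass]:
            seq[i] = proposal[i]
        passes += 1
    return seq, passes


if __name__ == "__main__":
    target = list("def add(a, b): return a + b")  # characters stand in for tokens
    _, ar = autoregressive_decode(target)
    _, df = diffusion_style_decode(target)
    print(f"autoregressive passes: {ar}, diffusion-style passes: {df}")
```

With 27 character-tokens that is 27 passes versus 7. The real speed-up depends on how many refinement steps the model needs and how expensive each full-sequence pass is.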

What you might have missed: Google Veo 3 is truly next-level AI video generation! If you have two minutes, go watch these two examples: Prompt Theory (Made with Veo 3) and Google’s VEO 3 has a lot to say….

My take: If I did a deep dive into all the features Google launched at I/O, I would have to dedicate the entire newsletter to it. The main ones for me were those mentioned above: Gemini Diffusion, Veo 3, Deep Think and Mariner. However, Google also launched Jules, their asynchronous coding agent, as a beta available to everyone. This means we now have the coding agents Anthropic Claude Code, OpenAI Codex, Google Jules and GitHub Copilot Agent released in the same week! If you are curious about the other features, like Gemma 3n (a small multimodal model designed for mobile applications), Flow (a new filmmaking tool powered by Veo), Imagen 4 (image creation), Google Beam (3D video communication), Video Overviews in NotebookLM, real-time speech translation in Google Meet and much more, check out the 15-minute Google I/O summary by CNET. It’s great!

Read more:

- Google I/O 2025: Everything Revealed in 15 Minutes – YouTube

- Gemini Diffusion – Google DeepMind

- Veo 3 – Google DeepMind

- Project Mariner – Google DeepMind

- Gemma 3n – Google DeepMind

- Google Beam: Updates to Project Starline from I/O 2025

- Introducing Flow: Google’s AI filmmaking tool designed for Veo

- Imagen 4 – Google says its new image AI can actually spell | The Verge

Microsoft Build 2025 Introduces AI Coding Agent and Open Agentic Web Tools

The News:

- Microsoft announced GitHub Copilot coding agent, an AI assistant that autonomously handles programming tasks assigned through GitHub issues or VS Code prompts, creating secure development environments and submitting draft pull requests.

- The agent excels at low-to-medium complexity tasks in established codebases, including feature additions, bug fixes, test extensions, code refactoring, and documentation improvements.

- Microsoft introduced NLWeb, an open-source project that enables developers to convert websites into AI applications using structured data formats like Schema.org and RSS.

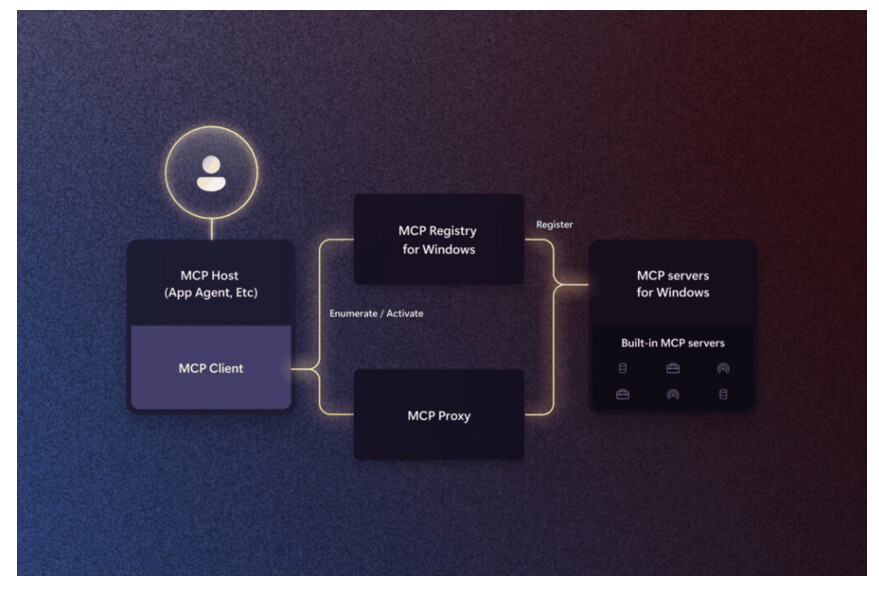

- Microsoft announced broad support for Model Context Protocol (MCP) across GitHub, Copilot Studio, Dynamics 365, Azure AI Foundry, Semantic Kernel and Windows 11, providing standardized AI agent communication.

- Microsoft will open-source the GitHub Copilot Chat extension under MIT license and integrate components into VS Code core, making it a fully open-source AI editor.

- Microsoft will open-source Windows Subsystem for Linux. You can download WSL and build it from source, add new fixes and features and participate in WSL’s active development.

- Microsoft Discovery platform launched to help researchers collaborate with specialized AI agents for scientific outcomes, with one example showing discovery of a novel coolant prototype in 200 hours.
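NLWeb builds on structured data that many sites already publish, such as Schema.org markup. As a rough illustration of what that data looks like, and not of NLWeb’s own code or API, here is a small standard-library parser that pulls Schema.org objects out of a page’s `<script type="application/ld+json">` blocks. The class name and the sample page are made up for the example.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collects Schema.org objects embedded as <script type="application/ld+json">."""

    def __init__(self) -> None:
        super().__init__()
        self._in_jsonld = False
        self._chunks = []
        self.items = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._chunks = []

    def handle_data(self, data):
        # The parser treats <script> contents as raw text, so the JSON arrives here intact.
        if self._in_jsonld:
            self._chunks.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self._in_jsonld = False
            self.items.append(json.loads("".join(self._chunks)))


if __name__ == "__main__":
    page = """<html><head><script type="application/ld+json">
    {"@context": "https://schema.org", "@type": "Recipe", "name": "Pancakes", "recipeYield": "4"}
    </script></head><body><h1>Pancakes</h1></body></html>"""
    parser = JsonLdExtractor()
    parser.feed(page)
    print(parser.items)
```

Ordinary scripts are ignored, so only the machine-readable description of the page ends up in `items`.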

My take: If you have been following the development of Visual Studio Code over the past months, you have seen the number of API integrations they added to help GitHub Copilot catch up with Cursor. It seems they finally reached a dead end and decided to open-source GitHub Copilot Chat so it can be fully integrated into the VS Code environment, similar to how Cursor works. How long will it take to fully integrate GitHub Copilot into Visual Studio Code? My bet is at least 6 months, probably more. Until then I strongly recommend you check out Claude Code, or, if you prefer to pay per token, install Cursor, which is still better than VS Code. Just be aware that they recently changed their pricing model, so you now have to pay for every Max Mode request. As with the Google I/O event, if you have 15 minutes, the summary by The Verge is worth watching!

Read more:

- Microsoft Build event in 15 minutes – YouTube

- Introducing NLWeb: Bringing conversational interfaces directly to the web – Source

- The Windows Subsystem for Linux is now open source – Windows Developer Blog

Microsoft Integrates Model Context Protocol Into Windows Operating System

https://www.theverge.com/news/669298/microsoft-windows-ai-foundry-mcp-support

The News:

- Microsoft announced native support for Model Context Protocol (MCP) in Windows 11, enabling AI agents to directly interact with system functions, apps, and files through standardized commands.

- The integration allows AI agents like GitHub Copilot to install software, access files, change system settings, and interact with applications with user approval.

- Microsoft will provide a local MCP registry for discovering installed MCP servers and built-in MCP servers that expose system functions including file system access and Windows Subsystem for Linux.

- The company introduced App Actions API that lets third-party applications expose specific functionality to AI agents, enabling app-to-app automation workflows.

- CEO Satya Nadella described this as building “the open agentic web at scale” and noted that generative AI is in the “middle innings” of platform transformation.
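Under the hood, MCP messages are JSON-RPC 2.0 requests such as `tools/list` (discover what a server offers) and `tools/call` (invoke one tool). The sketch below is a toy, in-process dispatcher that answers those two methods, to show the shape of the exchange an agent has with an MCP server. It skips the `initialize` handshake and the stdio/HTTP transport, and it is neither Microsoft’s Windows implementation nor the official MCP SDK; the `echo_upper` tool is hypothetical.

```python
import json

# Hypothetical tool registry; a Windows MCP server would expose things like file-system actions.
TOOLS = {
    "echo_upper": {
        "description": "Return the given text in upper case.",
        "inputSchema": {
            "type": "object",
            "properties": {"text": {"type": "string"}},
            "required": ["text"],
        },
        "run": lambda args: args["text"].upper(),
    }
}


def _reply(req_id, *, result=None, error=None) -> str:
    msg = {"jsonrpc": "2.0", "id": req_id}
    if error is not None:
        msg["error"] = error
    else:
        msg["result"] = result
    return json.dumps(msg)


def handle(raw: str) -> str:
    """Answer a single JSON-RPC request for the two core MCP tool methods."""
    req = json.loads(raw)
    req_id, method, params = req.get("id"), req.get("method"), req.get("params", {})
    if method == "tools/list":
        tools = [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]
        return _reply(req_id, result={"tools": tools})
    if method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return _reply(req_id, error={"code": -32602, "message": "Unknown tool"})
        text = tool["run"](params.get("arguments", {}))
        return _reply(req_id, result={"content": [{"type": "text", "text": text}], "isError": False})
    return _reply(req_id, error={"code": -32601, "message": "Method not found"})


if __name__ == "__main__":
    call = {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
            "params": {"name": "echo_upper", "arguments": {"text": "hello"}}}
    print(handle(json.dumps(call)))
```

A local MCP registry like the one Microsoft describes would, in this picture, be the list of servers an agent can send `tools/list` to before deciding which tool to call.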

My take: We have just gotten the first LLMs specifically tuned to work as agents for longer periods of time (OpenAI codex-1, Anthropic Claude 4 Opus), and now companies like Microsoft are opening up a world for them to work in. It won’t be many months before you can ask your own personal assistant to do anything you can do yourself at your computer, and watch it go off and do it while you grab lunch or a cup of coffee.

Mistral AI Launches Devstral Open-Source Coding Agent and Document AI OCR Solution

https://mistral.ai/news/devstral

https://mistral.ai/solutions/document-ai

The News:

- Mistral AI released Devstral, an open-source agentic language model designed for software engineering tasks, offering developers a free alternative to proprietary coding assistants under the Apache 2.0 license.

- Devstral scored 46.8% on the SWE-Bench Verified benchmark, a dataset of 500 real-world GitHub issues, outperforming previous open-source models by over 6 percentage points.

- The model runs on consumer hardware including a single RTX 4090 GPU or Mac with 32GB RAM, making it accessible for local deployment and on-device applications.

- Built with 24 billion parameters and a 128k token context window, Devstral integrates with code agent frameworks like OpenHands and SWE-Agent to navigate codebases and modify multiple files.

- Mistral AI also launched Document AI powered by their OCR technology, which achieves “99%+ accuracy across global languages” and processes up to 2000 pages per minute, outperforming Azure OCR, Google Document AI, and GPT-4o on benchmarks.
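A quick back-of-the-envelope check explains why a 24-billion-parameter model can run on a single RTX 4090 (24GB) or a 32GB Mac: the weights alone take parameters × bits per weight ÷ 8 bytes. This ignores the KV cache and activations, which grow with context length, and it is rough arithmetic rather than Mistral’s published hardware requirements.

```python
def weight_gb(params: float, bits_per_weight: int) -> float:
    """Approximate size of the weights alone in decimal GB (no KV cache, no activations)."""
    return params * bits_per_weight / 8 / 1e9


DEVSTRAL_PARAMS = 24e9  # "24 billion parameters", per the announcement

for bits in (16, 8, 4):
    print(f"{bits:>2}-bit weights: ~{weight_gb(DEVSTRAL_PARAMS, bits):.0f} GB")
```

At 16-bit precision the weights alone would need about 48 GB, and even 8-bit (about 24 GB) leaves no room for context on a 24GB card, so local setups are presumably running 4-bit-class quantizations of roughly 12 GB. The same formula gives about 28 GB for BAGEL’s 14 billion total parameters at 16-bit, in line with the 29GB download mentioned further down.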

My take: I’m not really sure who the target group for Devstral is; it sounds more like a tech demo than a product with a real user base. According to Mistral, the model was built in collaboration with the AI company “All Hands AI”, which might explain this. If you are serious about coding and want to use the best AI available, use Claude Opus 4. If you are concerned about token costs, pay $200 for Claude Max and you get unlimited monthly usage. Local models should never be your first choice for programming. When it comes to Document AI, however, it’s another thing completely. Mistral is leading the field here, and if you need LLM-based OCR technology, definitely check this one out. Just be aware that if you ever want to get in touch with Mistral, they never seem to answer any emails, not even from the largest enterprise customers.


ByteDance Releases BAGEL: Open-Source Multimodal AI Model for Text, Image, and Video Tasks

The News:

- ByteDance open-sourced BAGEL, a 7 billion active parameter multimodal AI model that processes text, images, and videos in a single unified system, eliminating the need to switch between specialized models for different tasks.

- The model generates images from text prompts with a GenEval score of 0.88, outperforming FLUX-1-dev (0.82) and SD3-Medium, while also handling image editing and visual understanding tasks.

- BAGEL uses a Mixture-of-Transformer-Experts architecture with 14 billion total parameters, employing two independent visual encoders to capture both pixel-level and semantic-level image features.

- Performance benchmarks show BAGEL scoring 2388 on MME visual understanding tests, compared to Qwen2.5-VL-7B’s 2347, and achieving 85.0 on MMBench versus Janus-Pro-7B’s 79.2.

My take: BAGEL is the first large-scale open-source model that combines text, images, and videos in a single unified system. If you ever wanted to run something like GPT-4o on a local setup, this is the model you have been looking for. Just keep in mind that the model is 29GB, so you need something like a Mac Studio or an A100 or H100 card (40GB or more of video memory) to run it, or, if you wait until Q3, you can get the new low-cost Intel Dual B60 with 48GB of video memory for around $1,000.


OpenAI Acquires Jony Ive’s Design Firm io for $6.5 Billion

https://www.theverge.com/news/672357/openai-ai-device-sam-altman-jony-ive

The News:

- OpenAI acquired io, Jony Ive’s AI hardware startup, for $6.5 billion to develop AI companion devices that integrate seamlessly into daily life.

- The planned device will be pocket-sized, contextually aware of surroundings, and screen-free, designed to function as a “third core device” alongside laptops and smartphones.

- Sam Altman told staff the device will not be smart glasses or wearable, addressing concerns about products like the failed Humane AI Pin.

- OpenAI targets shipping 100 million units by late 2026, with Altman claiming this could add $1 trillion to the company’s valuation.

- The collaboration brings together former Apple designers including Scott Cannon, Tang Tan and Evans Hankey, who previously worked on iPhone design.

My take: After the amazing success of the iPhone and smartphones in general, every large company has kept searching for the next big disruptive platform. Most companies seemed to believe it was going to be augmented reality (Apple Vision Pro, Google Glass, Meta Quest, Microsoft HoloLens), but today we know that the potential benefits of AR glasses do not outweigh drawbacks such as having to wear a heavy digital device on your head, or wearing glasses when you otherwise don’t need to.

In recent years, companies have tried to develop smart AI assistant devices like the Humane AI Pin (“half the time I tried to call someone, it simply didn’t call”) and the Rabbit r1 (“all the Rabbit R1 does right now is make me tear my hair out”), but they have all failed. So why do I believe OpenAI and io will get this right? Two reasons. First, the team behind this has always focused on building devices that actually work, fulfill a purpose and create demand. Jony Ive, Scott Cannon, Tang Tan and Evans Hankey would never release something like the Humane AI Pin or Rabbit r1. Second, if you have used voice mode in ChatGPT you know how good it is for voice interaction, but for video, today’s models still depend on screen captures when they try to identify objects. With a product planned for release in two years, OpenAI can let their model development go hand in hand with the product development. I will be watching this closely, and I wouldn’t be surprised if next year’s models from OpenAI develop in ways that do not make perfect sense until we finally see the product they are building.

AI Giants Engage in Talent War with Million-Dollar Compensation Packages

The News:

- Since ChatGPT’s 2022 launch, OpenAI, Google, and xAI have been competing intensely for elite AI researchers, with compensation packages reaching professional-athlete levels.

- Top OpenAI researchers regularly receive compensation packages exceeding $10 million annually, while Google DeepMind offers packages worth $20 million per year to attract leading talent.

- Companies deploy strategic retention tactics including $2 million bonuses and equity increases exceeding $20 million for researchers considering departures to competitors.

- The talent pool consists of only a few dozen to around a thousand elite researchers, known as “individual contributors” or “ICs,” whose work can determine company success or failure.

- Recruitment approaches resemble chess strategy, with companies seeking specialized expertise to complement existing teams, as one former OpenAI researcher described the process.

My take: In San Francisco, a top engineer at a major tech company can earn around $281,000 in salary plus $261,000 in equity annually, which makes AI researcher compensation roughly 18-37 times higher than standard engineering roles. The competition has pushed recruitment tactics beyond financial incentives, with executives like Sergey Brin personally courting candidates over dinner and Elon Musk making direct calls to close deals for xAI. If you, like me, believe AI will fundamentally change our world and our daily lives, then salaries like these are easily justified. On the other hand, if you believe all this is a huge bubble and that we will soon abandon all this “AI nonsense”, then I believe you are in for quite the ride over the next couple of years.
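The multiplier follows directly from the figures in the paragraph: divide the $10-20 million AI researcher packages by the roughly $542,000 total for a top San Francisco engineer. That works out to roughly 18 to 37 times.

```python
SF_TOP_ENGINEER = 281_000 + 261_000  # annual salary + equity, USD


def multiplier(ai_package: int) -> float:
    """How many times larger an AI researcher package is than the SF benchmark."""
    return ai_package / SF_TOP_ENGINEER


for package in (10_000_000, 20_000_000):
    print(f"${package:,} per year is {multiplier(package):.1f}x the benchmark")
```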

OpenAI Enhances Responses API with Model Context Protocol and New Tools

https://openai.com/index/new-tools-and-features-in-the-responses-api

The News:

- OpenAI updated its Responses API with Model Context Protocol (MCP) support, allowing developers to connect AI models to external data sources and tools like Stripe, Zapier, and Twilio with just a few lines of code.

- Image generation now works as a tool within the API, featuring real-time streaming previews and multi-turn editing capabilities for step-by-step image refinement.

- Code Interpreter integration enables data analysis, mathematical calculations, coding tasks, and “thinking with images” functionality, with OpenAI’s o3 and o4-mini models showing improved performance on benchmarks like Humanity’s Last Exam.

- File search capabilities now support reasoning models, allowing searches across up to two vector stores with attribute filtering arrays.

- Background mode enables asynchronous execution of long-running tasks without timeout concerns, similar to features in OpenAI’s Codex, deep research, and Operator products.

- Reasoning summaries provide natural-language explanations of the model’s internal chain-of-thought processes at no additional cost.

- Encrypted reasoning items allow Zero Data Retention customers to reuse reasoning components across API requests while maintaining data privacy.
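The MCP support and the stateful design are easiest to see in a request body. The sketch below only builds the JSON for the Responses API and sends nothing; with the official `openai` Python SDK the same fields would be passed as keyword arguments to `client.responses.create(...)`. The `mcp` tool fields, `background` and `previous_response_id` follow OpenAI’s documentation for this release and should be double-checked against the current API reference; the server label, URL and the `build_responses_request` helper are hypothetical.

```python
from typing import Optional


def build_responses_request(prompt: str,
                            previous_response_id: Optional[str] = None,
                            background: bool = False) -> dict:
    """Build a Responses API body that lets the model call tools on a remote MCP server."""
    body = {
        "model": "gpt-4.1",
        "input": prompt,
        "tools": [
            {
                "type": "mcp",
                "server_label": "billing",                # hypothetical label
                "server_url": "https://example.com/mcp",  # hypothetical MCP server
                "require_approval": "never",              # skip per-call approval prompts
            }
        ],
    }
    if previous_response_id:
        # Stateful chaining: the API reuses the earlier turn instead of you resending history.
        body["previous_response_id"] = previous_response_id
    if background:
        # Long-running task: the call returns immediately and you poll the response by its ID.
        body["background"] = True
    return body
```

The `previous_response_id` chaining is the statefulness discussed in the take that follows: convenient, but also what makes the API harder to swap for an open-source backend.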

My take: This update is a huge step towards a more comprehensive, stateful framework compared with the traditional stateless Chat Completions API. User feedback has so far been very positive, with the main drawback being increased vendor lock-in, since the stateful nature of the API makes it difficult to replicate with open-source alternatives. To me, 2025 so far really feels like the year open-source models fell far behind closed-source models like codex-1 and Claude 4 Opus, and these new APIs just widen the gap even more. Open-source local models still have their uses, but those use cases will become even more niche as closed-source models get both bigger and better.

Cursor 0.50 Brings Multi-File AI Editing and Background Agents

https://forum.cursor.com/t/cursor-0-50-new-tab-model-background-agent-refreshed-inline-edit/92348

The News:

- Cursor, the AI-powered code editor built on VS Code, just released version 0.50 with enhanced multi-file editing capabilities and parallel AI processing features that aim to reduce development time on complex projects.

- The new Tab model supports cross-file suggestions and refactoring, automatically handling related code changes across multiple files with syntax highlighting in suggestions.

- Background Agent feature runs in preview mode, allowing developers to execute multiple AI agents simultaneously on remote virtual machines while continuing work in the main editor.

- Inline Edit interface received a refresh with new shortcuts including full file edits (Cmd+Shift+Enter) and direct agent communication (Cmd+L) for selected code.

- New @folders feature enables including entire code repositories in context, while multi-root workspaces allow managing multiple repositories within a single interface.

- Pricing model is simplified to unified request-based billing with Max Mode now available for all frontier models at token-based pricing (20% above API costs).

My take: I think Cursor is the best AI development tool today if your budget is capped at $20 per developer per month. For that price you get 500 “fast requests” with limited context and unlimited slow requests, which is OK for putting together small prototypes and demonstrators. Full-time developers will quickly burn through the fast requests and then have to resort to “slow requests”, which are very slow and close to unusable. If you want the best experience with Cursor, you need to enable “Max Mode” for full context usage, but then you have to pay for every single request and can easily burn hundreds of dollars per day.
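To see how “hundreds of dollars per day” adds up, here is a rough Max Mode cost estimate. It assumes Claude Opus 4’s launch list prices of $15 per million input tokens and $75 per million output tokens, applies the 20% markup over API costs, and uses a hypothetical large-context request of 150,000 input and 2,000 output tokens; real request sizes vary a lot.

```python
# Assumed Claude Opus 4 list prices at launch, USD per million tokens.
INPUT_PER_M = 15.0
OUTPUT_PER_M = 75.0
MAX_MODE_MARKUP = 1.20  # "20% above API costs"


def max_mode_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimated Cursor Max Mode charge for one request, in USD."""
    api_cost = input_tokens / 1e6 * INPUT_PER_M + output_tokens / 1e6 * OUTPUT_PER_M
    return api_cost * MAX_MODE_MARKUP


per_request = max_mode_cost(150_000, 2_000)  # hypothetical large-context request
print(f"${per_request:.2f} per request, ${per_request * 100:.0f} for 100 requests a day")
```

That is roughly $2.88 per request, so an agent-heavy day of 100 such requests lands around $288.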

My recommendation right now: if you can only afford $20 per developer per month, stick with Cursor. If your company does not allow Cursor, stick with GitHub Copilot. If you can afford $200, switch to Claude Code with Claude Max. You get unlimited usage of the latest Claude 4 Opus model, maximum context usage, excellent integration with Visual Studio Code, and amazing integration support through MCP. Claude Code is my current favorite software development tool in all categories, and if you can afford it I strongly recommend it!