Last week Elon Musk and xAI finally launched Grok 4, their next-generation model, and at the same time unveiled a $300-per-month AI subscription plan called SuperGrok Heavy. “With respect to academic questions, Grok 4 is better than PhD level in every subject, no exceptions,” said Elon Musk during a livestream Wednesday night. “At times, it may lack common sense, and it has not yet invented new technologies or discovered new physics, but that is just a matter of time.”

Looking at the benchmarks, it is quite impressive, especially for math and problem solving. This is truly a next-generation model! It just has one small, tiny property that might be an issue for you: when asked sensitive questions, it will always answer exactly like Elon Musk. And I do mean EXACTLY like Elon Musk: it will in fact search X for Elon Musk’s posts and use them as reference. Sounds too crazy to be true? Sorry, but it is true; you can see for yourself here, here, here, here, here, and here.

This clearly shows the power these AI companies have to spread biased information, should they choose to go in that direction. It might also explain why the AI Act will probably still roll out in August as planned, even though 46 European CEOs from companies like Airbus, BNP Paribas, Carrefour, and Philips sent an open letter to European Commission President Ursula von der Leyen requesting a two-year “clock-stop”, and even though the technical standards companies need to follow won’t be finalized until August 2027 while companies have to meet them from August 2025. Looking at how Elon Musk is using Grok for his own political agenda, I can understand why many feel we need to rush to implement something like the AI Act, but not every company is like X with Elon Musk as its CEO.

Thank you for being a Tech Insights subscriber!

Listen to Tech Insights on Spotify: Tech Insights 2025 Week 29 on Spotify

THIS WEEK’S NEWS:

- Microsoft Reports $500 Million AI Cost Savings Amid Major Layoffs

- Google Hires Windsurf CEO in $2.4 Billion AI Talent Deal

- European CEOs Request Two-Year Delay for EU AI Act Implementation

- xAI Launches Grok 4 AI Models with Record Benchmark Performance

- Hugging Face Launches Reachy Mini Desktop Robot for AI Development

- Perplexity Launches Comet AI-Powered Browser with Agentic Assistant

- Anthropic Launches Free Educational Platform with Six Technical Courses

- Mistral Releases Devstral Medium and Upgrades Small Model for Coding Agents

- Microsoft Releases Phi-4-mini-flash-reasoning with 10x Speed Boost for Edge AI

Microsoft Reports $500 Million AI Cost Savings Amid Major Layoffs

The News:

- Microsoft’s internal AI tools delivered over $500 million in cost savings from call center operations alone in 2024, demonstrating how enterprise AI adoption can generate substantial operational efficiencies.

- Copilot now generates 35% of code for new Microsoft products, accelerating development timelines and reducing engineering costs.

- AI-powered sales tools boost revenue per salesperson by 9%, with the technology handling interactions with smaller customers and generating tens of millions in additional revenue.

- The company eliminated approximately 15,000 jobs in 2025, including recent cuts to 9,000 positions in sales and customer-facing roles.

- Microsoft invested $80 billion in AI infrastructure in 2025, with more than half allocated to U.S. data center expansion.

My take: Salespeople get more efficient with AI, programmers get more efficient with AI, customer support gets more efficient with AI; everyone gets more efficient with AI. If you are still wondering when it’s the right time to start the AI journey within your company, the answer is simple – you should have started yesterday. In 2025, AI tools have grown from single-use-case chatbots and code assistants into complete production assistants that perform tasks autonomously while you do something else. This is largely thanks to newer models such as Claude 4 and GPT-4.1, but also to frameworks and integrations becoming mature and production ready. If 2024 was the year most people started using chatbots, 2025 is the year most organizations begin to increase their productivity for real with AI agents.

Google Hires Windsurf CEO in $2.4 Billion AI Talent Deal

https://www.cnbc.com/2025/07/11/google-windsurf-ceo-varun-mohan-latest-ai-talent-deal-.html

The News:

- Google acquired key talent from Windsurf, an AI coding startup that helps developers write and debug code using artificial intelligence, providing time-savings for software development teams and expanding AI-assisted programming capabilities.

- The deal brings Windsurf CEO Varun Mohan and co-founder Douglas Chen to Google DeepMind, where they will focus on “agentic coding” initiatives within the Gemini AI project.

- Google pays $2.4 billion for nonexclusive licensing rights to Windsurf technology while the startup continues operating independently under interim CEO Jeff Wang.

- The agreement follows the collapse of OpenAI’s $3 billion acquisition attempt, which fell apart due to tensions with Microsoft over intellectual property access rights.

- Windsurf serves over one million developers and generates approximately $40 million in annual recurring revenue, having raised $243 million at a $1.25 billion valuation.

My take: Windsurf (founded in 2021) has over 300 employees listed on LinkedIn. Now the CEO, a co-founder, and a few select key employees have left the company to become full-time employees at Google DeepMind, while Google gets nonexclusive licensing rights to the “Windsurf technology”. Based on the events around Cursor and Windsurf over the past six months, I am not so sure that companies like Cursor, Windsurf, and even Lovable will be able to survive long-term. They are AI wrappers with no core product IP, and API prices for the world’s most powerful models are currently going through the roof. Not even the $200 subscriptions like Perplexity Max or Cursor Ultra come close to Anthropic’s $200 flat-rate offering in terms of effective API usage, so the AI-wrapper companies either have to offer an inferior product, lose money, or raise their prices far above where they are today. And yes, Anthropic blacklisted Windsurf a few weeks ago while it was in discussions with OpenAI, meaning Windsurf users lost direct access to Claude models while programming. Good luck keeping that ship afloat.

European CEOs Request Two-Year Delay for EU AI Act Implementation

https://www.euronews.com/next/2025/07/03/europes-top-ceos-call-for-commission-to-slow-down-on-ai-act

The News:

- Forty-six chief executives from major European companies including Airbus, BNP Paribas, Carrefour, and Philips sent an open letter to European Commission President Ursula von der Leyen requesting a two-year “clock-stop” on the AI Act before key obligations take effect in August 2025.

- The executives argue that “unclear, overlapping and increasingly complex EU regulations” create legal uncertainty that threatens Europe’s ability to compete globally in AI development and deployment.

- The AI Act’s general-purpose AI model rules are scheduled to begin August 2, 2025, but the corresponding Code of Practice that companies need for compliance guidance remains unfinished despite an original May 2025 deadline.

- High-risk AI system obligations are set to take effect in August 2026, with companies warning that rushed implementation could prevent European industries from deploying AI “at the scale required by global competition”.

- The letter states that a postponement “would send innovators and investors around the world a strong signal that Europe is serious about its simplification and competitiveness agenda”.

My take: The European Commission has already responded to this request and firmly rejected it, stating there would be “no stop the clock”, “no grace period”, and “no pause”. I do not think the AI Act in itself is the main issue here; it is the AI Act in combination with existing laws like GDPR, the Digital Services Act (DSA), and sector-specific regulations in finance, healthcare, and automotive that makes all this a very complex and problematic framework for companies to follow. Add to this that there are no clear rules for how to use personal data for training generative AI, making it difficult to comply with both GDPR and the AI Act. And despite a May 2025 deadline, the Code of Practice that companies need for compliance guidance remains unfinished, and the technical standards companies need to follow won’t be finalized until August 2027, even though companies have to meet them from August 2025. It’s really a total mess, but I have no doubt that the EU will roll this out as it is.

Read more:

- EU Refuses to Delay AI Act – ITdaily.

- Implementation of the AI Act: Numerous Tensions with Existing Regulations

xAI Launches Grok 4 AI Models with Record Benchmark Performance

The News:

- xAI released Grok 4 and Grok 4 Heavy, two new AI models designed to compete with ChatGPT and Gemini.

- Grok 4 achieved 16.2% on the ARC-AGI-2 visual pattern recognition test, nearly double the score of Claude Opus 4, the next best commercial model.

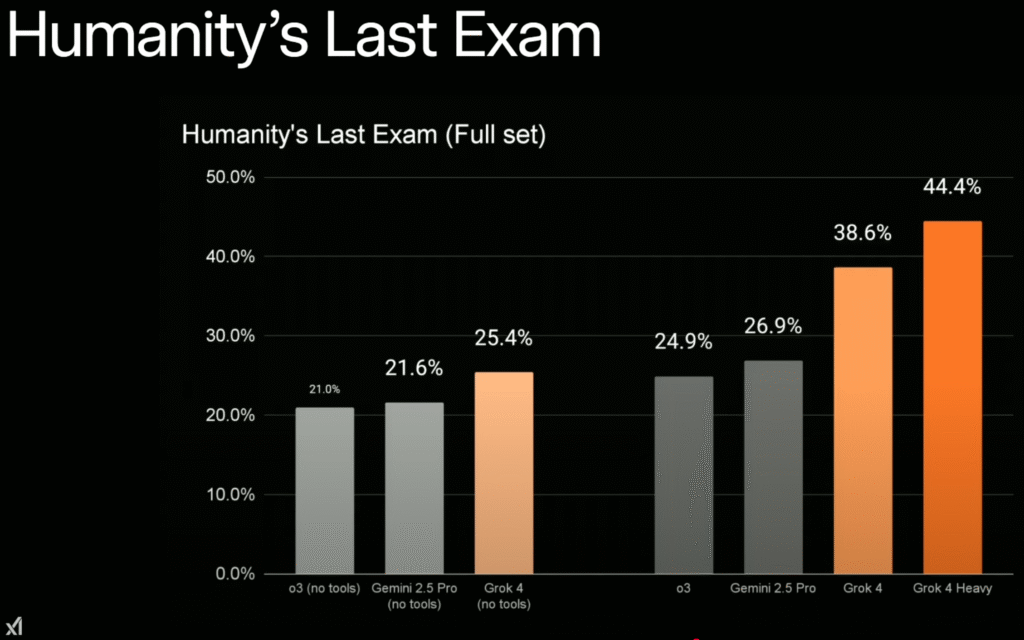

- The model scored 25.4% on Humanity’s Last Exam without external tools, outperforming Google’s Gemini 2.5 Pro (21.6%) and OpenAI’s o3 high (21%).

- Grok 4 Heavy uses multiple AI agents that work simultaneously on problems and compare results to find optimal solutions, achieving 44.4% on Humanity’s Last Exam with tools enabled.

- xAI launched SuperGrok Heavy, a $300 monthly subscription plan that provides early access to Grok 4 Heavy and upcoming features, making it the most expensive AI subscription among major providers.

- The company plans to release an AI coding model in August, a multi-modal agent in September, and a video generation model in October.
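xAI has not published how Grok 4 Heavy’s agents “compare results”, so the following is only a rough illustration of the general idea: a minimal Python sketch that runs several agents on the same problem in parallel and keeps the answer most of them agree on (a simple majority vote, often called self-consistency). The majority vote is an assumption, the agents are stub functions standing in for model calls, and `solve_with_parallel_agents` is a hypothetical name, not xAI’s API.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List

# Hypothetical "agent": any callable that takes a problem and returns an answer.
Agent = Callable[[str], str]


def solve_with_parallel_agents(problem: str, agents: List[Agent]) -> str:
    """Run every agent on the same problem concurrently, then keep the answer
    that the most agents agree on. Assumption: majority vote; xAI's actual
    selection method is not public."""
    with ThreadPoolExecutor(max_workers=len(agents)) as pool:
        # pool.map returns results in the same order as the agents list.
        answers = list(pool.map(lambda agent: agent(problem), agents))
    # most_common(1) returns [(answer, count)]; on a tie, the answer seen
    # first wins because Counter preserves insertion order.
    best_answer, _votes = Counter(answers).most_common(1)[0]
    return best_answer


# Stub agents standing in for independent model calls.
agents = [
    lambda p: "42",
    lambda p: "42",
    lambda p: "41",
]
print(solve_with_parallel_agents("What is 6 * 7?", agents))  # prints 42
```

A production system might instead normalize answers before voting, or let a separate judge model pick among candidates when there is no clear majority.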

My take: My, oh my. I don’t even know where to start. First, when asked about polarizing topics such as the Israel-Palestine conflict, abortion, or immigration, Grok 4’s reasoning mode often searches for Elon Musk’s recent posts on X before formulating an answer! Second, in its “think mode”, Grok 4 sometimes explicitly states that it is consulting Musk’s views for context, even if the user’s question does not mention Musk at all. Sounds totally unreal? Well, here are seven links with details. Grok 4 does not consult Elon Musk’s opinions for all topics, only for certain controversial subjects. So who is Grok 4 for? If you are looking for an AI model for programming, this is not it: Grok 4 performs much worse than Claude Opus 4 and OpenAI o3 on LiveBench Agentic Coding. The strengths of Grok 4 seem to lie in reasoning and math. If you want an AI chatbot with a strong political bias, and in particular one that aligns exactly with Elon Musk’s world view, then this is the AI model for you. Maybe you should even get the $300/month SuperGrok Heavy to show your support. Otherwise, I would recommend staying as far away from Grok 4 as possible right now.


Hugging Face Launches Reachy Mini Desktop Robot for AI Development

https://huggingface.co/blog/reachy-mini

The News:

- Hugging Face released Reachy Mini, an 11-inch desktop robot designed for AI experimentation, human-robot interaction, and educational programming, offering developers an affordable entry point into physical AI at $299.

- The robot features 6 degrees of freedom head movement, full body rotation, animated antennas, a wide-angle camera, microphones, and a 5W speaker for multimodal AI interactions.

- Two models are available: Reachy Mini Lite ($299) requires connection to Mac or Linux computers, while Reachy Mini Wireless ($449) includes a Raspberry Pi 5, WiFi, battery power, and four microphones for standalone operation.

- Ships as a DIY kit with over 15 pre-programmed behaviors available at launch through the Hugging Face Hub, including facial tracking, hand tracking, and dancing capabilities.

- Fully programmable in Python with planned support for JavaScript and Scratch, integrating directly with Hugging Face’s ecosystem of 1.7 million AI models and 400,000 datasets.

- Deliveries begin late summer 2025 for the Lite version and fall 2025 through 2026 for the Wireless model.

My take: If you have a minute, go watch their launch video. Then you will know what to buy your kids for Christmas this year! The Reachy Mini Lite connects to your computer, which means it can access virtually any AI model available through Hugging Face. So how come the AI community Hugging Face is releasing an AI robot? Well, in April this year Hugging Face acquired the French company Pollen Robotics, founded in 2016, which specializes in open-source humanoid robots for real-world applications. The acquisition post by Hugging Face also describes Pollen’s larger humanoid, Reachy 2, which uses LiDAR for navigation, can handle payloads up to 3kg with bio-inspired arms, and has fully open-source hardware designs. Reachy Mini is a super exciting project, and I really hope they are able to deliver what they promise (based on my experience with Kickstarters, I have learned to be skeptical). But do keep an eye out for this one; it might be the Christmas gift of the year!

Read more:

- Your open-source companion – Reachy Mini – YouTube

- Hugging Face Acquires Pollen Robotics to Unleash Open Source AI Robots | WIRED

Perplexity Launches Comet AI-Powered Browser with Agentic Assistant

https://www.perplexity.ai/hub/blog/introducing-comet

The News:

- Perplexity launched Comet, an AI-native web browser that integrates the company’s search engine as the default and includes an AI assistant capable of automating tasks like booking meetings, sending emails, and making purchases. The browser aims to reduce context-switching between tabs and applications by consolidating workflows into conversational interactions.

- Comet Assistant operates through a sidebar that can see and understand content on any active webpage, allowing users to ask questions about YouTube videos, analyze Google Docs, or summarize articles without switching tabs. Users can highlight text on any page to get instant explanations or ask the assistant to perform actions based on what they’re viewing.

- Built on Chromium, Comet is compatible with existing Chrome extensions and allows one-click migration of bookmarks and settings. Unlike Chrome, however, the browser includes a native ad blocker and employs a hybrid AI architecture combining local processing for basic tasks with cloud-based APIs for complex operations.

- Currently available only to Perplexity Max subscribers ($200 per month) with invite-only access rolling out gradually through summer.
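Perplexity has not documented how Comet decides what runs locally and what goes to the cloud. As an illustration of the hybrid idea only, here is a minimal Python sketch of such a router, assuming a simple rule: basic task types with small context stay on-device, everything else goes to a cloud API. The task kinds in `LOCAL_TASKS`, the `local_token_limit` threshold, and the function names are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical task kinds that a small on-device model might handle well.
LOCAL_TASKS = {"summarize_selection", "explain_highlight", "classify_tab"}


@dataclass
class Task:
    kind: str            # what the user asked the assistant to do
    context_tokens: int  # rough size of the page/selection sent with the request


def route(task: Task, local_token_limit: int = 2_000) -> str:
    """Send small, basic tasks to a local model and everything else to a cloud API."""
    if task.kind in LOCAL_TASKS and task.context_tokens <= local_token_limit:
        return "local"
    return "cloud"


print(route(Task("explain_highlight", 300)))      # prints local
print(route(Task("book_meeting", 300)))           # prints cloud
print(route(Task("summarize_selection", 9_000)))  # prints cloud
```

A rule-based router like this is cheap and predictable; a real browser could just as well use a small classifier model to make the decision.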

My take: Maxwell Zeff at TechCrunch has been running the Comet browser for a while now, and he writes: “During our testing, we found Comet’s AI agent to be surprisingly helpful for simple tasks, but it quickly falls apart when given more complex requests”. Perplexity, like so many other AI startups, does not have its own frontier AI models and has to rely on companies like Anthropic and OpenAI to deliver value to its customers. This means it constantly has to be on the lookout for new wrappers to launch as new products, such as web browsers or smart assistants. I do not believe that “web search” will be a good enough offering from a third-party company like Perplexity in 2026. It probably won’t take long for Anthropic and OpenAI to catch up and deliver an even better web search experience, since they have so much more compute power to use. But today Perplexity is still the king of AI search engines, and I still use it every single day.


Anthropic Launches Free Educational Platform with Six Technical Courses

https://anthropic.skilljar.com

The News:

- Anthropic launched Anthropic Academy, a free educational platform hosted on Skilljar that offers six comprehensive courses covering Claude API development, Model Context Protocol, and Claude Code integration.

- The platform includes dozens of lectures, self-guided quizzes, and certificates upon completion for each course.

- Course offerings include “Claude with the Anthropic API”, “Claude with Amazon Bedrock”, “Claude with Google Cloud’s Vertex AI”, “Introduction to Model Context Protocol”, “Model Context Protocol: Advanced Topics”, and “Claude Code in Action”.

- Each course provides interactive examples and hands-on coding challenges, with the curriculum developed using insights from developers actively using Claude in real-world applications.

- The courses favor Claude 3 Haiku, Anthropic’s lowest-cost model, to keep API costs down for students following along with materials.

My take: There are lots of good tips in these courses, and I think they are especially interesting if you are working with MCP integrations. If you are working with any kind of data engineering workflows, the class “Model Context Protocol: Advanced Topics” should be mandatory reading, with topics like “Implement sampling callbacks to enable server-initiated LLM requests” and “Deploy scalable MCP servers using stateless HTTP configuration”. And if you are not working with MCP integrations, you probably should be, in which case I would recommend the starter class “Introduction to Model Context Protocol”.
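The “sampling callbacks” topic refers to MCP’s sampling feature, where an MCP server asks the client to run an LLM completion on its behalf, so the server does not need its own model API key. MCP messages are JSON-RPC 2.0, and the sketch below builds a minimal `sampling/createMessage` request with Python’s standard `json` module, based on my reading of the MCP specification. Only the required fields are included, the helper name `build_sampling_request` is hypothetical, and in practice the official MCP SDKs construct these messages for you.

```python
import json


def build_sampling_request(request_id: int, user_text: str, max_tokens: int = 200) -> str:
    """Return a JSON-RPC 2.0 'sampling/createMessage' request as a string.

    In MCP sampling the direction is reversed compared with tool calls:
    the server sends this request to the client, and the client's LLM
    produces the completion.
    """
    request = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "sampling/createMessage",
        "params": {
            # Conversation to send to the client's model.
            "messages": [
                {"role": "user", "content": {"type": "text", "text": user_text}}
            ],
            # Upper bound on how many tokens the client's model may generate.
            "maxTokens": max_tokens,
        },
    }
    return json.dumps(request)


print(build_sampling_request(1, "Summarize this table schema in one sentence."))
```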

Mistral Releases Devstral Medium and Upgrades Small Model for Coding Agents

https://mistral.ai/news/devstral-2507

The News:

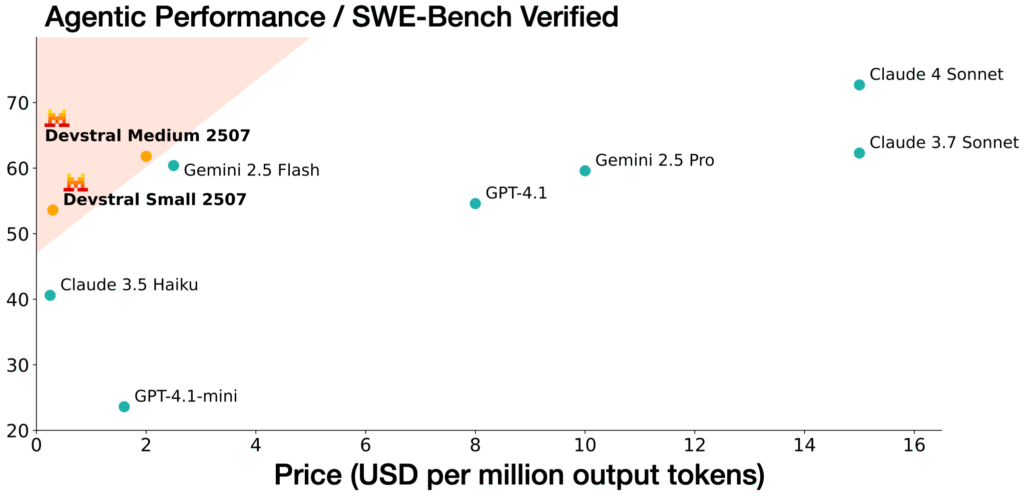

- Mistral AI released Devstral Medium and upgraded Devstral Small to version 1.1, both designed for software engineering agents that automate coding tasks across multiple files and repositories.

- Devstral Small 1.1 achieves 53.6% on SWE-Bench Verified benchmark, setting a new state-of-the-art for open models without test-time scaling.

- The upgraded Small model operates with 24 billion parameters and runs on a single RTX 4090 or Mac with 32GB RAM, making it accessible for local deployment.

- Devstral Medium scores 61.6% on SWE-Bench Verified and is available only through API, not as open weights.

- Both models support 128k token context windows, allowing them to process entire code repositories and handle complex multi-file editing tasks.

- Pricing remains competitive: Devstral Small 1.1 costs $0.1/M input tokens and $0.3/M output tokens, while Devstral Medium costs $0.4/M input tokens and $2/M output tokens.
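To see why a 24-billion-parameter model can run on a single RTX 4090 (24 GB of VRAM), here is a back-of-the-envelope estimate of weight memory in Python. The announcement does not say which precision the local setup uses, so the bit widths below are assumptions. The estimate also covers only the weights; the KV cache and activations come on top and grow with context length.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory needed just to hold the model weights, in GB (1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


DEVSTRAL_SMALL_PARAMS = 24e9  # 24 billion parameters, per the announcement
RTX_4090_VRAM_GB = 24         # the RTX 4090 ships with 24 GB of VRAM

# Assumed precisions: 16-bit (unquantized), 8-bit, and 4-bit quantization.
for bits in (16, 8, 4):
    need = weight_memory_gb(DEVSTRAL_SMALL_PARAMS, bits)
    print(f"{bits:>2}-bit weights: ~{need:.0f} GB")
# 16-bit weights: ~48 GB
#  8-bit weights: ~24 GB
#  4-bit weights: ~12 GB
```

At 16 bits the weights alone exceed the card, and at 8 bits they would fill it completely, which suggests that a roughly 4-bit quantized build is what leaves room for the KV cache on a 24 GB GPU or a 32 GB Mac.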

My take: There are two things that make these models so interesting. First, smaller models are typically very well suited for fine-tuning as output generators for specific types of content in agentic setups, and Devstral Small is released under the Apache 2.0 license, meaning you can fine-tune it as much as you want! Unsloth already has a guide up on how to fine-tune Devstral Small, so go check it out if you are interested. Mistral also provides a fine-tuning API for Devstral Medium, so even though its weights are closed, it is a strong candidate for fine-tuning too. Second, the pricing of both models is super cheap. If you fine-tune one of these models, running it in agentic workflows is cheaper than running Gemini 2.5 Flash. If you are building agentic workflows and need a cheap model that’s very easy to fine-tune, why not start with Devstral Medium to see if it works for your needs? If it does, try scaling down to Devstral Small for virtually free local access.
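To put the per-token prices into numbers, here is a small Python cost calculator using the prices listed in the announcement. The workload (2 million input tokens and 500,000 output tokens for one agent run) is a made-up example, and the dictionary keys are just labels, not official API model identifiers.

```python
# USD per million tokens, as listed in the Devstral announcement.
PRICES_PER_MILLION = {
    "devstral-small-1.1": {"input": 0.1, "output": 0.3},
    "devstral-medium": {"input": 0.4, "output": 2.0},
}


def run_cost_usd(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of one run with the given token counts."""
    price = PRICES_PER_MILLION[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000


# Hypothetical agent run: agents re-send large code context, so input dominates.
for model in PRICES_PER_MILLION:
    print(f"{model}: ${run_cost_usd(model, 2_000_000, 500_000):.2f}")
# devstral-small-1.1: $0.35
# devstral-medium: $1.80
```

Because agentic coding workflows tend to re-send large amounts of repository context, the input price usually matters as much as the output price when comparing models.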


Microsoft Releases Phi-4-mini-flash-reasoning with 10x Speed Boost for Edge AI

https://azure.microsoft.com/en-us/blog/reasoning-reimagined-introducing-phi-4-mini-flash-reasoning/

The News:

- Microsoft released Phi-4-mini-flash-reasoning, a 3.8 billion parameter AI model designed for edge devices and mobile applications where compute power, memory, and latency are constrained.

- The model uses a new hybrid architecture called SambaY that features Gated Memory Units (GMU), which replace heavy cross-attention operations with simple element-wise multiplication between current input and memory states from earlier layers.

- Performance benchmarks show up to 10 times higher throughput and 2 to 3 times average reduction in latency compared to its predecessor Phi-4-mini.

- The model supports a 64,000 token context length and maintains linear prefill time complexity, enabling consistent performance even with long sequences.

- It runs on a single GPU and is available on Azure AI Foundry, NVIDIA API Catalog, and Hugging Face with MIT licensing for open access.

- Benchmark results show the model achieving 87.1% on Math-500, 78.4% on GPQA Diamond, and 34/40 on AIME 2025 tests.
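Based on the description above, a Gated Memory Unit mixes the current layer’s input with a memory state cached from an earlier layer using an element-wise product instead of attention. The pure-Python sketch below is a simplified illustration under stated assumptions: the SiLU gate, the two projection matrices `w_in` and `w_out`, and the function names are choices made for this example, not Microsoft’s implementation of SambaY.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Multiply matrix w (rows x cols) by vector x (length cols)."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def silu(v: float) -> float:
    """SiLU activation, used here as the gate nonlinearity (an assumption)."""
    return v / (1.0 + math.exp(-v))


def gated_memory_unit(x: Vector, memory: Vector, w_in: Matrix, w_out: Matrix) -> Vector:
    """Simplified GMU: gate = SiLU(W_in x), mixed = memory * gate (element-wise),
    output = W_out mixed. No attention scores over earlier tokens are computed;
    the memory vector is simply reused from an earlier layer."""
    gate = [silu(g) for g in matvec(w_in, x)]
    mixed = [m * g for m, g in zip(memory, gate)]
    return matvec(w_out, mixed)


x = [1.0, -0.5]
memory = [0.8, 0.2]  # stand-in for a state cached from an earlier layer
identity = [[1.0, 0.0], [0.0, 1.0]]
print(gated_memory_unit(x, memory, identity, identity))
```

In this sketch the per-token cost is a couple of small matrix-vector products, independent of how many tokens came before, whereas attention has to score the current token against every earlier one.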

My take: Phi-4-mini-flash-reasoning looks like a solid model with better performance than o1-mini on Math-500 benchmarks. So what should you use it for? Microsoft suggests that it’s perfect for classrooms, where the model’s small footprint (3.8B parameters) makes it possible to run on a tablet or smartphone without cloud connectivity. This enables things like math tutoring apps, homework helpers, and educational games that work anywhere. Other uses include quality control systems that need to reason through complex decision trees, or medical devices and diagnostic tools. I can see this model being integrated into lots of applications over the next couple of months.