Last week OpenAI announced that they are generating over $1 billion per month with over 700 million weekly active users. AI will soon be used daily by everyone with an Internet connection, and while most of today’s AI services can be used for free, they will soon become quite expensive. Microsoft launched “Copilot Mode” for their Edge browser last week, stating that the feature will be “free for a limited time” but will require a subscription after that. At the same time Anthropic announced new weekly limits for their $200-per-month Max subscription that will give “most users” three to five days of usage every week. In practice this means that if you are a Claude Opus 4 power user, your monthly cost from September will grow to at least $400 per month for dual subscriptions.

And this is probably where things are heading: Free AI for most people, where data is permanently saved by the AI companies so they can use it to train future models, Personal AI for end-users at reasonable price tiers ($20-$40), and Professional / Max / Ultra AI licenses that are currently at $200-$400 but will probably soon be way above $1,000 per month. Based on my own experience launching hundreds of software apps over the past decades, I believe that even now Claude Opus 4 is easily worth $1,000 per month for those who have the skills to use it. Right now, however, most developers do not have these skills, and this is why Meta is joining the large number of companies that require developers to showcase their AI skills during job interviews. I have said it many times before – if you are a developer who has not yet increased your productivity 2-10 times thanks to AI you’d better start now, or you will be obsolete in 2026.

Thank you for being a Tech Insights subscriber!

Listen to Tech Insights on Spotify: Tech Insights 2025 Week 32 on Spotify

THIS WEEK’S NEWS:

- OpenAI Disables ChatGPT Search Indexing After Personal Data Exposure

- Google Releases AI Model to Create Better Maps from Satellite Data

- Google DeepMind Releases Gemini 2.5 Deep Think

- Microsoft Introduces Copilot Mode for Edge Browser

- OpenAI Launches Study Mode

- NotebookLM Launches Video Overviews

- Anthropic Introduces Weekly Rate Limits for Claude Code Users

- OpenAI Launches Stargate Norway, First European AI Data Center

- Adobe Releases New AI-Powered Photoshop Features Including Harmonize and Generative Upscale

- Ideogram Launches Character Consistency Model Using Single Reference Photos

- Black Forest Labs and Krea AI Release FLUX.1 Krea

OpenAI Disables ChatGPT Search Indexing After Personal Data Exposure

The News:

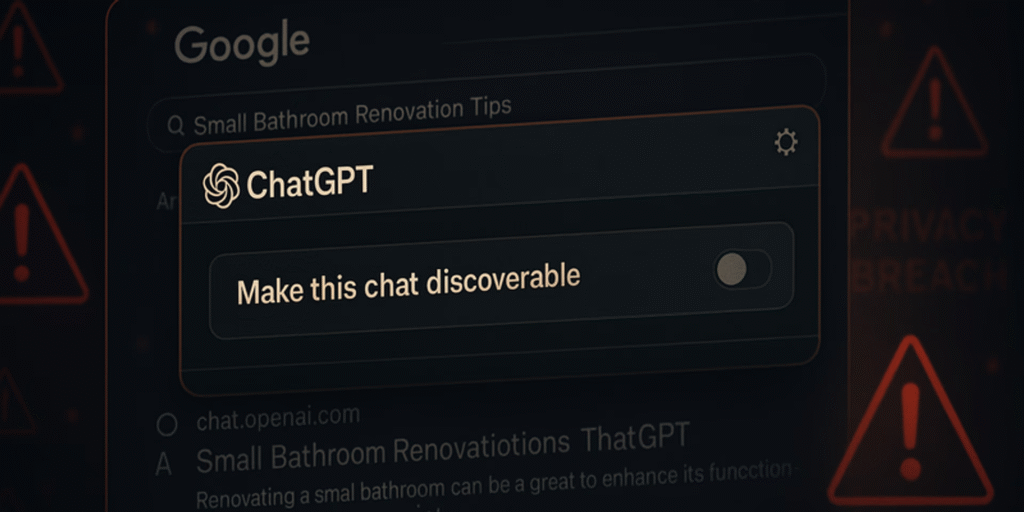

- OpenAI has disabled the feature that allowed shared ChatGPT conversations to be indexed by Google and other search engines, after thousands of public links exposed personal information including resumes, mental health discussions, and work-related content.

- The feature worked through a two-step process where users first clicked the “share” button to create a public link, then checked a box to make the conversation “discoverable” by search engines.

- Fast Company found over 4,500 publicly indexed ChatGPT conversations accessible through simple Google searches using “site:chatgpt.com/share” queries.

- Some conversations contained personally identifiable information, with one researcher easily finding a user’s LinkedIn profile based on details in their shared resume rewrite request.

- OpenAI confirmed it was “a short-lived experiment to help people discover useful conversations” but acknowledged it “introduced too many opportunities for folks to accidentally share things they didn’t intend to”.

My take: If you look at all the news titles for this one it’s enough to make everyone afraid: “OpenAI pulls chat sharing tool after Google search privacy scare”, “ChatGPT users shocked to learn their chats were in Google search results”, and so on. Now, users actually had to BOTH make a chat publicly available AND explicitly check a box to make the chat appear in search engine results. If the users who shared a chat AND checked the box to make it discoverable by search engines are now “shocked” to learn that their chats were indexed, then I guess we all can learn a lot from this. From now on I will always assume people will click every button they see without understanding at all what it does, and I think you should consider this too. For companies this means that if your employees use AI tools, you need to be absolutely sure they are using licensed tools where all data is kept private.

Google Releases AI Model to Create Better Maps from Satellite Data

The News:

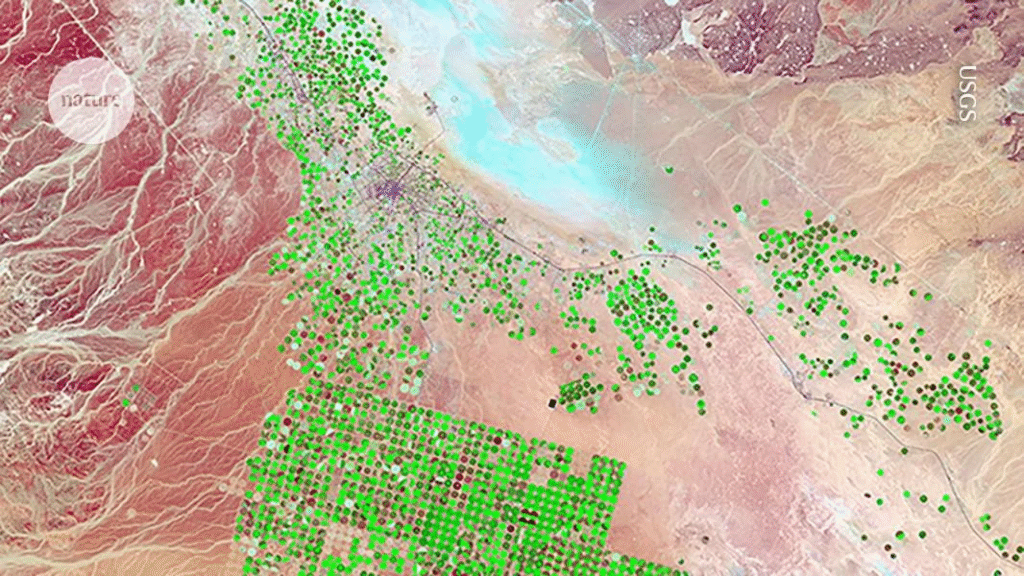

- Google just introduced AlphaEarth Foundations, an AI model that processes petabytes of Earth observation data into compact digital representations, saving scientists from spending “tens to hundreds of days” processing satellite data and enabling on-demand mapping of land and coastal waters.

- The model combines optical satellite images, radar, 3D laser mapping, and climate data into 10×10 meter grid squares, creating data summaries that require 16 times less storage than comparable AI systems while reducing analysis costs for planetary-scale monitoring.

- During testing, AlphaEarth Foundations achieved 24 percent lower error rates compared to other models, performing particularly well when training data was limited, which is common in remote sensing applications.

- Google released the Satellite Embedding dataset containing 1.4 trillion embedding footprints per year through Google Earth Engine with over 50 organizations already using it for ecosystem classification, biodiversity conservation, and tracking deforestation and agricultural changes.

- The model has successfully mapped challenging terrain including Antarctica and Canadian agricultural practices invisible to the naked eye, while penetrating cloud cover in Ecuador to reveal agricultural fields at different development stages.

My take: The innovation here is how AlphaEarth Foundations is able to combine multiple types of Earth observation data (optical images, radar, 3D laser mapping, climate data) into a unified representation for more complete pictures of any location. Traditional satellite mapping systems typically only process single data sources one by one. Like most other innovations of the past year, AlphaEarth Foundations uses a transformer-based architecture with specialized adaptations for geospatial data. The core of the system is something called a Space Time Precision (STP) encoder that processes information across three key dimensions: space (understanding local patterns), time (tracking changes over periods), and measurements (integrating different data types). The result is a system that can be used by researchers worldwide to analyze planetary-scale changes without the traditional computational barriers. The dataset of 1.4 trillion embedding footprints per year enables consistent global monitoring of deforestation, urban expansion, and agricultural changes, and the use cases are virtually unlimited.

Google DeepMind Releases Gemini 2.5 Deep Think

https://blog.google/products/gemini/gemini-2-5-deep-think

The News:

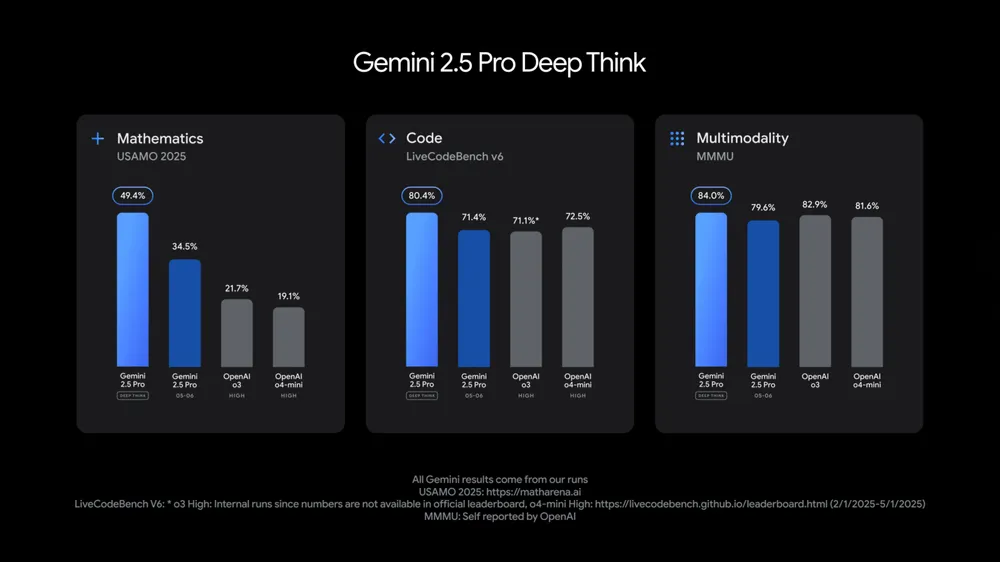

- Google DeepMind released Gemini 2.5 Deep Think, an advanced reasoning model that uses extended, parallel thinking and reinforcement learning techniques to solve complex problems more effectively.

- The model leads on key benchmarks, scoring 35 points on International Mathematical Olympiad problems, which is a gold-medal standard, and 83.0% on AIME 2025 math problems.

- Deep Think processes information through a 1 million token context window (expanding to 2 million), allowing it to handle entire code repositories, lengthy documents, and complex multi-step reasoning tasks.

- The model scored 63.8% on SWE-Bench Verified for agentic coding tasks and can generate complete video games from single-line prompts, demonstrating advanced code generation capabilities.

- Google positioned Gemini 2.5 as their “most intelligent AI model” that tops the LMArena leaderboard by significant margins in human preference evaluations.

My take: I ran Gemini 2.5 Deep Think on one of my code bases (around 1 MB source code, ~200k tokens) and it found several things to optimize that Claude Code did not find. If your organization allows the use of Google Gemini then this is an amazing tool to have when analyzing and optimizing large code modules. While Claude Code is amazingly good at writing high quality source code if you prompt it properly, its limited context window (200k tokens) often makes it struggle to understand complex workflows and scenarios. For most cases this is not a problem since you as a developer can steer it in the right direction, but if you have complex applications and want to analyze optimizations then having a huge context window of 2 million tokens makes a significant difference.
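To make the context window comparison concrete, here is a minimal sketch of the arithmetic. The ratio of 5 bytes per token is an assumption derived from the rough figures above (about 1 MB of source code for about 200k tokens), and the 20% reserve for the prompt and the answer is a hypothetical margin, not a documented limit of any model.

```python
# Rough context-window check. The 5 bytes-per-token ratio is an assumption
# derived from the figures above (about 1 MB of source ~ 200k tokens).

def estimate_tokens(num_bytes: int, bytes_per_token: float = 5.0) -> int:
    """Estimate the token count of source code from its size in bytes."""
    return round(num_bytes / bytes_per_token)


def fits_in_context(tokens: int, window: int, reserve: float = 0.2) -> bool:
    """Return True if the tokens fit while leaving `reserve` of the window
    free for the prompt and the model's answer (hypothetical margin)."""
    return tokens <= window * (1 - reserve)


codebase_tokens = estimate_tokens(1_000_000)  # about 1 MB of source code

# Compare the window sizes mentioned in this section.
for name, window in [("200k", 200_000), ("1M", 1_000_000), ("2M", 2_000_000)]:
    print(name, fits_in_context(codebase_tokens, window))
```

Under these assumptions the code base fills a 200k window completely and leaves no room for instructions or output, while the 1 million and 2 million token windows hold it with plenty of space to spare.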

Microsoft Introduces Copilot Mode for Edge Browser

The News:

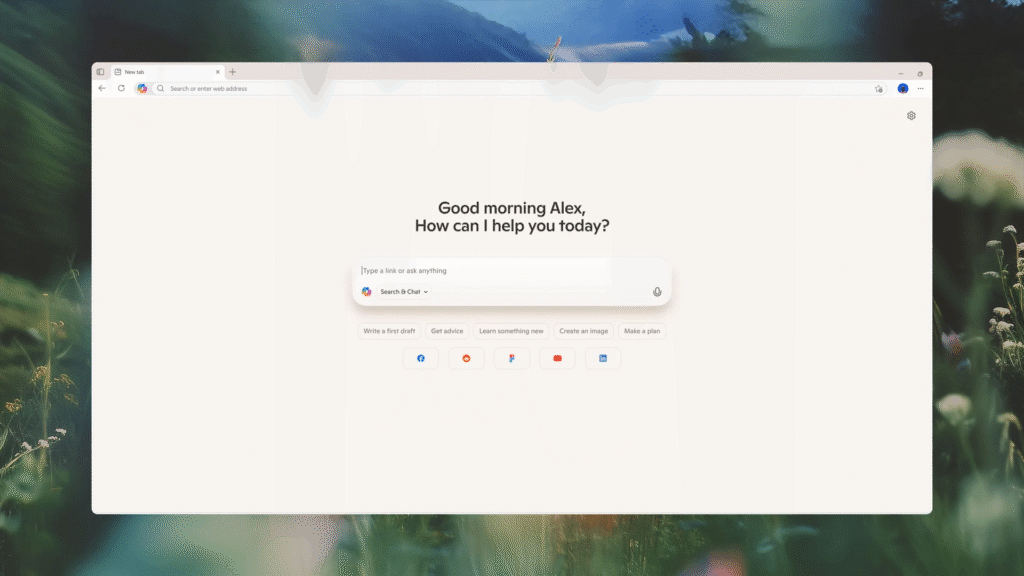

- Microsoft launched Copilot Mode, which transforms their Edge web browser from a traditional browser into an intelligent browsing assistant, helping users accomplish tasks more efficiently by analyzing context across multiple tabs.

- The feature replaces the standard new tab page with a single input box that combines chat, search, and web navigation functions, allowing users to interact through both text and voice commands.

- Copilot analyzes all open tabs simultaneously to compare information across multiple websites, such as comparing vacation rentals to identify which property is closest to the beach and includes a full kitchen.

- Voice navigation enables users to speak directly to Copilot for tasks like locating information on a page or opening comparison tabs between products.

- Microsoft plans to add advanced capabilities that let Copilot access browsing history and stored credentials to perform tasks like booking reservations and managing errands on users’ behalf.

- The feature is currently free and opt-in for Edge users on Windows and Mac in all Copilot markets, though Microsoft indicated this free access is temporary.

My take: Microsoft says that this functionality will be “free for a limited time”, and you can clearly see where all this is going. Microsoft is going to add AI to every single product they ship, and it will be free at first and later come with a subscription. I cannot help but wonder how this will affect their product development going forward. There are still so many quirks and weird things in Windows 11 that I would like to see fixed, but now they are instead going all-in adding AI features into every single aspect of both Windows and all their products. There is a risk that the user experience for “non-paying” AI users will get worse, since they will be left with patched products that are kept on life support while all new features go to those who are willing to pay a monthly subscription for them.

OpenAI Launches Study Mode

https://openai.com/index/chatgpt-study-mode

The News:

- OpenAI introduced study mode in ChatGPT, a learning tool that guides students through problems step-by-step rather than providing direct answers. The feature aims to support deeper learning by encouraging active participation and critical thinking.

- Study mode uses “Socratic questioning”, hints, and self-reflection prompts to help students work through problems.

- The system breaks down complex information into manageable sections and adjusts difficulty based on student skill level. It includes knowledge checks with quizzes and personalized feedback to track progress.

- Students can toggle study mode on and off during conversations for flexibility. The feature is available to all ChatGPT users including Free, Plus, Pro, and Team tiers, with ChatGPT Edu access coming in the next few weeks.

- OpenAI developed the feature with teachers, scientists, and pedagogy experts based on learning science research.

My take: My own kids use ChatGPT every day when doing their homework, and it has been super helpful to always have someone to ask when getting stuck. This new study mode looks even better – what previously required a special prompt technique will now be enabled by default. Using AI for learning new skills is one of the best use cases we have for it, and I hope most schools understand this and invest in proper licenses so students do not have to be rate limited using the free versions.

NotebookLM Launches Video Overviews

https://blog.google/technology/google-labs/notebooklm-video-overviews-studio-upgrades

The News:

- Google’s NotebookLM now generates Video Overviews, creating narrated slideshows from user documents to complement its existing Audio Overviews.

- The feature transforms uploaded materials like PDFs, notes, and images into visual presentations with AI-generated slides, pulling in diagrams, quotes, and data from source documents.

- Video Overviews are customizable, allowing users to specify focus topics, learning goals, and target audiences through prompts like “I know nothing about this topic; help me understand the diagrams in the paper”.

- The new Studio panel allows users to create multiple Audio and Video Overviews from the same notebook, enabling different language versions or role-specific presentations.

- Video Overviews are available in English to users over 18, with additional languages and formats planned for future releases.

My take: If you need a good starting point for your next presentation then this is it. Just upload your working document to NotebookLM and you will have a great starting point that you can work on, refine and publish. And if you are a student – just upload the material you want to learn more about and ask NotebookLM to create a presentation for you. NotebookLM is quickly becoming the tool of choice for both teachers and students who want to quickly create summaries and presentations from large amounts of study material in an easy-to-digest format. If you have not yet tried NotebookLM you should. It’s great.

Anthropic Introduces Weekly Rate Limits for Claude Code Users

https://techcrunch.com/2025/07/28/anthropic-unveils-new-rate-limits-to-curb-claude-code-power-users/

The News:

- Anthropic announced new weekly rate limits for Claude Code starting August 28, targeting users who run the AI coding tool “continuously in the background, 24/7” and those violating usage policies by sharing accounts or reselling access.

- The limits affect Claude Pro ($20/month) and Max ($100-200/month) subscribers, with existing five-hour reset limits remaining unchanged.

- Two new weekly limits will reset every seven days: one for overall usage and another specifically for Claude Opus 4, Anthropic’s most advanced model.

- According to Anthropic, based on current usage patterns less than 5% of subscribers will be affected.

- Subscribers to the $200-per-month Max plan can expect 24 to 40 hours of Opus 4 every week, although usage may vary based on codebase size.

My take: So if you are on the Max plan now at $200 per month, what Anthropic is saying is that you will no longer be able to use Opus 4 for a full working week. Instead you will get somewhere between 3 and 5 days of usage from it. I am using Claude Opus 4 myself every day, and it’s amazing if you know how to control it. The net result here is that the monthly price for using Claude Opus 4 just went up, from $200 to $400, if you are a full-time AI programmer. Still, there aren’t really any alternatives to Claude Code when it comes to programming efficiency. Maybe that is why even OpenAI is using Claude Code in their daily work: Anthropic just announced that they revoked access to Claude Code for OpenAI because “OpenAI’s own technical staff were using our coding tools ahead of the launch of GPT-5”.
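The numbers behind this estimate can be sketched in a few lines. This is only my own back-of-the-envelope arithmetic, assuming an 8-hour working day and a 40-hour working week. Those two constants are assumptions, not something Anthropic has stated, while the 24 to 40 hours and the $200 price come from the announcement above.

```python
import math

WORKDAY_HOURS = 8      # assumption: one working day is 8 hours
WORKWEEK_HOURS = 40    # assumption: a full-time week is 40 hours
MAX_PLAN_PRICE = 200   # USD per month for the Max plan


def days_of_usage(opus_hours_per_week: float) -> float:
    """Convert weekly Opus 4 hours into 8-hour working days."""
    return opus_hours_per_week / WORKDAY_HOURS


def monthly_cost_full_time(opus_hours_per_week: float) -> int:
    """Cost of enough Max subscriptions to cover a full working week."""
    subscriptions = math.ceil(WORKWEEK_HOURS / opus_hours_per_week)
    return subscriptions * MAX_PLAN_PRICE


# Low and high end of the range Anthropic gave for the $200 plan.
for hours in (24, 40):
    print(hours, days_of_usage(hours), monthly_cost_full_time(hours))
```

With these assumptions, 24 hours per week is three working days and requires two subscriptions ($400) to cover a full week, while at the upper end of 40 hours a single subscription would still be enough. The $400 figure therefore corresponds to the low end of Anthropic’s range.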

OpenAI Launches Stargate Norway, First European AI Data Center

https://www.wsj.com/tech/ai/openai-strikes-partnership-to-bring-stargate-to-europe-169a38b3

The News:

- OpenAI launched Stargate Norway, its first AI data center project in Europe under the OpenAI for Countries program, delivering 230MW capacity with 100,000 NVIDIA GPUs by late 2026, enabling European researchers and startups to access AI compute without relying on overseas infrastructure.

- The $1 billion facility is located near Narvik in northern Norway, chosen for its abundant hydropower, cool climate, and power prices below European averages.

- The data center runs entirely on renewable hydropower and uses closed-loop, direct-to-chip liquid cooling for maximum efficiency, with excess heat supporting low-carbon regional enterprises.

- Nscale Global Holdings and Aker ASA own the facility through a 50/50 joint venture, each contributing approximately $1 billion to the initial 20MW phase, with plans to expand by an additional 290MW in future phases.

- The facility will use NVIDIA’s GB300 Superchip processors connected through NVLink network technology, positioning it among the first AI gigafactories in Europe.

My take: Congratulations to Norway for becoming one of the AI powerhouses in Europe. Hydropower provides reliable, clean and continuous power, which is perfect for AI infrastructure. The climate is also a key reason for the choice of location, with Sam Altman stating that they found ideal conditions in Narvik “with clean, affordable energy, ideal climate, and great partners”. I believe the increasing growth of AI usage will have many countries re-evaluating their choices of energy sources – otherwise most European countries will soon be 100% dependent on other countries for all their daily operations.

Adobe Releases New AI-Powered Photoshop Features Including Harmonize and Generative Upscale

The News:

- Adobe introduces new AI-powered Photoshop features aimed at reducing tedious editing tasks and accelerating creative workflows for photographers, designers, and content creators.

- Harmonize feature enables automatic blending of new objects into compositions by analyzing surrounding context and adjusting color, lighting, shadows, and visual tone in a few clicks. The tool addresses time-consuming manual adjustments for tasks like creating surreal landscapes or campaign visuals.

- Generative Upscale enhances image resolution up to 8 megapixels without sacrificing clarity, targeting photographers who need to refine edits for print or adapt assets across different platforms.

- Improved Remove tool eliminates unwanted elements while generating realistic replacement content with better quality and fewer artifacts compared to previous versions. Examples include removing power lines or cleaning portrait backdrops.

- Projects feature organizes creative work in shared spaces to eliminate scattered files and streamline collaboration by allowing entire collections to be shared at once.

- The new Gen AI Model Picker allows users to choose between Firefly Image Model 1 and Firefly Image 3 for Generative Fill and Generative Expand tools.

My take: The new Harmonize feature is crazy good – it fixes shadows, lighting, reflections and so much more. If you have ever worked with composites in Photoshop you know how many hours it takes to achieve results that Harmonize now delivers in seconds. The current model for generative fill and expand is quite bad for cartoon-style images, so being able to switch between models for these tools is very welcome. The new Generative Upscale is also a direct competitor to Topaz Labs, famous for their AI upscaler Gigapixel 8.

Ideogram Launches Character Consistency Model Using Single Reference Photos

https://about.ideogram.ai/character

The News:

- Ideogram Character allows users to generate consistent character variations from a single reference photo, addressing the challenge of maintaining character identity across multiple AI-generated images.

- The tool automatically detects and masks face and hair from input images, enabling users to place characters in new scenes while preserving facial features and appearance.

- Users can customize the character mask to include or exclude clothing and hair, providing control over which elements remain consistent across generations.

- The feature integrates with existing Ideogram tools including Magic Fill for advanced face placement, Describe for style replication, and Remix for style matching with adjustable weight controls.

- Initially available on Ideogram Plus and Pro plans, the company is offering free access to all users during the launch period.

My take: This was fun, and it’s one of the few services you can still use to turn yourself into a superhero. Most other tools will refuse to create copyrighted styles. The end result is OK but not great. It’s free right now, so if you want to turn yourself into Wonder Woman or Superman this is your chance!

Black Forest Labs and Krea AI Release FLUX.1 Krea

https://bfl.ai/announcements/flux-1-krea-dev

The News:

- Black Forest Labs and Krea AI released FLUX.1 Krea [dev], an open-weights text-to-image model designed to generate more realistic images without the oversaturated appearance typical of AI-generated content.

- The model uses an “opinionated” approach that produces diverse outputs with a distinctive aesthetic style, aiming to surprise users with visually interesting results.

- FLUX.1 Krea [dev] matches the performance of closed solutions like FLUX1.1 [pro] in human preference assessments while outperforming previous open text-to-image models.

- The model maintains architectural compatibility with the existing FLUX.1 [dev] ecosystem, allowing developers to integrate it into current workflows.

- Model weights are available through BFL’s HuggingFace repository, with commercial licensing through BFL’s Licensing Portal and API access via partners including FAL, Replicate, Runware, DataCrunch and TogetherAI.

My take: Unless you have been following Black Forest Labs this news item probably sounds like a foreign language. Black Forest Labs (BFL) was founded in 2024 by Robin Rombach, Andreas Blattmann, and Patrick Esser, former employees of Stability AI. Krea AI was founded in 2022 by Victor Perez and Diego Rodriguez. Today Krea AI is famous for using the foundation models created by BFL and fine-tuning them to create content that does not have that “AI generated style”. Krea has one model today: Krea 1, and BFL has three models: dev, pro and schnell, where dev is the smallest open-source version and schnell is a quicker model. The new model FLUX.1 Krea [dev] is a collaboration between BFL and Krea and is released as open weights, which means that anyone can use it for free locally for non-commercial purposes! If you are working with AI image content generation, have been put off by expensive API costs (like with GPT Image 1, where each 1536×1024 image costs $0.25 to generate) and are tired of that AI-generated look in your content, then FLUX.1 Krea [dev] should be at the top of your list!