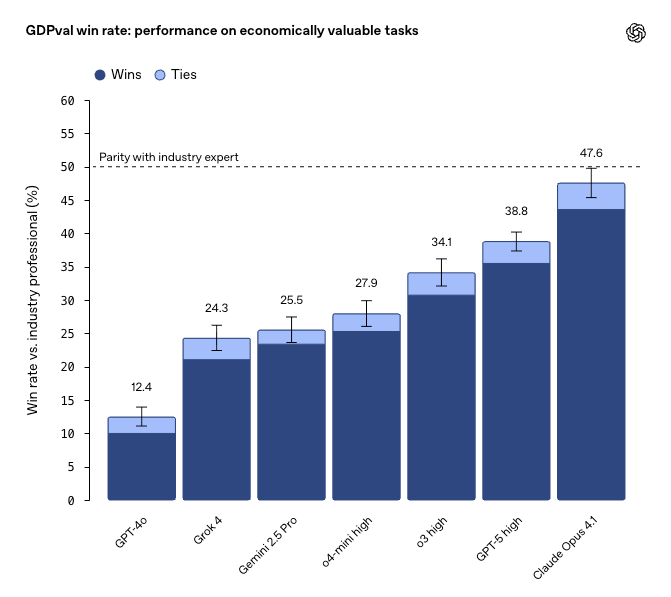

Just how good are today’s state-of-the-art AI models at doing normal office work? To test this, OpenAI just introduced GDPval, a new benchmark that measures AI model performance on real work tasks against professionals with an average of 14 years of experience. Last year’s best model, GPT-4o, matched or beat the human experts in only 13.7% of all tests. Today’s best model, Claude Opus 4.1, now matches or beats them in 47.6% of all tests. According to OpenAI, the performance of AI models on these everyday tasks has improved almost linearly over the past 18 months, and the trend is expected to continue in the near future.

Now, this benchmark only measures a single model doing one task at a time. This means that if you deploy an agentic system where several models work together, you will end up with an AI system that does the job much better than almost any human with 14 years of experience. Now also consider that current AI models completed the tasks roughly 100 times faster than the human experts. I feel like I’m repeating myself, but we have really crossed the line where agentic AI systems are both better at doing our work and do it 100 times faster. At this rate, a single AI model will do most of your work tasks better than you within a year, so think a bit about what this means for you and the work you are doing. What skills do you need to improve in a world where AI does all routine work for you instantly?

This past week I continued my side gig with my app Notebook Navigator. It has 60,000 downloads so far, and I have added features like shortcuts, recent notes, manual sort order, a new icon pack, customizable hotkeys, file icons, and much more. These are all features that would have taken a regular development team several weeks, if not months, to finish, and I wrapped them up in my spare time in less than a week. Everything is 100% AI generated, documented and tested. If you are curious about the source code quality of today’s best AI models (currently GPT5-CODEX-HIGH), just check the repo; it’s all open source. You will not get better code than this from any developer.

The quality is here and the scaling factors are here. It’s time to start rewiring your company!

Thank you for being a Tech Insights subscriber!

Listen to Tech Insights on Spotify: Tech Insights 2025 Week 40 on Spotify

THIS WEEK’S NEWS:

- OpenAI Introduces GDPval: New Benchmark for Real-World AI Performance Across 44 Occupations

- ChatGPT Pulse Launches as Proactive AI Assistant for Pro Users

- Google DeepMind Releases Gemini Robotics 1.5 with Thinking and Cross-Robot Learning

- Google Launches Chrome DevTools MCP Server for AI Agent Browser Debugging

- Microsoft Adds Claude 4 Models to Copilot for Enterprise Users

- Google Reports 90% Developer AI Adoption in Workplace

- GitHub Copilot CLI Launches Public Preview for Terminal AI Development

- Perplexity Launches Search API for Global Web Index Access

- Google Updates Gemini 2.5 Flash with Enhanced Tool Use and Efficiency

OpenAI Introduces GDPval: New Benchmark for Real-World AI Performance Across 44 Occupations

https://openai.com/index/gdpval

The News:

- OpenAI released GDPval, a benchmark that measures AI model performance on real professional tasks across 44 occupations in nine GDP-contributing industries including healthcare, finance, manufacturing, and government.

- The evaluation uses 1,320 tasks created by professionals with an average of 14 years of experience, including deliverables like legal briefs, engineering blueprints, customer support conversations, and nursing care plans.

- GDPval tests multimodal capabilities requiring models to process reference files and produce documents, slides, diagrams, spreadsheets, and multimedia outputs rather than simple text responses.

- Claude Opus 4.1 scored highest with a 47.6% win/tie rate against human experts, while GPT-5 achieved 40.6%. OpenAI also reports that frontier models completed the tasks roughly 100x faster and 100x cheaper than the experts, counting only model inference time and API costs.

- OpenAI found that performance nearly tripled from GPT-4o (13.7% win/tie rate in spring 2024) to GPT-5 (40.6% in summer 2025), following a roughly linear improvement trend.
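The headline numbers are win/tie rates: the share of blinded comparisons where graders judged the model’s deliverable better than, or as good as, the expert’s. A minimal sketch of that arithmetic, assuming each comparison yields exactly one verdict of "win", "tie" or "loss" (the verdict labels and the sample data are hypothetical, not GDPval’s actual grading format):

```python
from collections import Counter

VALID_VERDICTS = {"win", "tie", "loss"}  # hypothetical labels, one per model-vs-expert comparison


def win_tie_rate(verdicts: list[str]) -> float:
    """Share of comparisons where the model was judged better than or equal to the expert."""
    counts = Counter(verdicts)
    unknown = set(counts) - VALID_VERDICTS
    if unknown:
        raise ValueError(f"unexpected verdicts: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no verdicts to score")
    return (counts["win"] + counts["tie"]) / total


# Hypothetical grader verdicts for five tasks
sample = ["win", "tie", "loss", "loss", "win"]
print(f"win/tie rate: {win_tie_rate(sample):.1%}")  # prints "win/tie rate: 60.0%"
```

Counting ties as successes is why the metric reads as "nearly equal to experts"; a win-only rate computed from the same verdicts can never be higher.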

My take: If you still haven’t started transforming your company with AI, please begin your week by reading this article. Seriously. Today’s AI models are nearly EQUAL in performance to senior professionals with an average of 14 years of experience across 44 different occupations. And the performance of AI models on these types of tasks has grown linearly for the past 18 months. This means that in less than one year, if you still use humans for most tasks, you will be (1) working at least 100 times slower than you should be, and (2) getting much worse results than you would if you had AI agents doing most of the work.

Do you still believe we will spend most of our time in Excel and Word in 1-2 years? Just follow the curve: I don’t think anyone will do any kind of routine work in 2-3 years. Sure, you might disagree because you are still struggling to roll out the first batch of Copilot licenses to your employees, but once AI agents are better than any human in any profession, you bet corporate management will be very quick to push the big reform button.

My recommendation to any company is: (1) Partner up with an AI specialist company (like TokenTek) that can help you get started with an AI policy, so you have all the prerequisites in place to start building agentic solutions. Then (2) start building agentic AI solutions, anything really, so you can develop this critical skill within your company. Then (3) give 2-3 of your most senior software engineers access to both OpenAI Pro and Anthropic 20x Max accounts. It’s expensive but worth it. Next year you will build software completely differently, but getting up to speed takes time.


ChatGPT Pulse Launches as Proactive AI Assistant for Pro Users

https://openai.com/index/introducing-chatgpt-pulse

The News:

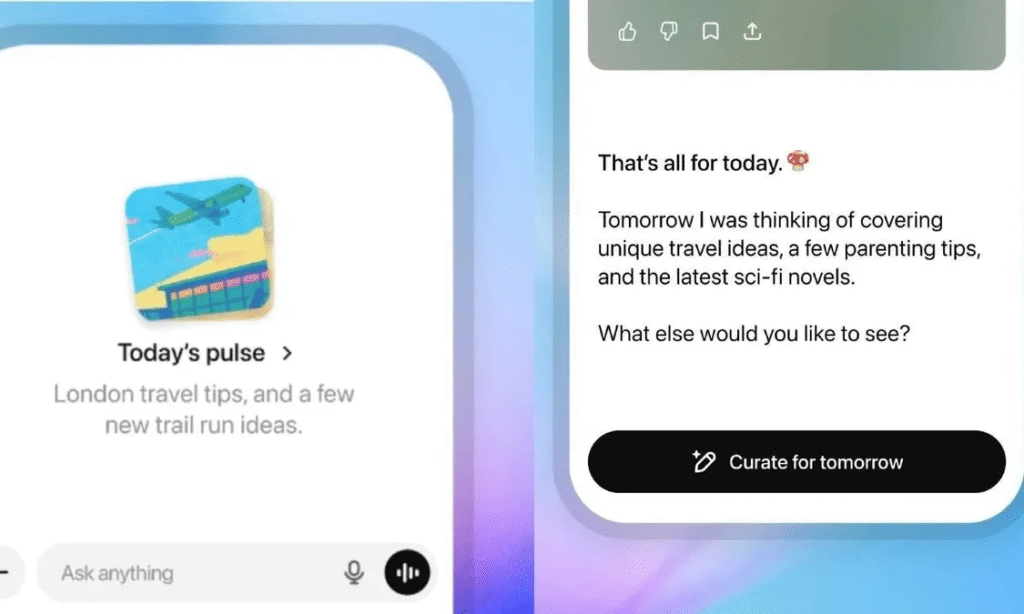

- OpenAI releases ChatGPT Pulse exclusively for Pro users on mobile, shifting from reactive question-answering to proactive daily research and updates.

- Pulse analyzes chat history, user feedback, and connected Gmail or Google Calendar accounts overnight to generate personalized visual cards each morning.

- Daily briefings include follow-ups on discussed topics, practical suggestions like dinner recipes, and progress steps toward long-term goals such as marathon training.

- Users can curate content by requesting specific research topics and providing thumbs up or down feedback to improve future suggestions.

- The feature runs safety checks on all content, and updates are only available for the current day unless saved, which is meant to prevent endless scrolling.

My take: I think it’s becoming clearer every month that a single AI provider is not the right choice for every coworker in every company. I have used Pulse the past few days and it is AMAZING. ChatGPT knows about the things I am working on right now, and can give me the news and updates I need on those topics when I wake up in the morning. This is also why Microsoft is building their own large language models: if they want to stay competitive, they cannot just resell other companies’ models. They need services like Pulse to stay competitive, but these services only work on the latest and greatest models, which are too expensive to fit into a Copilot subscription (since Microsoft doesn’t own the models themselves).

If you are a developer or work with marketing, sales or business innovation, then ChatGPT Pro is probably by far the best option for you in every category. It’s very expensive, but you get the best model for software development (GPT5-CODEX-HIGH), the best tools like Codex, and now also the best services like Pulse. The main advantage Microsoft has with M365 Copilot is its integration in Office, but if people use Office less and less, that advantage is slowly slipping away too.

Google DeepMind Releases Gemini Robotics 1.5 with Thinking and Cross-Robot Learning

https://deepmind.google/discover/blog/gemini-robotics-15-brings-ai-agents-into-the-physical-world

The News:

- Google DeepMind upgraded its March 2025 robotics models with Gemini Robotics 1.5, introducing thinking capabilities that generate internal reasoning sequences before taking action.

- The new models show their thought process in natural language, explaining decisions like “I need to grasp this object carefully because it appears fragile” before executing motor commands.

- Gemini Robotics-ER 1.5 can now search the Internet during tasks, looking up local recycling guidelines to properly sort waste into compost, recycling, and trash bins.

- Cross-robot learning enables skills trained on one robot type to transfer automatically to completely different embodiments without retraining, including humanoid robots.

- Developers can now access Gemini Robotics-ER 1.5 through Google AI Studio’s API, with tunable thinking budgets that balance speed versus accuracy.

- The system achieves state-of-the-art performance on 15 spatial understanding benchmarks and introduces precise 2D point generation for object location.
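The 2D points are only useful once they are mapped back onto the camera image. A minimal sketch, assuming the model returns a JSON list of objects whose `point` is given as `[y, x]` normalized to a 0-1000 range (our reading of Google’s Gemini Robotics-ER documentation; verify the format against the current docs before relying on it). The sample response is hypothetical:

```python
import json
from dataclasses import dataclass


@dataclass
class PixelPoint:
    label: str
    x: int
    y: int


def to_pixels(response_text: str, width: int, height: int) -> list[PixelPoint]:
    """Convert points in the assumed [y, x], 0-1000 normalized format to pixel coordinates."""
    points = []
    for item in json.loads(response_text):
        y_norm, x_norm = item["point"]  # assumed order: y first, then x
        points.append(
            PixelPoint(
                label=item.get("label", ""),
                x=round(x_norm / 1000 * width),
                y=round(y_norm / 1000 * height),
            )
        )
    return points


# Hypothetical model output for a 640x480 camera frame
response = '[{"point": [500, 250], "label": "banana"}, {"point": [120, 900], "label": "compost bin"}]'
for p in to_pixels(response, width=640, height=480):
    print(p)
```

Keeping the conversion in one helper makes it easy to adjust if the actual coordinate convention differs from this assumption.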

My take: Coming right after OpenAI’s GDPval benchmark, which shows that today’s best AI models are nearly as good as professionals with 14 years of experience, it’s easy to understand why Google focuses so strongly on robots. Just follow the trajectory, and within a few years you will have robots that do anything a human can do, but with much higher quality, 100 times faster, and 24 hours per day, every day. Giving these robots Internet access also means they will be able to adapt to any possible situation. We’re not there yet, but we are not far from a breaking point where machines beat us both intellectually and physically at any given task: intellectually maybe next year, physically maybe in 2-3 years.

Google Launches Chrome DevTools MCP Server for AI Agent Browser Debugging

https://developer.chrome.com/blog/chrome-devtools-mcp?hl=en

The News:

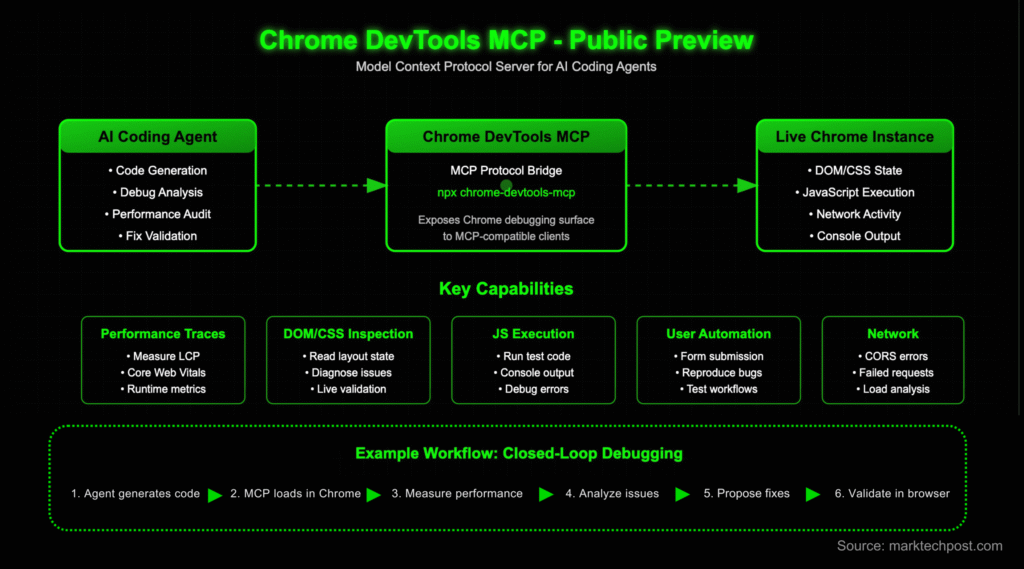

- Google released a public preview of Chrome DevTools Model Context Protocol (MCP) server, connecting AI coding assistants to live Chrome browser debugging capabilities.

- AI agents can now record performance traces, inspect DOM and CSS, read console logs, analyze network requests, and automate user interactions within Chrome DevTools.

- The server provides 26 debugging tools including performance_start_trace for recording website performance data and suggesting optimizations based on actual browser measurements.

- Installation requires adding a single configuration entry to MCP clients: `"command": "npx", "args": ["chrome-devtools-mcp@latest"]`.

- The tool addresses the core limitation where “coding agents face a fundamental problem: they are not able to see what the code they generate actually does when it runs in the browser”.
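Many MCP clients read a JSON file with a top-level `mcpServers` object. A minimal sketch that adds the entry quoted above to such a file while keeping any servers already configured; the file path and the server name `chrome-devtools` are assumptions, so check where and how your client stores its configuration:

```python
import json
from pathlib import Path

SERVER_NAME = "chrome-devtools"  # assumed name; any unique key works
SERVER_ENTRY = {"command": "npx", "args": ["chrome-devtools-mcp@latest"]}


def add_chrome_devtools(config: dict) -> dict:
    """Add (or overwrite) the Chrome DevTools MCP entry, keeping other servers intact."""
    servers = config.setdefault("mcpServers", {})
    # Copy the entry so later edits to one config never mutate the shared template
    servers[SERVER_NAME] = dict(SERVER_ENTRY, args=list(SERVER_ENTRY["args"]))
    return config


def update_config_file(path: Path) -> dict:
    """Read an existing client config (if any), add the entry, and write it back."""
    config = json.loads(path.read_text()) if path.exists() else {}
    add_chrome_devtools(config)
    path.write_text(json.dumps(config, indent=2) + "\n")
    return config


# Hypothetical path; replace with your MCP client's actual config file
# update_config_file(Path("mcp.json"))
```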

My take: This one is quite useful! If you are creating web-based apps and want to debug specific tasks, you can now install this MCP server, and your AI agent will be able to click around, drag elements, upload files, navigate, read console logs and even query all DOM elements in a Chrome web browser! Previously most developers used Microsoft Playwright MCP for this, and a few months ago a third-party DevTools implementation was launched on GitHub. But this is the official version, and it integrates directly with Chrome’s native debugging infrastructure. Super handy if you are a web developer, and as AI agents become more powerful, your everyday job will become much more efficient.

Microsoft Adds Claude 4 Models to Copilot for Enterprise Users

The News:

- Microsoft 365 Copilot now includes Anthropic’s Claude Sonnet 4 and Claude Opus 4.1 alongside existing OpenAI models, giving enterprise customers a choice of models for AI-powered work tasks.

- Claude Opus 4.1 powers the Researcher agent, which handles complex research tasks across web content and internal Microsoft 365 data including emails, meetings, and files.

- Both Claude models are available in Copilot Studio for building enterprise AI agents, allowing organizations to mix and match models from Anthropic, OpenAI, and Azure’s model catalog for specialized tasks.

- Access requires admin approval through the Microsoft 365 admin center and is currently limited to Frontier Program participants who opt in to the preview.

- Anthropic models remain hosted on Amazon Web Services outside Microsoft’s infrastructure and are subject to Anthropic’s terms of service rather than Microsoft’s data protection agreements.

My take: I was genuinely surprised that Claude accessed through Copilot is still hosted on Amazon and is still subject to Anthropic’s terms of service rather than Microsoft’s data protection agreements. I fully understand why most companies choose the safe M365 Copilot route, since top management then doesn’t have to approve new services and API endpoints. But this clearly shows that it is not enough. There’s a reason why Microsoft added Claude to M365 Copilot, and the reason is that Claude is much better than ChatGPT at writing technical articles. If your coworkers work primarily with technical reports, Claude will give them better results. So if your company works with product development, you should make sure your employees can access Anthropic, OpenAI and Mistral through API services. This makes it possible for you to use Claude either directly or through M365 Copilot, and it also paves the way for future agentic services built with a mix of different service providers (at TokenTek we often use all three in our agentic workflows).

Google Reports 90% Developer AI Adoption in Workplace

https://blog.google/technology/developers/dora-report-2025

The News:

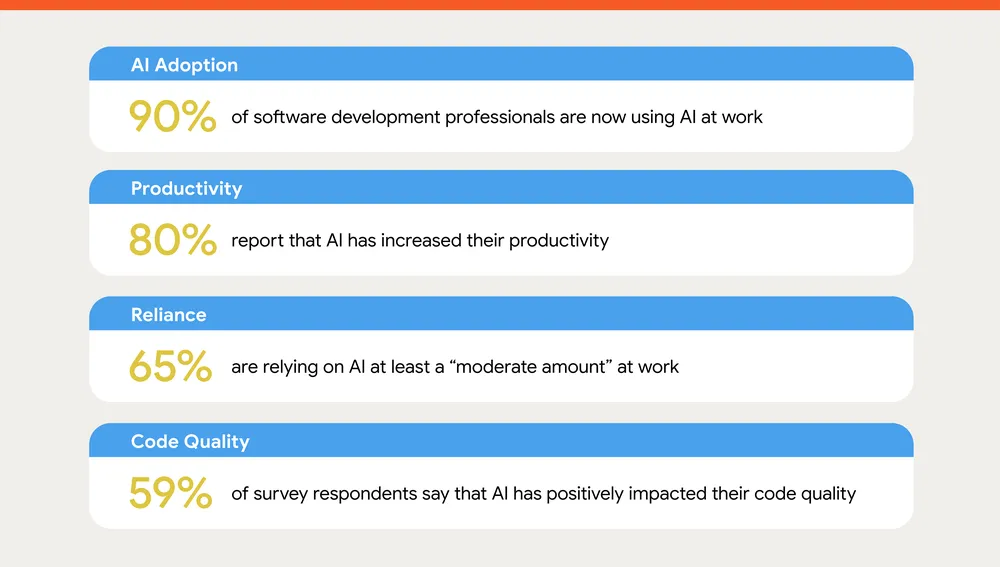

- Google’s 2025 DORA report surveyed 5,000 technology professionals and found that 90% of software developers now use AI tools in their workflows, marking a 14% increase from the previous year.

- Developers spend a median of two hours daily working with AI, with 65% reporting at least moderate reliance on the technology: 37% report “moderate reliance” and 28% “a lot” or “a great deal” of reliance.

- Over 80% of respondents report productivity gains from AI, with 59% indicating positive impacts on code quality and 71% using AI primarily for writing new code.

- The report reveals a trust paradox where only 24% express “a lot” or “a great deal” of trust in AI, while 30% trust it “a little” or “not at all,” despite widespread adoption.

- Google introduced a new DORA AI Capabilities Model identifying seven essential capabilities for organizations to maximize AI’s impact beyond simple adoption.

My take: 90% of all software developers use AI, and over 80% report increased productivity from it. With figures like these, I’m still amazed when large companies with tens of thousands of people feel they cannot afford to roll out paid chat clients to all their employees. These figures are only from the first wave of AI-driven development, where AI is used like a chatbot within Visual Studio Code. Next year I expect to see totally different figures as agentic AI-driven development takes hold in most organizations. The study also found that 30% had little or no trust in AI, and that of course depends a lot on the model. In my own experience I really do trust the results of GPT5-CODEX-HIGH, and this is a first for me. Claude Opus 4 has gotten worse really quickly, and previous smaller models like Claude Sonnet 4 and GPT-4o are wrong more often than they are right, so I don’t trust them either. If anything, I would have guessed that more than 30% of developers had little trust in these mid-level AI solutions.

GitHub Copilot CLI Launches Public Preview for Terminal AI Development

https://github.blog/changelog/2025-09-25-github-copilot-cli-is-now-in-public-preview/

The News:

- GitHub released Copilot CLI in public preview, bringing the Copilot coding agent directly to terminals without requiring IDE integration.

- Developers can install the tool via npm (`npm install -g @github/copilot`), authenticate with their GitHub accounts, and use their existing Copilot subscriptions (Pro, Business, or Enterprise).

- The CLI includes agentic capabilities for building, editing, debugging and refactoring code, with built-in GitHub integration for repositories, issues, and pull requests.

- GitHub’s implementation supports MCP (Model Context Protocol) servers by default and allows custom MCP server extensions.

- The system defaults to Claude Sonnet 4 but supports switching to GPT-5 by setting the environment variable `COPILOT_MODEL=gpt-5`.

- All actions require explicit user approval before execution, providing full control over code changes.
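Since the model switch is just an environment variable, it can also be set per invocation from a script. A minimal sketch, assuming the npm package puts a `copilot` executable on your PATH (check the actual name after installing); it sets `COPILOT_MODEL` only for the child process, not for your shell:

```python
import os
import shutil
import subprocess


def copilot_env(model: str = "gpt-5") -> dict[str, str]:
    """Copy of the current environment with COPILOT_MODEL set for a single run."""
    env = dict(os.environ)
    env["COPILOT_MODEL"] = model
    return env


def launch_copilot(model: str = "gpt-5") -> int:
    """Start an interactive Copilot CLI session with the chosen model; returns the exit code."""
    exe = shutil.which("copilot")  # assumed executable name
    if exe is None:
        raise FileNotFoundError(
            "Copilot CLI not found; install it with: npm install -g @github/copilot"
        )
    return subprocess.call([exe], env=copilot_env(model))
```

Calling `launch_copilot()` would open a session using GPT-5, while every other variable in your environment is passed through unchanged.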

My take: These CLI tools are critical for agentic software development, since they give the agent full terminal access to run tools and write custom software that interacts with the code base. Both Claude Code and OpenAI Codex often surprise me when they decide that the best way to solve a specific task is to write a Python program that does one task so the other three tasks can be done quicker. They also use tools like ripgrep and are surprisingly effective at everyday computer use. This is the future of programming, so of course both Cursor and GitHub want to be on board. What they do NOT have, however, is unlimited access to the latest and greatest models like Claude Opus 4.1 and GPT5-CODEX-HIGH. It’s these models that make it work, so just having this CLI won’t make much of a difference. If the next “standard” models, such as GPT-5.5 or Claude Sonnet 4.5, reach the same performance as today’s GPT5-CODEX-HIGH, then most developers will be able to get really productive with agentic development. Until then, I recommend that every developer who really wants to try this buys an OpenAI Pro license and installs OpenAI Codex; it’s an incredible experience.

Perplexity Launches Search API for Global Web Index Access

https://www.perplexity.ai/hub/blog/introducing-the-perplexity-search-api

The News:

- Perplexity launches its Search API providing developers access to the same infrastructure that powers its public answer engine, covering hundreds of billions of webpages.

- The API returns raw, ranked web results with structured snippets rather than synthesized answers, distinguishing it from Perplexity’s existing Sonar API.

- The service processes tens of thousands of index updates per second to maintain real-time freshness and uses AI-powered content parsing to handle diverse web content.

- Pricing starts at $5 per 1,000 requests for raw searches, with the company offering an SDK and open-source evaluation framework for developers.

- The API divides documents into sub-document units that are individually scored against queries, reducing preprocessing requirements for AI applications.
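Scoring sub-document units instead of whole pages means an application gets back the passage that matches, not a page it has to chop up itself. A toy sketch of the idea, plus the cost arithmetic from the quoted list price. The fixed-size word chunks and the term-overlap score are simple stand-ins chosen for illustration; they are not Perplexity’s actual ranking method:

```python
import re
from dataclasses import dataclass

PRICE_PER_1000_REQUESTS_USD = 5.0  # list price quoted in the announcement


def estimate_cost(requests: int) -> float:
    """Estimated cost in USD for a number of raw search requests."""
    return requests / 1000 * PRICE_PER_1000_REQUESTS_USD


@dataclass
class Chunk:
    doc_id: str
    text: str
    score: float = 0.0


def split_into_chunks(doc_id: str, text: str, max_words: int = 40) -> list[Chunk]:
    """Split a document into fixed-size word windows (a stand-in for real sub-document units)."""
    words = text.split()
    return [
        Chunk(doc_id, " ".join(words[i : i + max_words]))
        for i in range(0, len(words), max_words)
    ]


def terms(text: str) -> set[str]:
    """Lowercased alphanumeric terms in a piece of text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def rank_chunks(query: str, docs: dict[str, str], max_words: int = 40, top_k: int = 3) -> list[Chunk]:
    """Score every chunk by the fraction of query terms it contains and return the best ones."""
    query_terms = terms(query)
    chunks = [c for doc_id, text in docs.items() for c in split_into_chunks(doc_id, text, max_words)]
    for chunk in chunks:
        overlap = len(query_terms & terms(chunk.text))
        chunk.score = overlap / len(query_terms) if query_terms else 0.0
    return sorted(chunks, key=lambda c: c.score, reverse=True)[:top_k]


docs = {
    "recycling": "Local rules say glass goes in the recycling bin. Food scraps go in compost.",
    "search": "The search API returns ranked web results with structured snippets.",
}
best = rank_chunks("ranked snippets from the search api", docs, max_words=8, top_k=1)[0]
print(best.doc_id, round(best.score, 2), estimate_cost(1_000_000))
```

At the quoted price, one million raw searches come to about $5,000, and with real sub-document units the returned passage can go into a model’s context with little extra preprocessing.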

My take: Perplexity operates its own custom search engine that indexes hundreds of billions of webpages and runs on an infrastructure comprising tens of thousands of CPUs and hundreds of terabytes of RAM. They are actually one of the larger search providers right now. So why would you want to use Perplexity’s search index instead of Google, Bing or Brave? One reason is that Perplexity finds things that many other search engines do not. Perplexity takes an aggressive approach and crawls web pages that have clearly declared they do not want to be crawled, so I can see that for some people and some use cases it makes sense to go with Perplexity instead of one of the more established search engines.


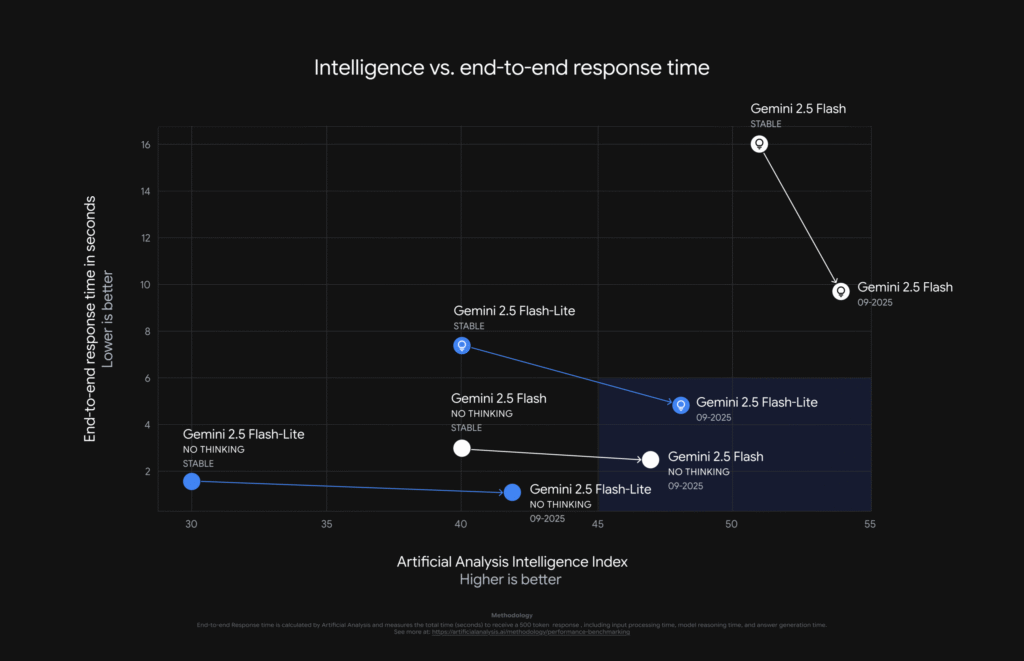

Google Updates Gemini 2.5 Flash with Enhanced Tool Use and Efficiency

The News:

- Google released updated versions of Gemini 2.5 Flash and Flash-Lite models through Google AI Studio and Vertex AI, focusing on improved quality and efficiency.

- Gemini 2.5 Flash improves by 5.1 percentage points on the SWE-Bench Verified benchmark, rising from 48.9% to 54%, with better agentic tool use and multi-step reasoning capabilities.

- The model produces higher-quality outputs while using fewer tokens, reducing latency and deployment costs across applications.

- Flash-Lite demonstrates 50% reduction in output tokens compared to previous versions and operates 40% faster than the July 2025 release.

- Google introduced -latest aliases (gemini-flash-latest, gemini-flash-lite-latest) that automatically point to the most recent model versions, with 2-week advance notice before updates.
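Benchmark deltas are easy to misread, so it helps to separate percentage points from relative change. A small sketch of the arithmetic for the SWE-Bench Verified numbers above:

```python
def improvement(before: float, after: float) -> tuple[float, float]:
    """Return (percentage-point change, relative change in percent) between two scores."""
    points = after - before
    relative = points / before * 100
    return points, relative


points, relative = improvement(48.9, 54.0)
print(f"{points:.1f} percentage points, {relative:.1f}% relative")
# prints "5.1 percentage points, 10.4% relative"
```

In other words, the updated Flash model solves roughly a tenth more of the benchmark’s tasks than the previous version.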

My take: I’m a big fan of software optimizations, so these updates just make me happy. A 50% reduction in output tokens for Flash-Lite, while Flash goes from 48.9% to 54% on SWE-Bench Verified, is huge. If you need a fast, high-quality and very low-cost LLM for agentic use, this is it.