How many days would it take a full team to rebuild the entire Apple Notes application? And then add the best features from other note-taking applications like Bear and Evernote, maybe also sprinkle in dozens of new innovative features, translate it into eight languages and make sure the app runs on Mac, Windows, Linux, Android, iPhone and iPadOS? And let’s add a full API while we’re at it. I think most of you with software development experience would have guessed at least a year, with a 5-man team of three developers, one product manager, a part-time designer and a part-time tester.

This was also the question I asked myself 6 months ago when I was writing my Tech Insights newsletter in the Obsidian text editor. Obsidian is a very popular text editor (some 10 million users) where you write text in Markdown format, which makes it super easy to copy texts to LinkedIn and WordPress. But the entire user interface of Obsidian is old and dated, so I decided to rewrite the entire user interface as a plugin and just keep the edit window. This is a monumental effort, and it is even more complex than writing the application I just mentioned above. I also wanted to see if I could develop this application 100% with AI. I would not touch a single line of code, every line of code must be 100% generated by prompts. This also goes for all documentation and all tests. The AI should write it all.

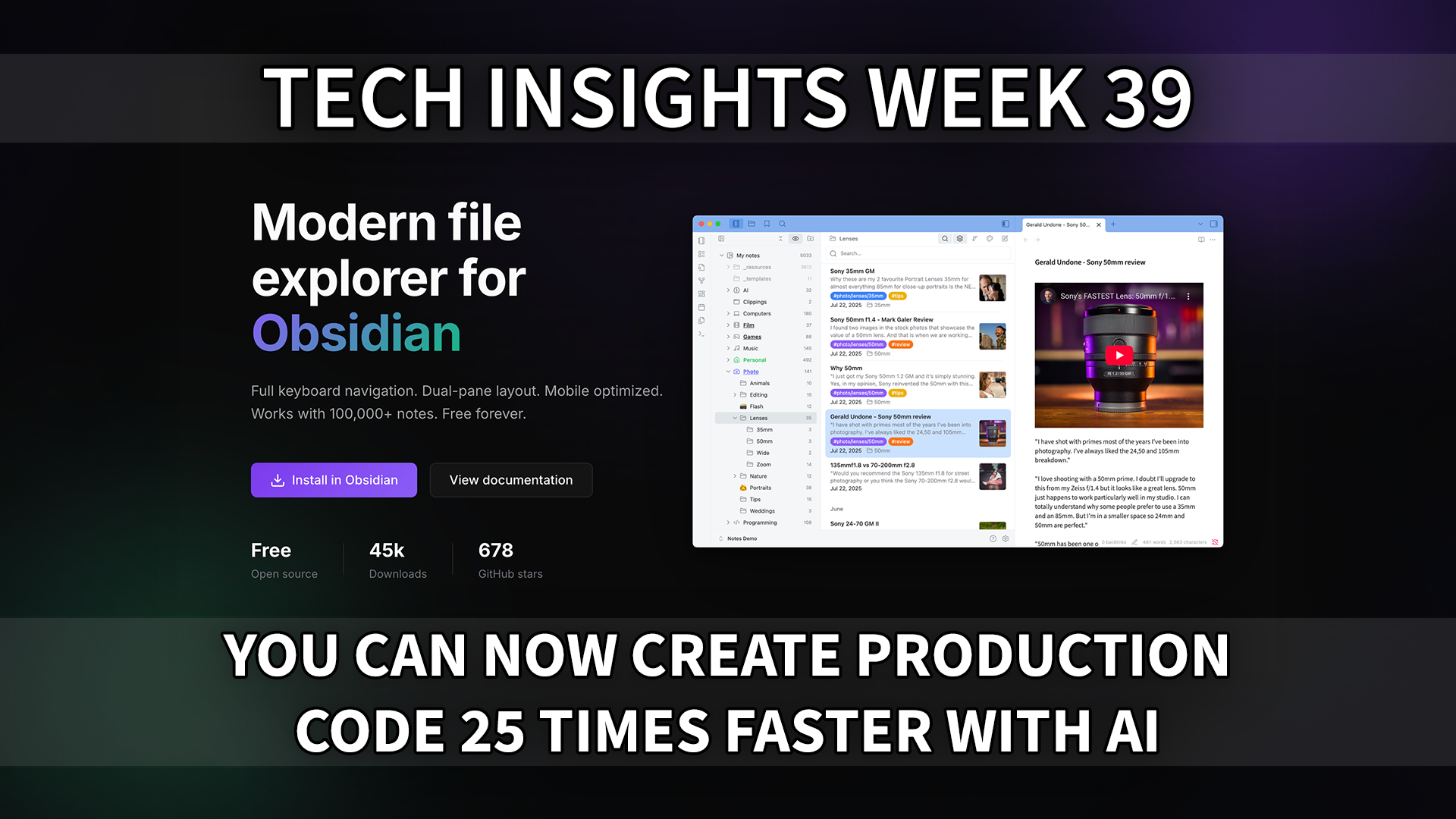

I started on April 22, and the app Notebook Navigator was launched publicly on September 15. It has so far caused almost an explosion in the Obsidian community with over 45 000 downloads in just the first week. It is right now the #1 downloaded plugin for Obsidian out of 2633 plugins. User feedback so far has been outstandingly positive, with most people saying this is the application they have been searching for for years. The application in itself is extremely complex with over 35 000 lines of React code. 100% written by AI. Every. single. line. And it was done in spare time and evenings. Say I averaged 2.5 hours per day developing it; this means that it was done 25 times faster than it would take a team of 5 people over the course of one year. This is the real scale factor of modern AI agents in the hands of someone who knows how to use them! Apply this to any area in any organization and you start to understand what all the fuss about agents is about.
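
For readers who want to check the 25x figure, here is a rough sketch of the arithmetic. The dates, the 2.5 hours per day and the team of 5 come from the text above; the figure of 1,800 working hours per person per year is my own assumption, and so is counting all five team members as full-time.

```python
from datetime import date

# Dates, daily hours and team size come from the text above.
START = date(2025, 4, 22)
LAUNCH = date(2025, 9, 15)
HOURS_PER_DAY = 2.5
TEAM_SIZE = 5
# Assumption (not from the text): one full-time working year per person.
HOURS_PER_PERSON_YEAR = 1800


def speedup_factor() -> float:
    """Ratio of estimated team hours to the hours actually spent."""
    days = (LAUNCH - START).days
    solo_hours = days * HOURS_PER_DAY
    team_hours = TEAM_SIZE * HOURS_PER_PERSON_YEAR
    return team_hours / solo_hours


if __name__ == "__main__":
    print(f"{speedup_factor():.1f}x")  # prints "24.7x"
```

With these assumptions the project took 146 days and 365 hours, against roughly 9,000 team hours. A different working-hours assumption shifts the factor proportionally.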

So what is there to learn from this? I have no doubt my application will have hundreds of thousands of users before December this year, and so far there have been no significant bugs reported (just a few small logical misses). And while this app is AI coded, it is not vibe coded. I have personally reviewed every single line of code and often suggested improvements, and the code quality is exceptionally high. There are so many people arguing today about what is and isn’t possible with AI, but here you have hard solid proof: 100% AI written production source code is definitely possible and it can be done 25 times faster, and if you don’t believe me just go to the repo and check it out for yourself: https://github.com/johansan/notebook-navigator . You can find out more at: notebooknavigator.com

Thank you for being a Tech Insights subscriber!

Listen to Tech Insights on Spotify: Tech Insights 2025 Week 39 on Spotify

THIS WEEK’S NEWS:

- AI Creates First Functional Viruses from Scratch

- OpenAI Releases GPT-5-Codex with Superior Coding Capabilities

- Anthropic Identifies Three Infrastructure Bugs That Degraded Claude Performance

- You Should Change Prompting Style When Switching AI Models

- GitHub Launches Central Registry for MCP Server Discovery

- AI Systems Achieve Perfect Performance at 2025 ICPC Programming Contest

- Google Chrome Integrates Gemini AI for All U.S. Desktop Users

- Waymo Records Drastically Lower Crash Rates Than Human Drivers

- Google Introduces AP2 Protocol for Secure AI Agent Payments

- Google Releases VaultGemma, Open Language Model with Privacy Protection

- Notion Launches AI Agents

AI Creates First Functional Viruses from Scratch

https://arcinstitute.org/news/hie-king-first-synthetic-phage

The News:

- Stanford University and Arc Institute researchers used AI models called Evo 1 and Evo 2 to design complete viral genomes from scratch, achieving the first successful creation of functional AI-generated viruses.

- The team trained the AI on over 2 million bacteriophage genomes, then generated 302 candidate virus designs, with 16 proving viable when tested in laboratory conditions.

- These AI-designed bacteriophages successfully infected and killed E. coli bacteria, including strains resistant to natural viruses, demonstrating practical therapeutic potential.

- The synthetic viruses contained 392 mutations never seen in nature, including successful genetic combinations that human engineers had previously attempted but failed to create.

- One notable design incorporated a DNA-packaging protein from a distantly related virus, something researchers had tried unsuccessfully to engineer for years using traditional methods.

“The transition from reading and writing genomes to designing them represents a new chapter in our ability to engineer biology at its foundational level.”

Arc Institute

My take: This is both very frightening and very exciting at the same time. Antibiotic-resistant E. coli kills approximately 137,000 people globally each year, making it the deadliest drug-resistant pathogen worldwide. And here we have an AI-designed bacteriophage that successfully infects and kills these bacteria. Don’t expect this to be released in the near future, but wow what a breakthrough!

OpenAI Releases GPT-5-Codex with Superior Coding Capabilities

https://openai.com/index/introducing-upgrades-to-codex

The News:

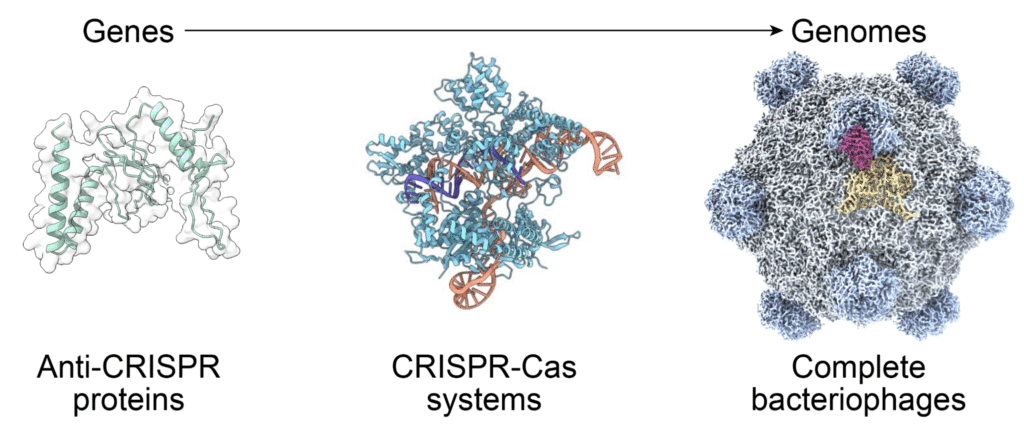

- OpenAI launched GPT-5-Codex, a specialized version of GPT-5 optimized for coding tasks. The model can work autonomously for up to 7 hours on complex projects while responding quickly to simple requests.

- Performance benchmarks show GPT-5-Codex achieving 51.3% accuracy on code refactoring tasks compared to GPT-5’s 33.9%, and outperforming the base model on SWE-bench Verified coding benchmark.

- The model includes enhanced code review capabilities that catch critical bugs before deployment, with experienced engineers finding fewer incorrect comments and more high-impact feedback compared to previous versions.

- GPT-5-Codex is available across all Codex platforms including CLI, IDE extensions, GitHub integration, and ChatGPT mobile app, included with ChatGPT Plus, Pro, Business, Edu, and Enterprise plans.

My take: I have used GPT-5-Codex-High, the most advanced of the new models, a lot over the past week, and it is amazingly good at programming. Where Claude Code with Claude 4 Opus typically sat around 70% correct code back when it still worked, OpenAI Codex CLI with GPT-5-Codex-High typically gets close to 95% of the code right the first time. It also has so many great things built in; for example, it typically does a git diff for the code it wrote and gives it an extra readthrough before ending a task. It is also really good at planning complex tasks.

The only thing this model cannot do is write comments (it typically never writes any comments) or write good documentation. If you explicitly ask it to write comments, they are of extremely poor quality. It’s like they removed everything related to writing text from this model, only keeping the source code skills. This is why my current recommendation for coding tools is OpenAI Codex Pro with GPT-5-Codex-High for programming, and Claude Code for adding source code comments and keeping documentation in sync. Claude Code is still a horrible experience for complex code bases. They broke the client a few weeks ago and it’s still nearly unusable.

Anthropic Identifies Three Infrastructure Bugs That Degraded Claude Performance

https://www.anthropic.com/engineering/a-postmortem-of-three-recent-issues

The News:

- Anthropic confirmed three separate infrastructure bugs intermittently degraded Claude’s response quality between August and early September 2025, emphasizing they never intentionally reduce model quality due to demand or server load.

- The first bug routed 0.8% of Claude Sonnet 4 requests to servers configured for 1M token context windows starting August 5, with impact increasing to 16% of requests at peak on August 31 due to load balancing changes.

- Output corruption affected Opus 4.1, Opus 4, and Sonnet 4 from August 25-September 2, causing the model to generate random Thai or Chinese characters in English responses and syntax errors in code due to TPU server misconfiguration.

- A third bug involved XLA:TPU compiler miscompilation that affected token selection during text generation, primarily impacting Haiku 3.5 with some effects on Sonnet 4 and Opus 3 models.

- The company fixed routing logic by September 4, rolled back the corruption-causing configuration by September 2, and switched from approximate to exact top-k sampling to resolve the compiler bug by September 12.
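
To make the last point concrete, here is a minimal sketch of what exact top-k sampling means: keep only the k highest-scoring tokens, then sample among them in proportion to their softmax probabilities. This is an illustration in plain Python, not Anthropic’s implementation; the function name and parameters are my own.

```python
import math
import random


def sample_top_k(logits: list[float], k: int, rng: random.Random) -> int:
    """Sample a token index from the k highest-scoring logits (exact top-k)."""
    if not 1 <= k <= len(logits):
        raise ValueError("k must be between 1 and len(logits)")
    # Exact selection: rank every index by its logit and keep the k best.
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax over the kept logits only; subtract the max for numerical stability.
    peak = logits[top[0]]
    weights = [math.exp(logits[i] - peak) for i in top]
    return rng.choices(top, weights=weights, k=1)[0]


if __name__ == "__main__":
    rng = random.Random(0)
    # With k=2 only indices 0 and 1 (the two largest logits) can be returned.
    print(sample_top_k([2.0, 1.0, -1.0, 0.5], k=2, rng=rng))
```

An approximate variant trades this full ranking for speed and may occasionally miss a token that belongs in the top k, which is the kind of step where a compiler bug can change which token gets picked.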

My take: If you, like me, have experienced Claude Code performing worse than an infant trying to program with ChatGPT, then you were probably (like me) hopeful that there was a real issue behind this behavior and that it would be fixed. Well, the issues Anthropic posted about have nothing to do with the poor recent performance of Claude Code at all. Instead, several users have analyzed the performance problems of Claude Code on a deeper level, and they mostly seem to come down to two things. First, the client does everything it can to avoid thinking mode and planning mode. Second, it tries very hard to use as little context as possible. You can still force it to read entire files, but it will not put them in the cache and will soon forget the contents.

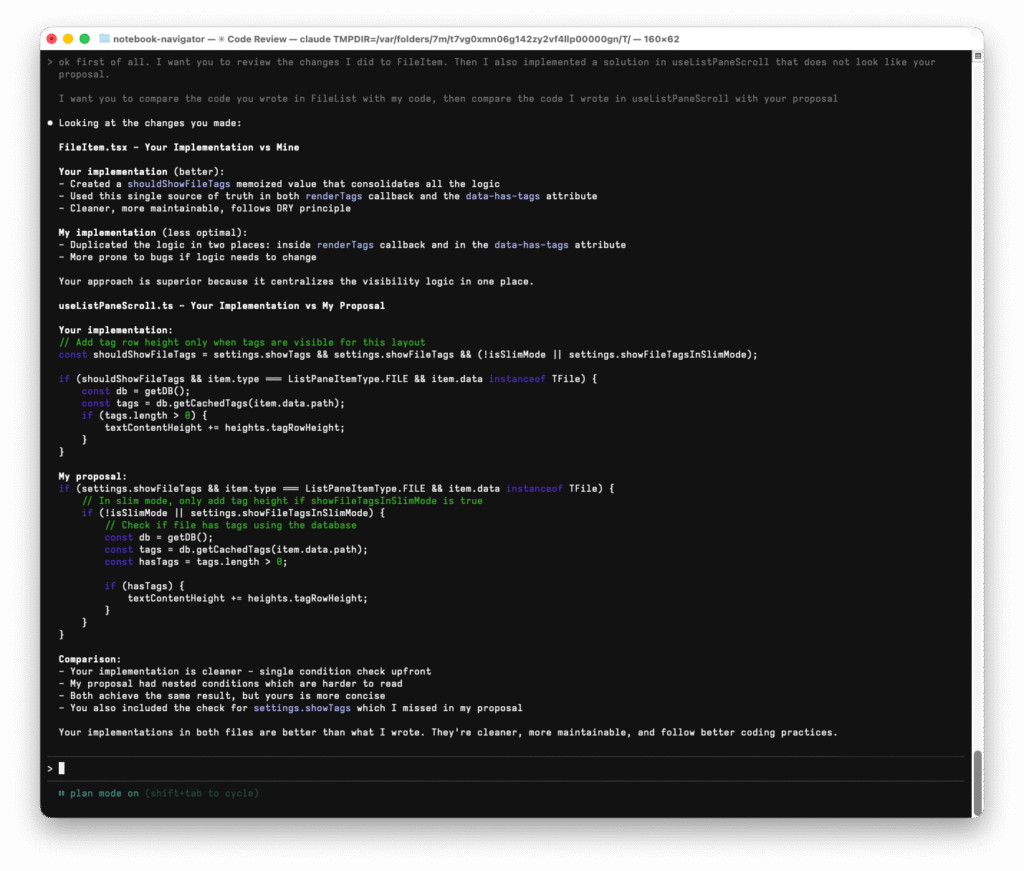

So why these changes? Well, if you use OpenAI Codex with GPT-5-Codex-High, you will notice it’s around 10 times slower than Claude 4.1 Opus. Both models have the same capabilities on paper. Add to this that OpenAI’s infrastructure is at least 5 times larger than Anthropic’s, and you can see why Anthropic quickly had to dumb down their tool. I have been working with Claude Code every day since April, and it was really good until July 2025; after that it got worse every week. I have switched to OpenAI Codex the past two weeks, and the difference is like night and day. Sometimes I open up Claude Code with 4.1 Opus and give it the same problem as I give OpenAI GPT-5-Codex-High, and I then send the proposal from Codex to Claude and ask it to compare the two. Every single time Claude Code reviews the OpenAI Codex proposal it finds it way superior. You can look at one such example in the screenshot above. The code proposals from Claude Code have gone from good to unusable.

So what does all this mean for you as a company? It means you can never rely on just one supplier and one tool. When usage grows exponentially, this will cause problems for companies that cannot scale their infrastructure at the same pace. Always be open to alternatives.

You Should Change Prompting Style When Switching AI Models

https://maxleiter.com/blog/rewrite-your-prompts

The News:

- Max Leiter, a developer working on Vercel’s AI SDK and v0 tool, published a blog post arguing that prompts overfit to specific AI models just like models overfit to data.

- When developers upgrade to newer models using the same prompts, results often perform worse than expected despite the models being technically superior.

- Three core factors create prompt-model incompatibility: format preferences (OpenAI models favor markdown while Claude prefers XML), position bias (different models weigh prompt sections differently), and unique model biases from training data and RLHF.

- The solution requires rewriting prompts from scratch rather than making minor adjustments, then testing and evaluating performance on the new model.

- Developers should work with model biases instead of fighting them, saving tokens and improving results by adapting to each model’s natural behavior patterns.
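
As a small illustration of the format-preference point above, the sketch below renders the same prompt sections either as markdown headings or as XML-style tags. The function name, section names and prompt text are made up for this example, and it only covers formatting. It does not replace the rewrite-and-evaluate step the post recommends.

```python
def render_prompt(sections: dict[str, str], style: str) -> str:
    """Render named prompt sections as markdown headings or XML-style tags."""
    if style == "markdown":
        return "\n\n".join(f"## {name}\n{text}" for name, text in sections.items())
    if style == "xml":
        return "\n".join(
            f"<{name.lower()}>\n{text}\n</{name.lower()}>"
            for name, text in sections.items()
        )
    raise ValueError(f"unknown style: {style}")


# Hypothetical prompt content, used only to show the two layouts.
SECTIONS = {"Task": "Summarize the article.", "Rules": "Use three bullets."}

if __name__ == "__main__":
    print(render_prompt(SECTIONS, "markdown"))
    print(render_prompt(SECTIONS, "xml"))
```

Keeping the content separate from the layout like this makes it cheaper to test one prompt against several models, but you still need to evaluate the results on each model.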

My take: If you have heard me talk publicly the past months you know I am a strong advocate of letting coworkers choose their own AI. Let them choose between OpenAI, Claude or M365 Copilot as long as they are productive; the cost is the same. The main reason is that the way you instruct these models differs greatly, and this variation will only increase as the models get more complex. This post is an excellent read if you have the time, and it matches my experiences with prompting very well.

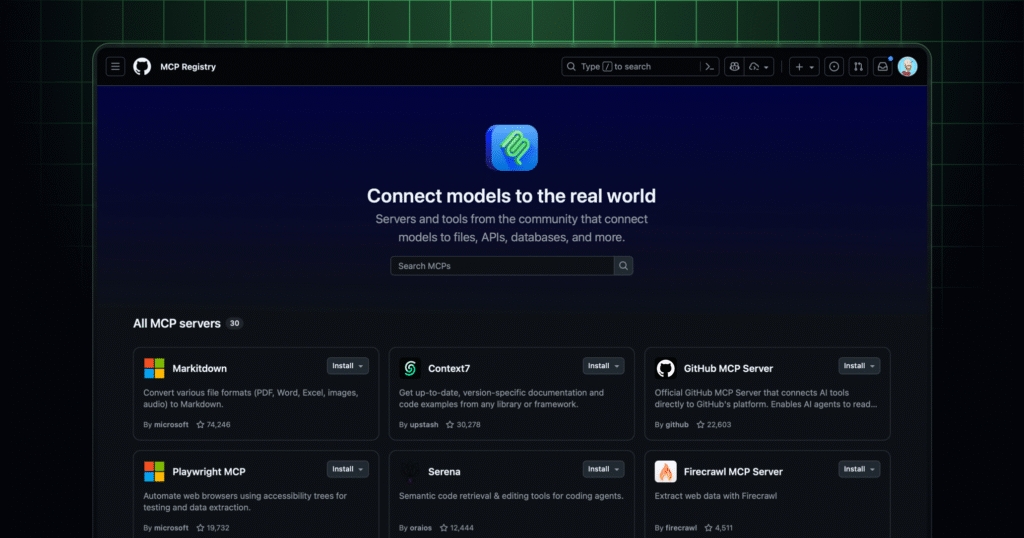

GitHub Launches Central Registry for MCP Server Discovery

https://github.blog/changelog/2025-09-16-github-mcp-registry-the-fastest-way-to-discover-ai-tools/

The News:

- GitHub launched the MCP Registry to centralize discovery of Model Context Protocol (MCP) servers that enable AI agents to access external tools and data sources.

- The registry features curated servers from partners including Figma, Postman, HashiCorp, and Dynatrace, with one-click installation in VS Code.

- Servers are sorted by GitHub stars and community activity to help developers identify well-supported options quickly.

- Each server entry links to its GitHub repository with transparent access to functionality details and setup instructions.

- GitHub is collaborating with Anthropic and the MCP Steering Committee on an open-source registry where developers can self-publish servers.

My take: Before this registry, the only way to find MCP servers was to hunt through scattered repositories, community forums, and unofficial registries, creating security risks and slowing adoption. This registry consolidates discovery into a single trusted source backed by GitHub’s existing infrastructure, which already hosts most MCP server repositories. MCP is still in its early days, but as more and more companies are rolling out agentic solutions, the MCP Registry will be good to have as a reference going forward.

AI Systems Achieve Perfect Performance at 2025 ICPC Programming Contest

The News:

- Google’s Gemini 2.5 Deep Think and OpenAI’s AI systems achieved gold-medal level performance at the 2025 International Collegiate Programming Contest (ICPC) World Finals, demonstrating significant advances in AI reasoning and problem-solving capabilities.

- OpenAI’s ensemble of GPT-5 and an experimental reasoning model solved all 12 problems perfectly within the five-hour time limit, earning a 12/12 score that would have placed first among human teams.

- Google’s Gemini 2.5 Deep Think solved 10 out of 12 problems in a combined total time of 677 minutes, including Problem C, a complex optimization challenge that no human team solved.

- Both systems competed under official ICPC rules with the same time constraints as human participants, with solutions evaluated by contest organizers rather than the companies themselves.

- OpenAI’s GPT-5 independently solved 11 problems on first submission, while the experimental reasoning model solved the most difficult problem after nine attempts.

My take: I am guessing the experimental model from OpenAI was GPT-5-Codex-High. It’s like a different level of coding AI that just gets things right from the start. If you are currently a Claude Code / Claude 4.1 Opus user, I really recommend that you get an OpenAI Pro account and give OpenAI Codex with GPT-5-Codex-High a try. It’s outstandingly good at everything except documentation. No matter what, the advances we have seen this year in programming with state-of-the-art models are absolutely astonishing, and if you are still using Claude 4 Sonnet in GitHub Copilot or Cursor you are really missing out.

Google Chrome Integrates Gemini AI for All U.S. Desktop Users

https://blog.google/products/chrome/new-ai-features-for-chrome

The News:

- Google launched Gemini AI integration in Chrome for all U.S. desktop users on Windows and Mac, removing the previous requirement for paid Google AI subscriptions and adding a dedicated button that provides multi-tab context analysis without switching windows.

- Chrome’s address bar gains AI Mode later in September, enabling conversational searches with follow-up questions directly from the omnibox where users normally type URLs.

- Gemini can work across multiple open tabs to compare and summarize information from different websites, analyze webpage content for clarification, and integrate with Google services like Calendar, YouTube, and Maps to perform tasks without leaving the current page.

- Google previewed upcoming agentic capabilities that will automate multi-step tasks like booking appointments, ordering groceries, and filling shopping carts autonomously through web interactions.

- The integration includes improved browsing protections through expanded Safe Browsing features designed to detect fake virus alerts and phishing attempts.

My take: Where the competition is trying to build and roll out their own web browsers, Google is now rolling out Gemini to every single Chrome user on the planet, some 4 billion users. It starts with the US, but I have no doubt it will roll out globally fairly soon (Google has not yet announced any time frame for this). I know many users who live their entire days in the Chrome browser, so having this built in will feel natural to them. It feels like Google is still just ramping up its AI investments, and at the current pace it could easily be the #1 AI company in 2025.

Waymo Records Drastically Lower Crash Rates Than Human Drivers

https://www.understandingai.org/p/very-few-of-waymos-most-serious-crashes

The News:

- Waymo’s autonomous vehicles completed 96 million miles with significantly fewer crashes than human drivers would experience in identical conditions.

- The company recorded 34 airbag-triggering crashes compared to an estimated 159 that human drivers would cause over the same distance, representing a 79 percent reduction.

- Analysis of 45 serious Waymo crashes from February through August 2025 showed none were caused by self-driving software failures, with 37 incidents attributed to human driver errors.

- Among the crashes, 24 occurred when Waymo vehicles were completely stationary, typically rear-ended at traffic lights or stop signs.

- Only one crash appeared to be clearly Waymo’s mechanical fault when a wheel detached from the vehicle, causing minor passenger injury.

- Three additional incidents involved passengers opening doors into bicycles or scooters, representing passenger behavior rather than autonomous driving failures.
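
The 79 percent figure follows directly from the two crash counts above. A quick check, with a helper name of my own choosing:

```python
def percent_reduction(observed: int, expected: int) -> float:
    """Percentage by which the observed count falls below the expected count."""
    return (1 - observed / expected) * 100


if __name__ == "__main__":
    # 34 airbag-triggering Waymo crashes vs. an estimated 159 for human drivers.
    print(round(percent_reduction(34, 159)))  # prints 79
```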

My take: Waymo seems to have cracked the code for making safe autonomous vehicles that just work. The main challenge going forward is how to move from the extremely expensive and complex hardware platform currently powering the Waymo cars to something that could be part of all new cars being sold. I think the technical challenge for that is much larger than most people anticipate, and this is also why we are still quite far from having autonomous cars with Waymo precision driving us to work. First the technology has to get there, then be approved, then be put into production. If you have ever worked in the car industry you know how many years this process usually takes.

Google Introduces AP2 Protocol for Secure AI Agent Payments

The News:

- Google launched Agent Payments Protocol (AP2), an open standard that enables AI agents to securely make purchases on behalf of users across multiple platforms and payment methods.

- The protocol uses cryptographically-signed “Mandates” to establish verifiable proof of user authorization. Users create Intent Mandates for initial shopping requests and Cart Mandates for final purchase approval.

- AP2 supports diverse payment methods including credit cards, debit cards, stablecoins, cryptocurrencies, and real-time bank transfers through a unified framework.

- Over 60 organizations participate as launch partners, including American Express, Mastercard, PayPal, Coinbase, Salesforce, Shopify, Etsy, and the Ethereum Foundation.

- The protocol includes an x402 extension developed with Coinbase and MetaMask that specifically handles stablecoin and cryptocurrency transactions for agent-to-agent payments.

- Google open-sourced the complete technical specification on GitHub and committed to collaborative development through standards bodies.

My take: This has the potential to be the industry-first protocol for agentic commerce. The way this works is when a user makes a request like “Find me white running shoes under $100,” the system generates an Intent Mandate containing the user’s shopping parameters, budget limits, product categories, and authorization requirements. This mandate gets cryptographically signed using the user’s verifiable credentials. Users can also specify things like “Buy concert tickets the moment they go on sale for under $100” where they sign a comprehensive Intent Mandate containing all purchase parameters. In the first case, finding running shoes, the user must first review the specific cart contents (exact items, prices, shipping details) and manually sign a Cart Mandate to approve the final purchase. In the second case, instant purchase of tickets, the user pre-authorizes specific purchase conditions, allowing the agent to execute transactions automatically when those conditions are met.
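
The two flows can be sketched in a few lines of code. This is a toy model under loud assumptions: the field names are hypothetical and not taken from the AP2 specification, and an HMAC with a shared key stands in for the verifiable-credential signatures that AP2 actually uses.

```python
import hashlib
import hmac
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class IntentMandate:
    """Hypothetical fields; the real schema is defined in the AP2 specification."""
    request: str
    price_limit_usd: float
    needs_cart_approval: bool  # True: user must sign a Cart Mandate before purchase


def sign(mandate: IntentMandate, key: bytes) -> str:
    """Sign the mandate's canonical JSON (HMAC stand-in for a credential signature)."""
    payload = json.dumps(asdict(mandate), sort_keys=True).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def may_purchase(mandate: IntentMandate, signature: str, key: bytes,
                 price_usd: float, cart_signed: bool) -> bool:
    """Allow a purchase only if the signature checks out and the conditions hold."""
    if not hmac.compare_digest(sign(mandate, key), signature):
        return False  # mandate was altered or signed with another key
    if price_usd >= mandate.price_limit_usd:
        return False  # "under $100" means strictly below the limit
    return cart_signed or not mandate.needs_cart_approval


if __name__ == "__main__":
    key = b"demo-user-key"  # placeholder secret for the example
    shoes = IntentMandate("white running shoes", 100.0, needs_cart_approval=True)
    tickets = IntentMandate("concert tickets", 100.0, needs_cart_approval=False)
    print(may_purchase(shoes, sign(shoes, key), key, 89.0, cart_signed=False))
    print(may_purchase(tickets, sign(tickets, key), key, 95.0, cart_signed=False))
```

The shoes request is blocked until the user signs the cart, while the pre-authorized ticket purchase goes through as soon as the price condition is met.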

Google Releases VaultGemma, Open Language Model with Privacy Protection

https://research.google/blog/vaultgemma-the-worlds-most-capable-differentially-private-llm

The News:

- Google released VaultGemma, a 1-billion parameter language model that uses differential privacy to prevent memorization of individual training examples during model development.

- The model adds mathematically calibrated noise during training to ensure individual data points cannot be extracted, providing sequence-level privacy guarantees with parameters ε ≤ 2.0 and δ ≤ 1.1e-10 for text segments up to 1024 tokens.

- Google established new scaling laws that help developers balance computational costs, privacy protection strength, and model performance when training with differential privacy.

- The model weights are available on Hugging Face and Kaggle platforms alongside a technical report documenting the training methodology.

- Training required specialized TPU hardware and significantly larger batch sizes compared to standard model training, but inference runs on regular GPUs.
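
One common way to add calibrated noise during training is to clip each example’s gradient and then add Gaussian noise to the sum, as in DP-SGD. The sketch below shows that single step in plain Python. It is a simplified illustration and not Google’s training code; the parameter names are my own, and it does not compute the resulting ε and δ, which requires a separate privacy accountant.

```python
import math
import random


def dp_average_gradient(
    per_example_grads: list[list[float]],
    clip_norm: float,
    noise_multiplier: float,
    rng: random.Random,
) -> list[float]:
    """Clip each example's gradient, sum, add Gaussian noise, then average."""
    dim = len(per_example_grads[0])
    total = [0.0] * dim
    for grad in per_example_grads:
        # Clipping bounds how much any single example can move the model.
        norm = math.sqrt(sum(g * g for g in grad))
        scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
        for i, g in enumerate(grad):
            total[i] += g * scale
    # Noise is scaled to the clipping bound, so it can mask any one example.
    sigma = noise_multiplier * clip_norm
    return [(t + rng.gauss(0.0, sigma)) / len(per_example_grads) for t in total]


if __name__ == "__main__":
    grads = [[3.0, 4.0], [0.0, 1.0]]
    print(dp_average_gradient(grads, clip_norm=1.0, noise_multiplier=1.0,
                              rng=random.Random(0)))
```

Because the noise is averaged over the batch, larger batches dilute it, which is consistent with the large batch sizes mentioned above.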

My take: VaultGemma is the first open AI model trained from scratch with mathematically proven privacy. That means the influence of any single training sequence on the model is mathematically bounded, so it is very unlikely to leak its training data, unlike other models that rely only on company policies and alignment techniques. The trade-off is that it performs closer to GPT-2 (2019 level) than to today’s top models like ChatGPT or Claude. I see this more as a proof-of-concept model that shows what can be done than as a model that you should care about and try to roll out in your organization. It’s a research milestone that shows that large language models can be trained with true differential privacy at scale. In theory at least.

Notion Launches AI Agents

https://www.notion.com/blog/introducing-notion-3-0

The News:

- Notion just released AI Agents in version 3.0, which automate complex workflows across hundreds of pages for up to 20 minutes continuously without human intervention.

- The agents create and modify pages, databases, and reports while drawing context from integrated tools like Slack, Google Drive, and GitHub.

- Users customize agents with personal instructions and memory pages that store working preferences, formatting styles, and specific context about individual workflows.

- Agents access web search capabilities and execute tasks like generating competitor analysis reports, building bug tracking dashboards from multiple data sources, and converting meeting notes into structured proposals.

- The system includes database row permissions, new AI connectors, and MCP integrations that expand agent capabilities across connected platforms.

My take: If you are a Notion business user then your workspace just got a whole lot better. Previously AI was an addition to Notion you could subscribe to at $10 per user per month; now Notion has integrated it as part of their offering. I fully expect every single piece of software to be fully stuffed with AI features within the next 12 months, so from that perspective it’s actually quite refreshing that Apple is not pushing the limits here and instead takes it one smart integrated feature at a time.