In the coming few weeks we should see a massive influx of new AI models. GPT-4.5 is due shortly, Grok 3 is to be released today (!) and is described as “scary smart” by Elon Musk, and Claude 4 is on its way too. These models are trained on next-generation hardware and should all show a substantial leap in performance, particularly in coding and agentic workflows.

For those less familiar with AI hardware, this next part might get a bit technical, but it’s worth understanding as it signals a major shift in how AI models are deployed.

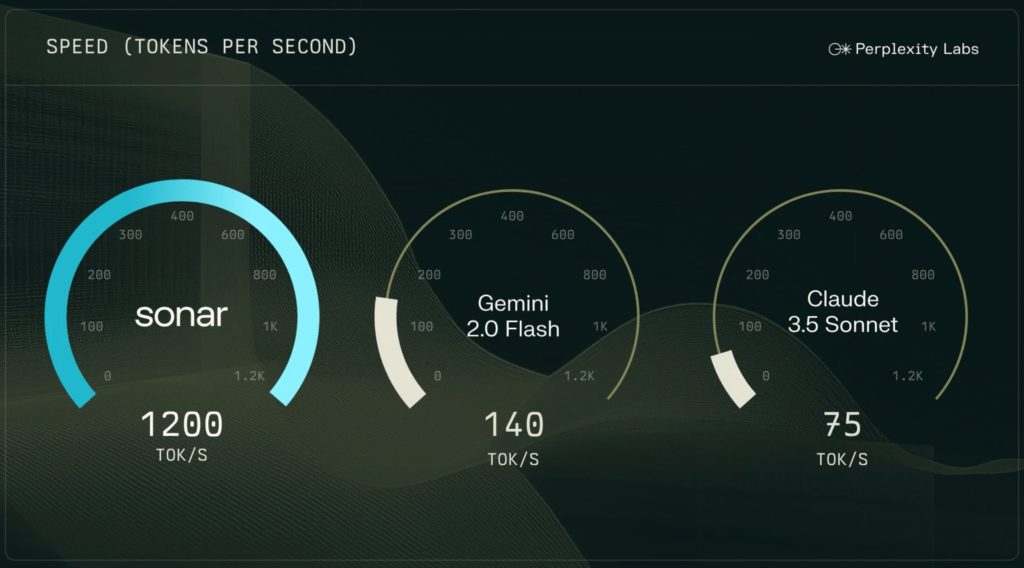

The biggest news last week was undoubtedly the rollout of Cerebras WSE-3 (Wafer-Scale Engine 3) as the hardware platform behind Perplexity Sonar. WSE-3 integrates 900,000 AI cores on a single chip that is 52x denser than the NVIDIA H100. In practice this means 125 petaflops of FP16, comparable to ~3.5 NVIDIA DGX B200 (Blackwell) servers. The WSE-3’s monolithic structure avoids the latency penalties of multi-GPU clusters, enabling near-instant responses even for complex queries. As future models move to test-time compute (spending most of their time in the inference phase), we will most definitely see a split where NVIDIA is mostly used for training (due to CUDA’s flexibility for backpropagation and mixed precision), and where custom chips such as WSE-3 are used for inference (where the priority is on fixed-point ops and memory bandwidth). This is also the split Perplexity uses today. OpenAI, Google, AWS and many other companies are investing heavily in custom silicon, and I expect NVIDIA’s monopoly to be heavily challenged within the next few years, at least for inference.
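The server comparison above can be sanity-checked with simple arithmetic. This is a minimal sketch that uses only the two figures quoted in the paragraph (125 petaflops and ~3.5 DGX B200 servers); the per-server number it prints is derived from them and is not an official specification.

```python
# Figures quoted in the text above; nothing here is an official spec.
WSE3_FP16_PETAFLOPS = 125.0
DGX_B200_EQUIVALENTS = 3.5  # "~3.5 NVIDIA DGX B200 servers"


def implied_petaflops_per_server(total_pf: float, servers: float) -> float:
    """Per-server throughput implied by the comparison in the text."""
    return total_pf / servers


per_server = implied_petaflops_per_server(WSE3_FP16_PETAFLOPS, DGX_B200_EQUIVALENTS)
print(f"Implied FP16 throughput per DGX B200: {per_server:.1f} petaflops")
```

In other words, the comparison assumes roughly 36 petaflops of FP16 per DGX B200 server.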

Among other news: Adobe finally launched Firefly Video in beta, and I have already installed Premiere Pro so I can use it on my next video project. Perplexity launched their own version of Deep Research, which is better than o3-mini and almost as good as the full OpenAI Deep Research, but it is released for free (!) and is much faster. France secured €109 billion in AI investments, and it is clear which European country will be leading the way forward in terms of AI development. And lastly, Lantmäteriet in Sweden has finally made huge amounts of Swedish geographic data free and open, and if you’re a map nerd like me you have probably already checked out their API and started categorizing your downloaded data!

Thank you for being a Tech Insights subscriber!

THIS WEEK’S NEWS:

- Adobe Debuts Firefly Video as First Commercially Safe Generative AI for Video

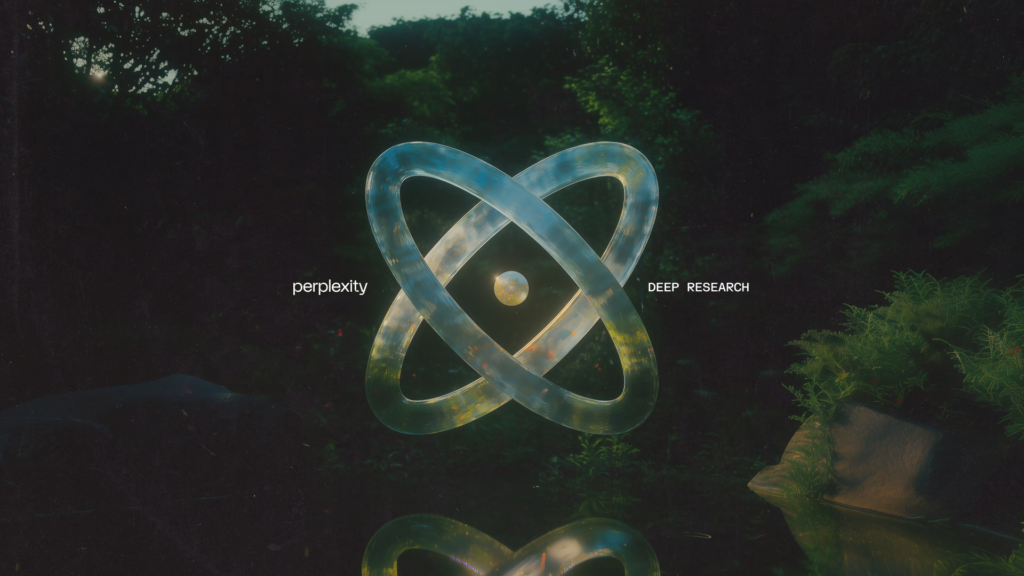

- Perplexity AI Launches Free Deep Research Tool to Compete with ChatGPT and Gemini

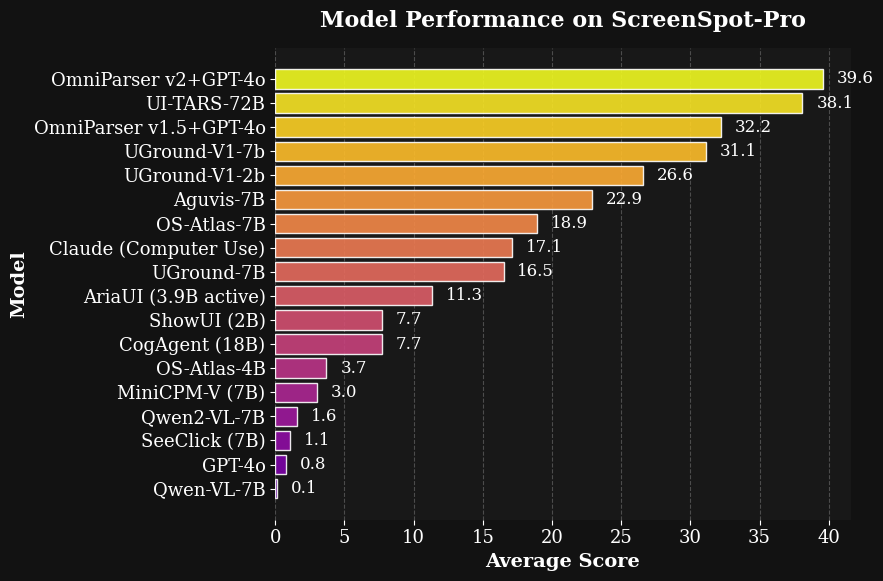

- Microsoft OmniParser 2 Turns Any LLM into a Computer Use Agent

- Perplexity’s New Sonar Model Processes 1,200 Tokens Per Second

- OpenAI Brings Data Storage to Europe for Enterprise and Edu Customers

- France Secures Record €109B AI Investment Package

- ByteDance and HKU Introduce Goku AI for Image and Video Generation

- Google’s AlphaGeometry2 Now Outperforms the Smartest High Schoolers in Math

- Lantmäteriet Makes Swedish Geographic Data Free and Open

- Deepgram Releases New Speech Recognition Model Nova-3

- Anthropic Launches AI Economic Index

- Sam Altman Outlines Three Key Observations on AI Economics and Future Impact

- OpenAI Releases Major Model Spec Update

- Gemini Flash 2.0 Leads New AI Agent Performance Rankings

Adobe Debuts Firefly Video as First Commercially Safe Generative AI for Video

The News:

- Last week Adobe launched the Firefly Video Model in public beta, a generative AI tool for creating and extending video content via text prompts or images. Integrated with Premiere Pro and Adobe’s Firefly web app, it emphasizes commercial safety by training on Adobe Stock and licensed content to avoid IP disputes.

- According to Adobe, Firefly is the only generative AI video model that is IP-friendly and commercially safe, so brands and creative professionals can use it confidently for production-ready content. Unlike OpenAI’s Sora or Runway’s Gen-3 Alpha, Firefly Video integrates directly with Premiere Pro.

- Brands and agencies using Firefly now include Deloitte Digital, dentsu, IBM, IPG Health, Mattel, PepsiCo/Gatorade, Stagwell and Tapestry.

- Firefly is available in two subscription tiers: Standard ($9.99/month) with 20 monthly 1080p video generations, and Pro ($29.99/month) with 70 monthly generations at up to 4K.

My take: Firefly Video is the only generative AI tool I would trust for anything commercial. My only concern with Firefly is that it is exclusive to Adobe Premiere. My personal choices of video editor today are Resolve and Final Cut Pro with CommandPost as a shared #1, and Premiere Pro as a clear #2. I would love to see Adobe add support for other tools such as Final Cut or Resolve, but I seriously doubt this is going to happen. On the other hand, I wonder how many will switch to Premiere Pro if Firefly makes their job much quicker and more efficient?

Perplexity AI Launches Free Deep Research Tool to Compete with ChatGPT and Gemini

https://www.perplexity.ai/hub/blog/introducing-perplexity-deep-research

The News:

- Perplexity AI introduced a Deep Research feature that performs comprehensive research by searching multiple sources, reading documents, and synthesizing information into detailed reports in under 3 minutes.

- Deep Research is available for free to all users, with paid users ($20/month) getting access to 500 daily queries. Free users have a limited number of queries per day (the exact number is not specified).

- Deep Research achieves 93.9% accuracy on the SimpleQA benchmark and 20.5% on Humanity’s Last Exam, outperforming several competitors including Gemini Thinking and o3-mini.

- The feature is currently available on the web platform, with iOS, Android, and Mac apps coming soon. Users can export their research results as PDF documents or convert them into shareable Perplexity Pages.

My take: As you have probably already guessed, Perplexity Deep Research is powered by DeepSeek-R1, deployed locally on Perplexity’s own infrastructure. They don’t say which version it is, but I am guessing it’s the 70B version. And a score of 20.5% on “Humanity’s Last Exam” is outstandingly good. OpenAI’s Deep Research, which requires a $200/month subscription, scores 26.6%, and OpenAI o3-mini (high) scores 13%. And now Perplexity releases this feature for free! If you haven’t already checked it out, do yourself a favor and go to perplexity.ai and ask it a difficult question!

Microsoft OmniParser 2 Turns Any LLM into a Computer Use Agent

The News:

- Microsoft just released OmniParser V2, an open-source tool that converts UI screenshots into structured data that large language models (LLMs) can understand and interact with, enabling AI agents to control Windows computers through natural language commands.

- OmniParser achieves 60% faster processing compared to its predecessor, with average latencies of 0.6 seconds per frame on an A100 GPU. It supports multiple LLM platforms out of the box, including OpenAI (4o/o1/o3-mini), DeepSeek (R1), Qwen (2.5VL), and Anthropic Computer Use.

- The system includes OmniTool, a dockerized Windows environment that provides essential tools for agents to interact with the operating system.

- Performance tests show significant improvements, with OmniParser+GPT-4o achieving 39.6 average accuracy on the ScreenSpot Pro benchmark, a substantial improvement from GPT-4o’s original score of 0.8.

My take: Wow, just look at this improvement in computer use performance in less than a year! Now bring on GPT 5, Grok 3 and Claude 4 and let’s see what OmniParser V2 can really do! And just like every good UX designer knows how to design for accessibility, I am willing to bet that the next big trend in UX design will be how to design for LLM interactions. The better your online service works with tools like OmniParser the more likely it is that your future customers will choose your service instead of a competitor. I haven’t seen that much discussion yet about designing UX for LLMs, but I’m willing to bet it’s going to be a hot topic later this year!

Perplexity’s New Sonar Model Processes 1,200 Tokens Per Second

https://the-decoder.com/perplexity-ai-launches-new-ultra-fast-ai-search-model-sonar

The News:

- Perplexity AI just released a new version of their Sonar search model, powered by Meta’s Llama 3.3 70B.

- The new version achieves an unprecedented speed of 1,200 tokens per second through a partnership with Cerebras Systems and their Wafer Scale Engine technology.

- In internal testing, Sonar outperforms models like GPT-4o mini and Claude 3.5 Haiku, while matching or exceeding premium models like GPT-4o and Claude 3.5 Sonnet in search tasks.

- The service is currently available to Perplexity Pro subscribers.

My take: This again shows the strength of using custom hardware to boost inference speeds. Using specialized hardware from Cerebras Systems, Perplexity can now show search results nearly 10 times faster than what is possible with models like Gemini 2.0 Flash. With test-time compute becoming the new standard for all new models, open source models and custom-built hardware sure look like a winning combination.
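To make the speed difference concrete, here is a minimal sketch that converts tokens per second into wait time. The 1,200 tokens/second figure comes from the announcement; the baseline of one tenth of that is an assumption read off the “nearly 10 times faster” remark, and the 600-token answer length is purely hypothetical.

```python
def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time to stream num_tokens at a constant generation speed."""
    return num_tokens / tokens_per_second


SONAR_TPS = 1200.0              # quoted in the announcement
BASELINE_TPS = SONAR_TPS / 10   # assumption: "nearly 10 times faster"
ANSWER_TOKENS = 600             # hypothetical answer length

sonar_s = generation_seconds(ANSWER_TOKENS, SONAR_TPS)
baseline_s = generation_seconds(ANSWER_TOKENS, BASELINE_TPS)
print(f"Sonar: {sonar_s:.1f} s, baseline: {baseline_s:.1f} s")
```

Under these assumptions a 600-token answer arrives in half a second instead of five, which is the difference between an answer that feels instant and one you wait for.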

OpenAI Brings Data Storage to Europe for Enterprise and Edu Customers

https://openai.com/index/introducing-data-residency-in-europe

The News:

- OpenAI has launched European data residency for ChatGPT Enterprise, ChatGPT Edu, and API Platform, enabling European organizations to store and process their data within European borders while complying with local data protection laws like GDPR.

- New API customers can choose to process data in Europe with zero data retention, meaning AI model requests and responses won’t be stored on OpenAI’s servers.

- For new ChatGPT Enterprise and Edu customers, all customer content – including conversations, prompts, images, uploaded files, and custom bots – will be stored within European borders.

- The service currently only applies to new projects – existing projects cannot be migrated to European data residency.

My take: Well, if you wondered why Microsoft is investing $3.2 billion in Swedish AI infrastructure, the picture is becoming much clearer now. Also note that this does not affect API customers that do not have an Enterprise or Edu agreement. Now, before you rush ahead and start applying for an OpenAI Enterprise account, just know that it’s not cheap. The price is $60 per user, the minimum contract length is 12 months, and the minimum number of seats is 150. Until OpenAI enables zero data retention for Teams or Pro users, I still think most companies will go the route of using private GPT-4o hosting in Azure plus a custom frontend like LibreChat for sensitive data.
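For a sense of scale, the minimum commitment implied by those three numbers can be worked out directly. This is a rough sketch that assumes the $60 price is per user per month (the text does not state the billing period) and ignores taxes and discounts.

```python
PRICE_PER_USER_USD = 60   # from the text; assumed to be per user per month
MIN_SEATS = 150           # from the text
MIN_CONTRACT_MONTHS = 12  # from the text


def minimum_commitment_usd(price: int, seats: int, months: int) -> int:
    """Smallest total contract value under the stated minimums."""
    return price * seats * months


total = minimum_commitment_usd(PRICE_PER_USER_USD, MIN_SEATS, MIN_CONTRACT_MONTHS)
print(f"Minimum Enterprise commitment: ${total:,}")
```

Under that assumption the entry ticket is a six-figure sum, which explains why smaller companies look at self-hosted alternatives.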

France Secures Record €109B AI Investment Package

The News:

- France announced that it has secured €109 billion in private investments for AI infrastructure and development, making it the largest AI investment package in European history.

- The UAE will invest €30-50 billion to build a one-gigawatt data center campus in France.

- Canadian firm Brookfield Corporation commits €20 billion for AI infrastructure development.

- French companies including Iliad SA, Orange SA, and Thales SA will contribute to the investment package.

- France has identified 35 sites ready for immediate data center development, supported by the country’s energy surplus from nuclear power.

- The country plans to increase its annual output of AI and data science specialists from 40,000 to 100,000.

My take: France has 57 active nuclear reactors across 18 sites, with a combined capacity of approximately 63,000 megawatts. While countries like Germany and Sweden have actively shut down their nuclear power plants, France has been continuously building new ones. The latest, Flamanville 3, has been under construction since 2007 and is expected to reach full capacity by autumn 2025. Additionally, the French government has announced plans to build six new reactors to replace aging units and secure future energy supplies.

This investment of €109 billion actually matches recent US announcements when adjusted for market size. Unlike previous AI summits that focused on safety, France’s approach emphasized action and adoption, with over 1,000 participants from more than 100 countries.

ByteDance and HKU Introduce Goku AI for Image and Video Generation

https://saiyan-world.github.io/goku

The News:

- ByteDance and the University of Hong Kong have released Goku, a unified AI model for generating both images and videos from text prompts. The model uses rectified flow Transformers to create high-quality visual content with improved consistency and realism.

- Goku can generate videos longer than 20 seconds featuring stable hand movements and highly expressive facial and body actions, making it particularly useful for creating virtual digital human videos.

- The system uses a 3D joint image-video variational autoencoder (VAE) that processes both images and videos in a unified way.

- Training data includes 160 million images and 36 million videos, with strict quality controls including minimum resolution requirements of 480 pixels and bit rates of at least 500 kilobits per second.
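The quality thresholds in the last bullet amount to a simple metadata filter. The sketch below is illustrative only and is not Goku’s actual data pipeline: the `Clip` record and file names are hypothetical, and applying the 480-pixel minimum to the shorter side of the frame is an assumption, since the text does not say which dimension is meant.

```python
from dataclasses import dataclass

MIN_SHORT_SIDE_PX = 480  # from the text; "shorter side" is an assumption
MIN_BITRATE_KBPS = 500   # from the text


@dataclass(frozen=True)
class Clip:
    """Hypothetical metadata record for one candidate training video."""
    name: str
    width: int
    height: int
    bitrate_kbps: float


def passes_quality_filter(clip: Clip) -> bool:
    """Keep a clip only if it meets both the resolution and bit-rate floors."""
    return (min(clip.width, clip.height) >= MIN_SHORT_SIDE_PX
            and clip.bitrate_kbps >= MIN_BITRATE_KBPS)


candidates = [
    Clip("a.mp4", 1280, 720, 2500),
    Clip("b.mp4", 640, 360, 900),   # rejected: shorter side below 480 px
    Clip("c.mp4", 854, 480, 350),   # rejected: bit rate below 500 kbps
]
kept = [c.name for c in candidates if passes_quality_filter(c)]
print(kept)
```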

My take: You can clearly see the direction ByteDance is going with their video generation tools: virtual influencers. Check out the video posted in this Reddit thread and think about how this technology will improve in the coming year, and what effect it will have on social media and marketing. Companies will be able to create their own virtual influencer that will say and do anything they want, 24 hours a day, every day. And no one will be able to spot that it is digitally generated unless they are told.

Read more:

- [2502.04896] Goku: Flow Based Video Generative Foundation Models

- Goku, the new AI video model from ByteDance (source below) : r/singularity

Google’s AlphaGeometry2 Now Outperforms the Smartest High Schoolers in Math

The News:

- AlphaGeometry2 (AG2) is DeepMind’s latest AI system that solves complex geometry problems at a level exceeding human experts. The system achieved an 84% success rate on International Mathematical Olympiad (IMO) geometry problems from 2000-2024, surpassing the average gold medalist performance.

- The system combines Google’s Gemini language model with a symbolic engine called DDAR, which verifies mathematical proofs and ensures logical consistency.

- AG2 solved 42 out of 50 benchmark problems from past IMO competitions, compared to the average gold medalist score of 40.9.

My take: One year ago Google DeepMind released their first AlphaGeometry, which managed to solve 25 “Olympiad geometry problems” within the standard time limit. And now, one year later, AlphaGeometry2 beats gold medalists in maths. There are a few limitations in the current version: for example, technical issues prevent the model from solving problems with a variable number of points, nonlinear equations, and inequalities. The model also did worse on another set of harder IMO problems. But look at the progress in one year, and then think 1-2 years ahead. It won’t be long now until we have foundation models that exceed all humans at all math problems.

Lantmäteriet Makes Swedish Geographic Data Free and Open

The News:

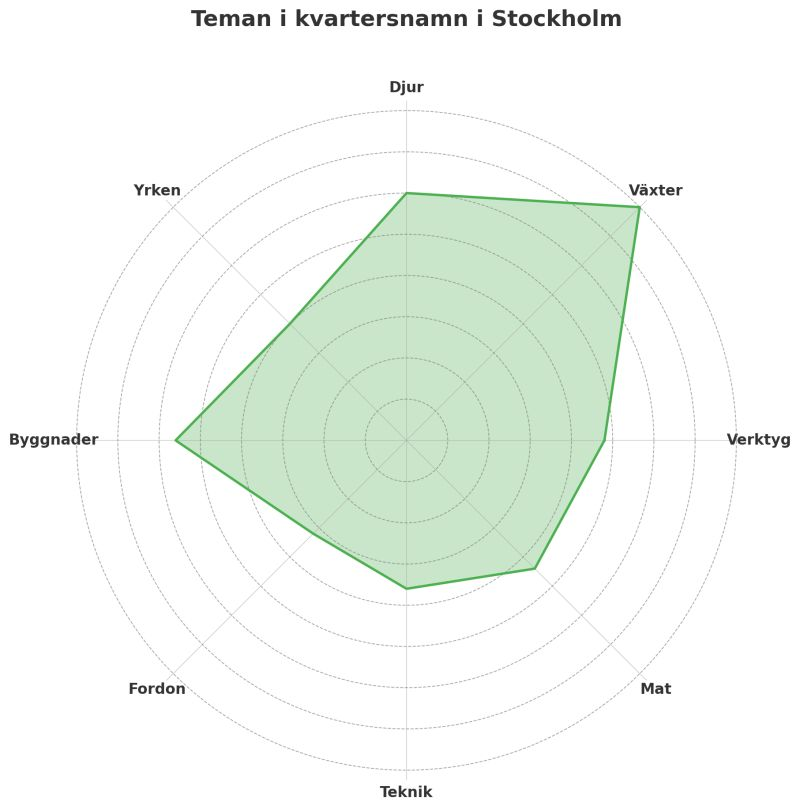

- As of February 3, 2025, Sweden’s mapping agency Lantmäteriet released valuable geographic data sets free of charge, including property boundaries, elevation data, aerial photos, maps, and address information.

- The initiative is backed by €60 million in annual government funding and is expected to generate societal benefits worth 10-21 billion SEK per year.

- The change follows an EU directive requiring valuable public data to be made freely available with minimal restrictions.

- The release excludes Swepos, Lantmäteriet’s navigation support system for precise positioning.

My take: If you like data, this is a gold mine. People have already come up with lots of cool ideas for how the data can be used, and I have listed two of them below. If you work with city planning, mobility solutions, safety solutions or anything else that requires things like buildings, elevation and boundaries, go and check out the release and see if there is anything you can use!

Read more:

- Anders Elias on LinkedIn: Spännande att utforska kvartersnamn med öppna data

- Anders Elias on LinkedIn: Äntligen finns kommungränser som öppna data

Deepgram Releases New Speech Recognition Model Nova-3

https://deepgram.com/learn/introducing-nova-3-speech-to-text-api

The News:

- Nova-3 is a new speech-to-text model that converts spoken words to written text in real-time. It makes fewer errors than previous versions – 54.3% fewer mistakes in live transcription and 47.4% fewer in recorded audio processing.

- The model works with 10 languages at once: English, Spanish, French, German, Hindi, Russian, Portuguese, Japanese, Italian, and Dutch. Users don’t need to switch between different language models.

- Users can add up to 100 specific terms or phrases without needing technical knowledge. This helps industries like healthcare where specific terminology is common.

My take: If you have ever tried the Microsoft Teams auto-transcribe feature and you have a bit of a “Swedish accent”, you know how weird the spelling can be sometimes, and how slow it is. Nova-3 shows better performance than OpenAI’s Whisper, and reaches up to an 8:1 preference ratio among users. I am sure we will quite soon reach a point where real-time text transcription “just works”, and we will look back at the age when people had to write subtitles by hand like it was a thousand years ago.

Anthropic Launches AI Economic Index

https://www.anthropic.com/economic-index

The News:

- Anthropic introduced a new data-driven initiative that analyzes millions of anonymized Claude.ai conversations to track AI’s impact on labor markets and the economy. This represents the first large-scale study using actual AI interaction data rather than surveys or expert opinions.

- Key findings show that 36% of occupations use AI in at least 25% of their tasks, while only 4% of occupations use AI in 75% or more of their tasks.

- The index reveals AI is primarily used for augmentation (57%) rather than automation (43%), indicating AI serves more as a collaborative tool than a replacement.

- Software development and technical writing show the highest AI adoption rates, while agriculture, fishing, and forestry demonstrate the lowest usage.

- Mid-to-high wage occupations show higher AI adoption rates, with software engineering leading at 37.2% of queries to Claude.

My take: Not many surprises in this study. AI usage is based on model quality, meaning few use it for automation and more for augmentation. The main value of studies like this comes in 2-3 years’ time, when we can start to predict where things are heading and how quickly they are heading in that direction.

Sam Altman Outlines Three Key Observations on AI Economics and Future Impact

https://blog.samaltman.com/three-observations

The News:

- OpenAI CEO Sam Altman outlined key trends in AI development, emphasizing the scaling economics of intelligence, the rapid cost reduction of AI usage, and the implications of AI agents.

- The first trend is that the intelligence of an AI model roughly equals the log of the resources used to train and run it. It appears that you can spend arbitrary amounts of money and get continuous and predictable gains.

- The second trend is that the cost to use a given level of AI falls about 10x every 12 months, and lower prices lead to much more use. Moore’s law changed the world at 2x every 18 months; this is “unbelievably stronger”.

- The third trend is that the socioeconomic value of linearly increasing intelligence is super-exponential in nature. A consequence of this is that Sam Altman sees no reason for exponentially increasing investments to stop in the near future.

- The impact will be gradual but transformative, affecting scientific progress most significantly while potentially causing dramatic shifts in goods pricing – with everyday items becoming cheaper while luxury goods and limited resources like land may become more expensive.
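The first two trends are easier to compare once they are put on the same time scale. The sketch below is a back-of-the-envelope illustration, not Altman’s own model: `annual_factor` simply converts “Nx every M months” into a per-year factor, and `capability` uses a base-10 logarithm with arbitrary units to show what “intelligence roughly equals the log of resources” implies.

```python
import math


def annual_factor(factor: float, period_months: float) -> float:
    """Convert 'factor every period_months' into an equivalent 12-month factor."""
    return factor ** (12.0 / period_months)


def capability(resources: float) -> float:
    """Illustrative log-scaling: every 10x in resources adds one unit."""
    return math.log10(resources)


moore_per_year = annual_factor(2, 18)     # Moore's law: 2x every 18 months
ai_cost_per_year = annual_factor(10, 12)  # AI cost: 10x every 12 months

print(f"Moore's law per year: {moore_per_year:.2f}x")
print(f"AI cost decline per year: {ai_cost_per_year:.0f}x")
print(f"After 3 years: {moore_per_year ** 3:.0f}x vs {ai_cost_per_year ** 3:.0f}x")
print(f"Gain from 10x more resources: {capability(1000) - capability(100):.1f} unit")
```

Compounded over three years, the two rates diverge from about 4x to 1,000x, while under log-scaling each additional unit of capability costs ten times more than the previous one.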

My take: If you are interested in AI and its potential for disrupting our entire society, you should probably just go ahead and read the full article. I agree with Sam Altman that AI agents will soon feel like “virtual co-workers”. Even if it sounds like science fiction today, this future will come fast, and if you are not prepared for it, catching up will be both expensive and difficult.

OpenAI Releases Major Model Spec Update

https://model-spec.openai.com/2025-02-12.html

The News:

- Last week OpenAI released a major update to Model Spec, expanding it from 10 to 63 pages, also making it freely available under a Creative Commons Zero (CC0) license. The Model Spec is OpenAI’s rulebook that defines how their AI models should behave, think, and interact with users.

- The update allows AI models to engage with controversial topics more openly while maintaining ethical boundaries. Instead of avoiding sensitive subjects, models are now encouraged to “seek the truth together” with users.

- OpenAI is implementing a more nuanced approach to content moderation, including exploring a “grown-up mode” for appropriate mature content while maintaining strict bans on harmful material.

- The timing coincides with OpenAI’s preparation for GPT-4.5 (codenamed Orion) and eventual GPT-5 release, suggesting a proactive approach to ethical AI development.

My take: Finally an open-source release by OpenAI! 🎉 My personal view and best guess on this “more nuanced” approach to content moderation is that it is hard to moderate the learning process when using strict reinforcement learning. We have just passed the point when AI is training itself, and from here on things are going to accelerate much quicker than before. It also means we lose some of the moderation capabilities we had previously, for both good and bad.

Gemini Flash 2.0 Leads New AI Agent Performance Rankings

https://huggingface.co/spaces/galileo-ai/agent-leaderboard

The News:

- Galileo Labs just launched a new AI agent leaderboard evaluating 17 leading language models across 14 benchmarks, including tests on tool usage and selection, long context, complex interactions, and more.

- Google’s Gemini Flash 2.0 topped the rankings with a 0.938 score, outperforming more expensive competitors while maintaining cost-effectiveness at $0.15/$0.6 per million tokens (input/output).

- Open-source models are showing competitive performance, with Mistral’s latest Small release achieving scores comparable to premium offerings at lower price points.
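The per-million-token prices quoted above translate into a bill as follows. This is a minimal sketch: the two prices come from the bullet on Gemini Flash 2.0, while the workload of 2 million input tokens and 500,000 output tokens is a hypothetical example.

```python
INPUT_USD_PER_MTOK = 0.15   # from the text
OUTPUT_USD_PER_MTOK = 0.60  # from the text


def workload_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Total cost when input and output tokens are billed per million."""
    return (input_tokens / 1_000_000 * INPUT_USD_PER_MTOK
            + output_tokens / 1_000_000 * OUTPUT_USD_PER_MTOK)


# Hypothetical agent workload: 2M tokens read, 500k tokens written.
cost = workload_cost_usd(2_000_000, 500_000)
print(f"Estimated cost: ${cost:.2f}")
```

Agent workloads tend to read far more than they write, so the input price usually dominates the bill even though output tokens cost four times as much each.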

My take: Most current models are just slightly too stupid to be usable as agents, but I predict this will change completely once the next generation models are released later this year. It’s like AI coding, once it passed a certain level it suddenly got usable, and we should see the same with agentic workflows within a few months, making benchmarks like this exciting to follow.